A New Identity and Financial Network

Introducing Worldcoin

Worldcoin was founded with the mission of creating a globally inclusive identity and financial network, owned by the majority of humanity. If successful, Worldcoin could considerably increase economic opportunity, scale a reliable solution for distinguishing humans from AI online while preserving privacy, enable global democratic processes, and show a potential path to AI-funded UBI.

Worldcoin consists of a privacy-preserving digital identity network (World ID) built on proof of personhood and, where laws allow, a digital currency (WLD). Every human is eligible for a share of WLD simply for being human. World ID and WLD are currently complemented by World App, the first frontend to World ID and the Worldcoin Protocol, developed by the contributor team at Tools for Humanity (TFH).

“Proof of personhood” is one of the core ideas behind Worldcoin and refers to establishing that an individual is both human and unique. Once this is established, the individual can assert that they are a real person, distinct from every other real person, without having to reveal their real-world identity.

Today, proof of personhood is an unsolved problem on a global scale, making it difficult to vote online or distribute value on a large scale. The problem is even more pressing as increasingly powerful AI models will further amplify the difficulty of distinguishing humans from bots. If successful as part of Worldcoin, World ID could become a global proof of personhood standard.

Some of the core assumptions behind Worldcoin are:

- Proof of personhood is a missing and necessary digital primitive. This primitive will become more important as increasingly powerful AI models become available.

- Scalable and inclusive proof of personhood, for the first time, makes it possible to align the incentives of all network participants around adding real humans to the network. Bitcoin is issued to secure the Bitcoin network. Worldcoin is issued to grow the Worldcoin network, with security inherited from Ethereum.

- In a time of increasingly powerful AI, the most reliable way to issue a global proof of personhood is through custom biometric hardware.

The following dynamic Whitepaper shares the reasoning behind the implementation of the project as well as the current state and roadmap.

World ID

World ID is a privacy-preserving proof of personhood. Users verify their humanness with a custom biometric device called the Orb and can then prove it online while maintaining their privacy through zero-knowledge proofs. The Orb was designed based on the realization that custom biometric hardware might be the only viable long-term solution for issuing AI-safe proof of personhood verifications. World IDs are issued on the Worldcoin protocol, which allows individuals to prove that they are human to any verifier (including web2 applications) while preserving their privacy. In the future, it should be possible to issue other credentials on the protocol as well.

World ID aspires to be personbound, meaning a World ID should only be used by the individual it was issued to. It should be very difficult to use by a fraudulent actor who stole or acquired World ID credentials. Further, it should always be possible for an individual to regain possession of a lost or stolen World ID.

Worldcoin Token

While network effects will ultimately come from useful applications built on top of the financial and identity infrastructure, the token is issued to all network participants to align their incentives around the growth of the network. This is especially important early on, to bootstrap the network and overcome the “cold start problem”. This could make the Worldcoin token (WLD) the most widely distributed digital asset.

World App

World App is the first frontend to World ID: it guides individuals through verification with the Orb, custodies their World ID credentials, and implements the cryptographic protocols for sharing those credentials with third parties in a privacy-preserving manner. It is designed to provide frictionless access to global decentralized financial infrastructure. Eventually, there should be many different wallets integrating World ID.

How does Worldcoin Work?

Worldcoin revolves around World ID, a privacy-preserving global identity network. Using World ID, individuals will be able to prove that they are a real, unique human to any platform that integrates with the protocol. This will enable fair airdrops, provide protection against bots/sybil attacks on social media, and enable the fairer distribution of limited resources. Furthermore, World ID can also enable global democratic processes and novel forms of governance (e.g., via quadratic voting), and it may eventually support a path to AI-funded UBI.
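Consider a fair airdrop. The platform needs to accept each human at most once per action without learning who they are. The sketch below assumes a hypothetical per-action "nullifier", computed by hashing a secret held by the user together with an action identifier. `nullifier_for` and `AirdropVerifier` are illustrative names, not World ID APIs. The sketch deliberately omits the zero-knowledge proof that, in a real protocol, would also show the nullifier belongs to an Orb-verified World ID. On its own, therefore, it does not stop anyone from inventing new secrets.

```python
import hashlib


def nullifier_for(identity_secret: bytes, action: str) -> str:
    """Hypothetical per-action nullifier: the same secret and action always give
    the same value, while different actions give unrelated-looking values."""
    return hashlib.sha256(identity_secret + b"|" + action.encode()).hexdigest()


class AirdropVerifier:
    """Hypothetical verifier for one action: accepts each nullifier at most once.
    It only ever sees nullifiers, never the identity secret itself."""

    def __init__(self) -> None:
        self.seen: set[str] = set()

    def claim(self, nullifier: str) -> bool:
        if nullifier in self.seen:
            return False  # this person already claimed for this action
        self.seen.add(nullifier)
        return True


alice = b"alice-identity-secret"
airdrop = AirdropVerifier()
print(airdrop.claim(nullifier_for(alice, "airdrop-1")))  # True
print(airdrop.claim(nullifier_for(alice, "airdrop-1")))  # False
# A different action sees an unrelated value for the same person.
print(nullifier_for(alice, "airdrop-1") != nullifier_for(alice, "vote-7"))  # True
```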

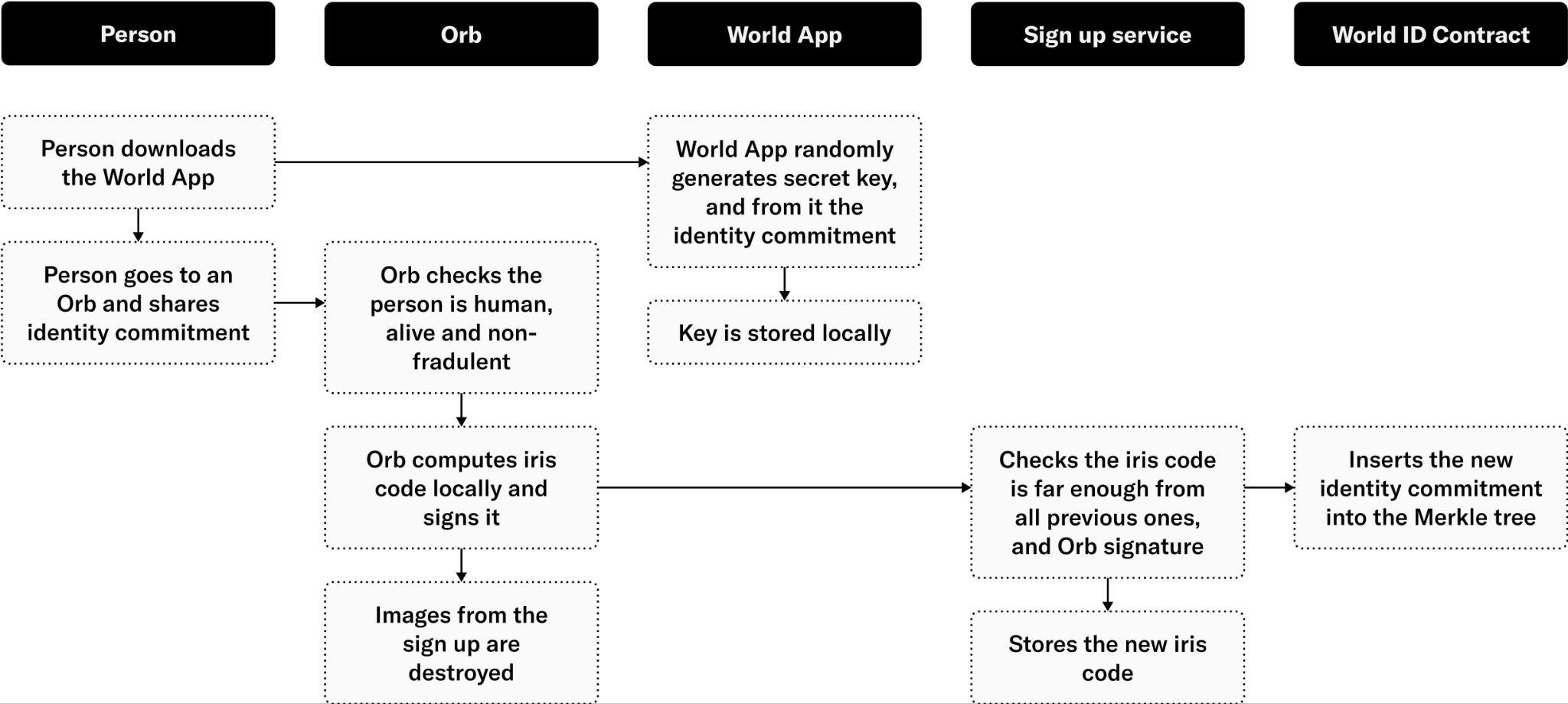

To engage with the Worldcoin protocol, individuals must first download World App, the first wallet app that supports the creation of a World ID. They then visit a physical imaging device called the Orb to get their World ID Orb-verified. Most Orbs are operated by a network of independent local businesses called Orb Operators. The Orb uses multispectral sensors to verify humanness and uniqueness before issuing an Orb-verified World ID, and all images are promptly deleted on-device by default (absent explicit consent to Data Custody).

Potential Applications

Worldcoin could significantly increase equality of opportunity globally by advancing a future where everyone, regardless of their location, can participate in the global digital economy through universally-accessible decentralized financial and identity infrastructure. As the network grows, so should its utility.

Today, many interactions in the digital realm are not possible globally. The way humans transact value, identify themselves, and interact on the internet is likely to change fundamentally. With universal access to finance and identity, the following future becomes possible:

Finance

Owning & Transferring Digital Money: Sending money will be near-instant, borderless and available to everyone, globally. The world could be connected financially, and everyone would be able to interact economically on the internet. The COVID relief fund for India offers a hint of what is possible: individuals around the world raised over $400 million in a short period of time to support the country. Overall, this has the potential to connect people on a global scale unlike anything previously seen in human history.

Digital money is safer than cash, which can be more easily stolen or forged. This is especially important in crisis situations where instant cross-border financial transactions need to be possible, such as during the Ukrainian refugee crisis, where USDC was used to distribute direct aid. Additionally, digital money is an asset that individuals can own and control directly without having to trust third parties.

Identity

Keep the Bots Out: Bots on Twitter, spam messages, and robocalls are all symptoms of the lack of sound and frictionless digital identity. These issues are exacerbated by rapidly advancing AI models, which can solve CAPTCHAs and produce content that is convincingly "human". As services ramp up defenses against such content, it becomes essential that an inclusive and privacy-preserving solution for proof of personhood is available as public infrastructure. If every message or transaction included a "verified human" property, a lot of noise could be filtered from the digital world.

Governance: Currently, collective decision making in web3 largely relies on token-based governance (one token, one vote), which excludes some people from participating and heavily favors those with more economic power. A reliable sybil-resistant proof of personhood like World ID opens up the design space for global democratic governance mechanisms not just in web3 but for the internet. Additionally, for AI to maximally benefit all humans, rather than just a select group, it will become increasingly important to include everyone in its governance.

Intersection of Finance and Identity

Incentive Alignment: Coupons, loyalty programs, referral programs and, more generally, sharing value with customers are traditionally prone to fraud, as the incentives for fraudulent actors are high. Frictionless and fraud-resistant digital identity helps to align incentives and benefit both consumers and companies. This could even incept a new wave of companies owned in part by their users.

Equal Distribution of Scarce Resources: Crucial elements of modern society, including subsidies and social welfare, can be distributed more equitably by employing proof of personhood. This is particularly pertinent in developing economies, where social benefit programs confront the issue of resource capture: fake identities employed to acquire more than a person’s fair share of resources. In 2021, India saved over $500 million in subsidy programs by implementing a biometric-based system that reduced fraud. A decentralized proof of personhood protocol can extend similar benefits to any project or organization globally. As AI advances, fairly distributing access and some of the created value through UBI will play an increasingly vital role in counteracting the concentration of economic power. World ID could ensure that each individual registers only once, guaranteeing equitable distribution.

Proof of Personhood (PoP)

Different applications have different requirements for PoP. For high-stakes use cases such as global UBI, the democratic governance of AI and the Worldcoin project, a highly secure and inclusive PoP mechanism to prevent multiple registrations is needed. Therefore, the Worldcoin developer community with the Worldcoin Foundation is laying the foundations for a high-assurance PoP mechanism with World ID. World IDs are issued to every unique human through biometric verification devices, with the first such device being the Orb. The following sections walk through the fundamental building blocks of PoP and how those are implemented in the context of World ID.

Building Blocks

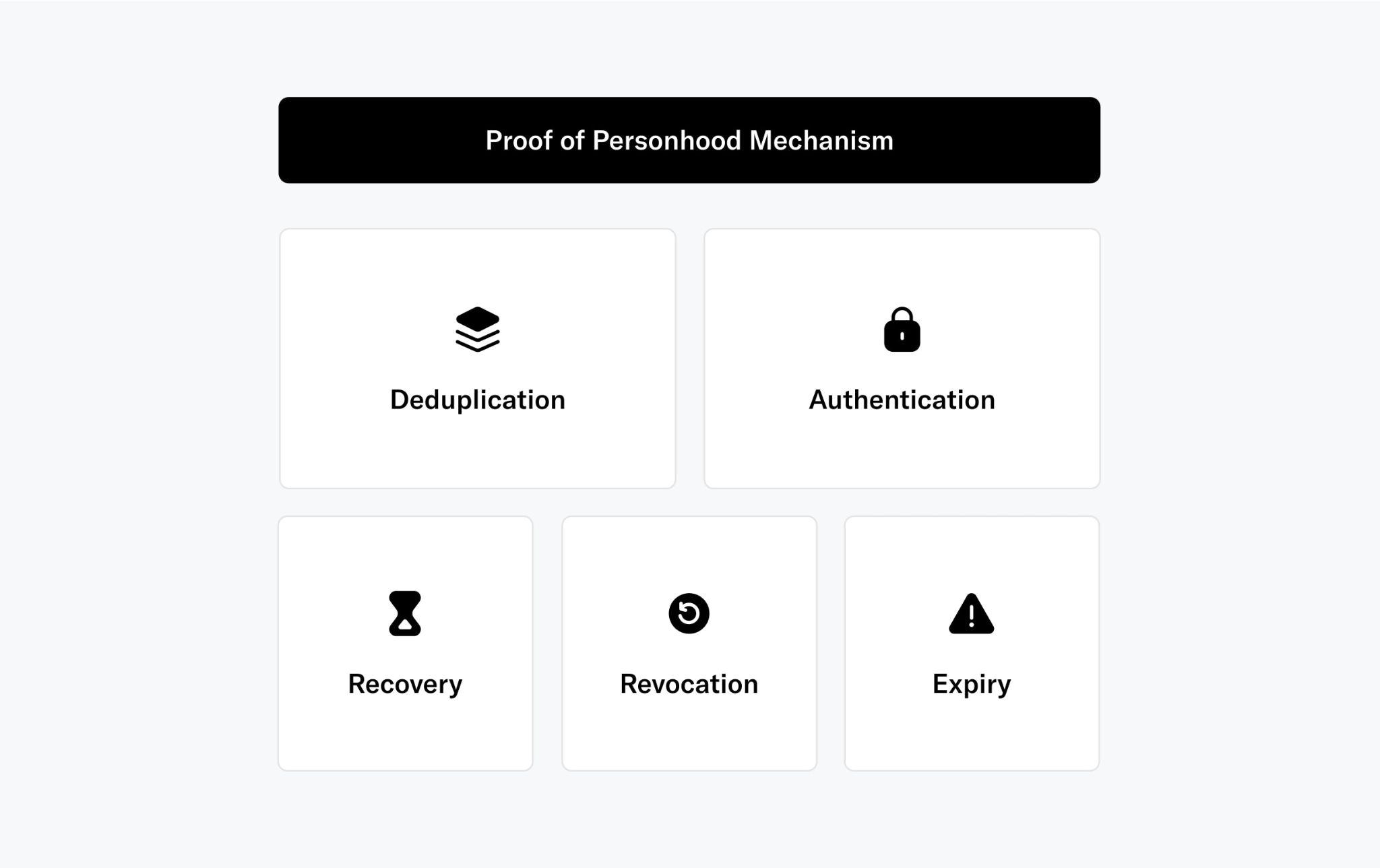

At a high level, several building blocks are required for an effective PoP mechanism: “deduplication” to ensure everyone can verify only once, “authentication” to ensure only the legitimate owner of the proof of personhood credential can use it, and “recovery” in case of lost or compromised credentials. This section discusses each of them briefly.

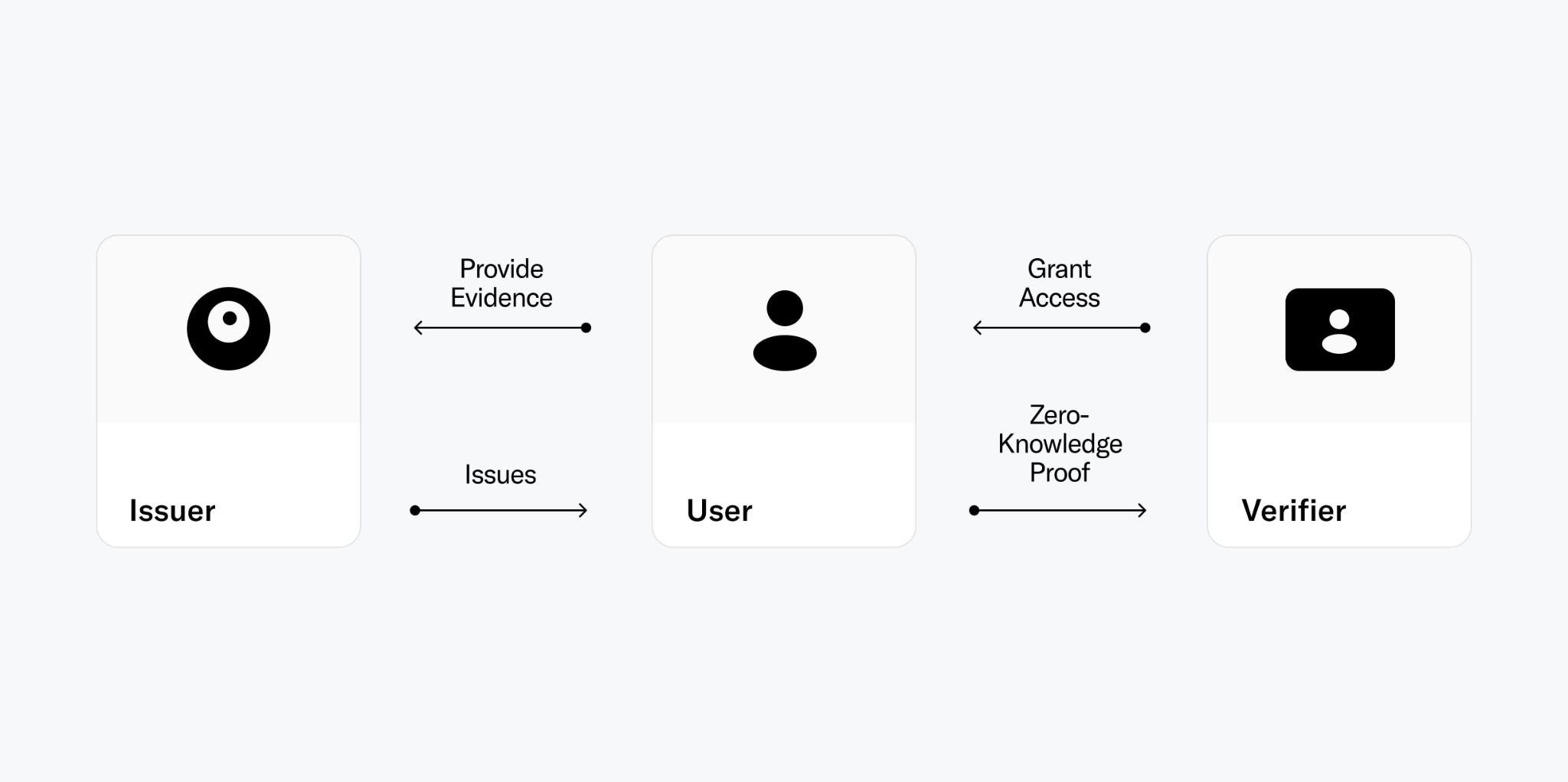

A proof of personhood mechanism consists of three different actors and the data that they exchange.

For the context of this section, these terms are defined as follows:

- User: An individual seeking to prove specific claims about herself in order to access certain resources or more generally qualify for certain actions. Within the context of a PoP protocol those claims are related to proving uniqueness and personhood.

- Credential: A collection of data that serves as proof for particular attributes of the user that indicate the user is a human being. This could be a range of things, from the possession of a valid government ID to being verified as human and unique through biometrics.

- Issuer: A trusted entity that affirms certain information about the user and grants them a PoP credential, which enables the user to prove their claims to others.

- Verifier: An entity that examines a user's PoP credential and checks its authenticity as part of a verification process to grant the user access to certain actions.
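The roles above can be modelled in a few lines. This is a minimal sketch under two assumptions. First, an HMAC key shared by issuer and verifier stands in for a real digital signature. A deployed system would use public-key signatures, so verifiers never hold the issuer's secret. Second, the `Credential`, `Issuer` and `Verifier` classes are hypothetical.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class Credential:
    subject: str  # pseudonymous identifier of the user, not a real-world name
    claim: str    # e.g. "unique-human"
    tag: bytes    # issuer's authentication tag over (subject, claim)


def _payload(subject: str, claim: str) -> bytes:
    return f"{subject}|{claim}".encode()


class Issuer:
    """Affirms a claim about a user and hands out a credential."""

    def __init__(self, key: bytes) -> None:
        self._key = key

    def issue(self, subject: str, claim: str = "unique-human") -> Credential:
        tag = hmac.new(self._key, _payload(subject, claim), hashlib.sha256).digest()
        return Credential(subject, claim, tag)


class Verifier:
    """Checks that a presented credential was really produced by the issuer."""

    def __init__(self, issuer_key: bytes) -> None:
        self._key = issuer_key

    def verify(self, cred: Credential) -> bool:
        expected = hmac.new(self._key, _payload(cred.subject, cred.claim), hashlib.sha256).digest()
        return hmac.compare_digest(expected, cred.tag)


key = b"demo-issuer-key"
cred = Issuer(key).issue("user-42")
print(Verifier(key).verify(cred))    # True
forged = Credential("user-43", cred.claim, cred.tag)  # tag copied onto another subject
print(Verifier(key).verify(forged))  # False
```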

Certain interactions between users, issuers and verifiers, like deduplication, recovery and authentication are important building blocks for a functional PoP mechanism. This section gives a high level overview of the building blocks of a general PoP mechanism. Detailed explanations on how those are implemented with World ID follow in later sections.

Deduplication

For a PoP to be useful, it needs to have a notion of uniqueness. If the PoP can be acquired multiple times and transferred to fraudulent actors or bots, it cannot be trusted and fails to serve its purpose. Therefore, a PoP mechanism needs to deduplicate between the users that are issued a proof of personhood credential. This is the hardest challenge for any PoP mechanism.

Authentication

To be useful, PoP credentials must be hard to transfer to someone else (e.g. bots), and hard for that recipient to use; otherwise they enable fraud. This is especially important to protect individuals who may be unaware of the consequences of selling their credentials. The challenge is inherent to identity systems as a whole. Authentication can prevent fraudsters from using credentials, even if the legitimate user is unaware of the fraud or attempts to collaborate with the fraudster.

When issuing PoP credentials, issuers only need to validate that someone is indeed a unique person. Beyond that, no additional personal information is required. However, each PoP credential needs to be uniquely tied to a specific person. Even if credentials are not transferable, wallets and phones can be transferred. Therefore, for high-integrity use cases, it is crucial to authenticate the user as the rightful owner of the PoP credential. This prevents the unauthorized use of credentials. A similar approach is followed during e.g. airline boarding, where an airline gate assistant verifies both the possession of a valid travel document and the consistency of the individual's identity with the document.
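The boarding-gate analogy translates into two independent checks. Does the presenter control the credential's key, and is the presenter the person it was issued to? A minimal sketch, assuming an HMAC challenge-response as a stand-in for proving key possession. A boolean `person_matches` serves as a placeholder for whatever biometric check a verifier uses. Both are hypothetical.

```python
import hashlib
import hmac
import secrets
from typing import Callable


def respond(credential_key: bytes, challenge: bytes) -> bytes:
    """Holder side: answer a challenge using the credential key (stand-in for a signature)."""
    return hmac.new(credential_key, challenge, hashlib.sha256).digest()


def authenticate(credential_key: bytes,
                 holder: Callable[[bytes], bytes],
                 person_matches: bool) -> bool:
    """Verifier side: require BOTH possession of the key and a matching person.

    `person_matches` is a placeholder for a separate biometric check.
    """
    challenge = secrets.token_bytes(32)  # fresh per attempt, so old answers cannot be replayed
    has_key = hmac.compare_digest(respond(credential_key, challenge), holder(challenge))
    return has_key and person_matches


key = secrets.token_bytes(32)


def phone(challenge: bytes) -> bytes:
    # Whoever holds the phone holds the key.
    return respond(key, challenge)


print(authenticate(key, phone, person_matches=True))   # True: the rightful owner
print(authenticate(key, phone, person_matches=False))  # False: a buyer of the phone
```

Possession alone is not enough. A transferred phone still answers the challenge correctly, so the person check is what makes the credential personbound.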

Recovery

If the user has lost access to their credentials or their credentials have been compromised, effective recovery mechanisms are needed. However, in setups where users are responsible for managing their own keys, this is a significant challenge. In the context of a PoP protocol, there are multiple mechanisms that can be used:

- Restoring a User-Managed Backup: The simplest method for credential recovery involves storing encrypted user-managed backups of their credentials. This allows users to restore their credentials, such as on a new device when their previous one is lost.

- Social Recovery: If no user-managed backup exists, but the user has set up social recovery, the credentials can be recovered through the help of friends and family.

- Recover Keys: If neither backups nor social recovery are available, the user needs to return to the issuer to regain access to their original credential. The user needs to prove to the issuer that they are the legitimate owner of a certain credential. Upon successful authentication, the issuer grants access to the credential again. This process is similar to obtaining a new government ID after losing the previous one. The user can get a new ID with the same information on it.[1] This process may not be viable for some credentials: for example, if a private key was generated by the user and only the public key is recorded by the issuer (e.g. World ID).

- Re-Issuance: Sometimes regaining access to the original credential through the issuer is not possible or is undesirable (e.g. due to identity theft). In these situations, re-issuance provides a way to invalidate the previous credential and issue a new one. This can be compared to freezing a credit card and ordering a new one. Importantly, the availability of a re-issuance mechanism to rotate keys makes the illegitimate acquisition of other individuals’ PoP credentials financially unviable from a game-theoretic perspective: the true holder can always recover their credentials and invalidate the bought or stolen credential. However, this does not protect against all cases of identity transfer, especially those that involve collusion or coercion.
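The recovery options above form a fallback order, which can be sketched as a simple decision function. The sketch also shows why re-issuance undermines a market for credentials. The issuer keeps one active key per person, so rotating it invalidates any copy a buyer holds. `RecoveryState` and `Registry` are hypothetical. So is the `person_id` key, which stands for however the issuer recognizes a returning person.

```python
from dataclasses import dataclass, field


@dataclass
class RecoveryState:
    has_backup: bool
    has_social_recovery: bool
    issuer_can_restore: bool  # False when the issuer only recorded a public key


def recovery_path(state: RecoveryState) -> str:
    """Return the first available mechanism, in the order described above."""
    if state.has_backup:
        return "restore-backup"
    if state.has_social_recovery:
        return "social-recovery"
    if state.issuer_can_restore:
        return "recover-keys"
    return "re-issuance"


@dataclass
class Registry:
    """Hypothetical issuer-side record of the single active key per person."""
    active: dict[str, str] = field(default_factory=dict)

    def issue_or_rotate(self, person_id: str, public_key: str) -> None:
        # Overwriting the entry invalidates the previous key, including a sold or stolen copy.
        self.active[person_id] = public_key

    def is_valid(self, person_id: str, public_key: str) -> bool:
        return self.active.get(person_id) == public_key


print(recovery_path(RecoveryState(False, False, False)))  # re-issuance
registry = Registry()
registry.issue_or_rotate("person-1", "pk-original")
registry.issue_or_rotate("person-1", "pk-rotated")  # after the original was sold or stolen
print(registry.is_valid("person-1", "pk-original"))  # False
print(registry.is_valid("person-1", "pk-rotated"))   # True
```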

Two other properties add to the integrity of a PoP mechanism:

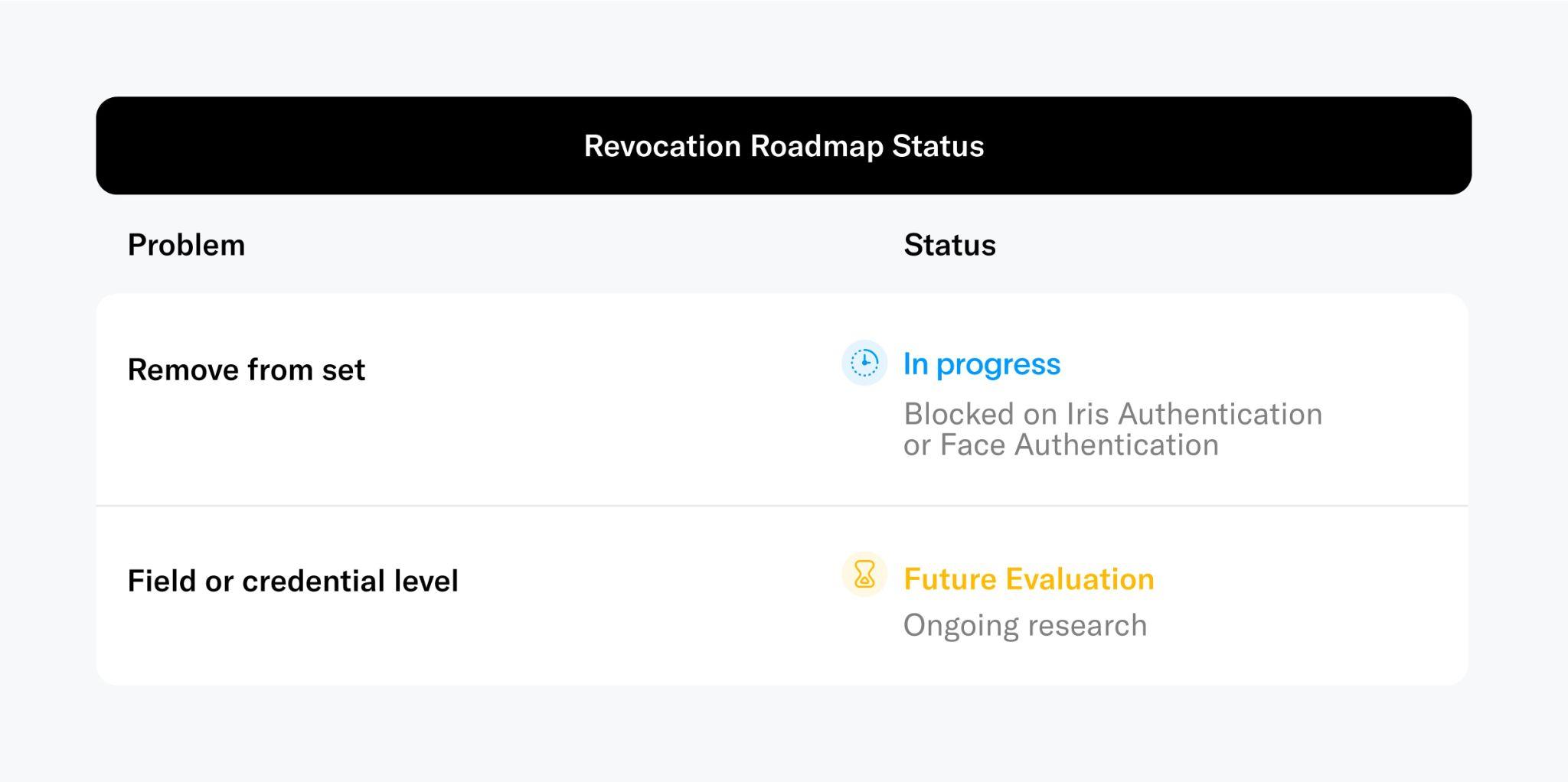

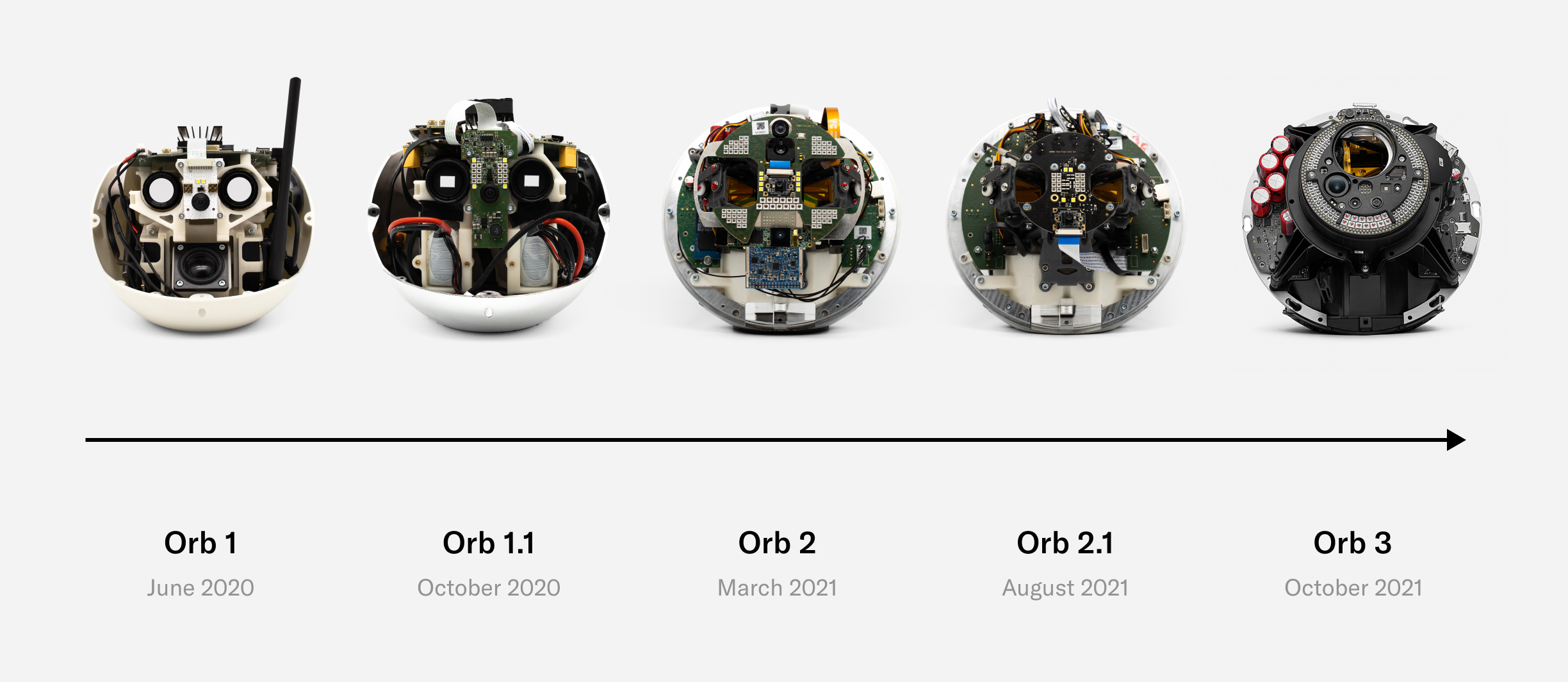

Revocation

While the hope is that all participants act with integrity, this cannot be assumed. In instances where an issuer is found to be compromised or malicious, the impact can be mitigated by issuers or developers removing affected PoP credentials from their list of accepted credentials. If the issuance of a credential is decentralized across multiple issuing locations and only a subset is affected, the respective subset could be revoked by the issuing authority itself. An example in terms of today's credentials could be a university granting a diploma to a person who hasn't met all the criteria. If the fraud is identified, the diploma is revoked.
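On the verifier side, revocation can be as simple as consulting a list of trusted issuers and revoked issuing locations before accepting a credential. This sketch assumes each credential records a hypothetical `issuer_id` and `location_id` (for example, a specific issuing device). A compromised subset can then be revoked without affecting other credentials from the same issuer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IssuedCredential:
    holder: str
    issuer_id: str    # hypothetical: which issuer vouched for the holder
    location_id: str  # hypothetical: which issuing location or device was used


def accepted(cred: IssuedCredential, trusted_issuers: set[str], revoked_locations: set[str]) -> bool:
    """Accept only credentials from trusted issuers and non-revoked locations."""
    return cred.issuer_id in trusted_issuers and cred.location_id not in revoked_locations


trusted = {"issuer-a"}
revoked = {"device-17"}  # only this compromised location is revoked
good = IssuedCredential("u1", "issuer-a", "device-03")
affected = IssuedCredential("u2", "issuer-a", "device-17")
print(accepted(good, trusted, revoked))      # True
print(accepted(affected, trusted, revoked))  # False
```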

Expiry

The efficacy of security mechanisms degrades over time, and new mechanisms are continuously being developed. As a result, many identity systems assign credentials a predefined expiry date at the point of issuance; passports are one example. Although expiry is not required for a PoP mechanism to work, including it can increase the PoP’s integrity.
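An expiry check reduces to comparing today's date with a date fixed at issuance. In this minimal sketch, the ten-year (3650-day) validity period is an arbitrary illustrative value, not one taken from any PoP system.

```python
from datetime import date, timedelta

VALIDITY = timedelta(days=3650)  # illustrative value only


def expires_on(issued: date) -> date:
    return issued + VALIDITY


def is_expired(issued: date, today: date) -> bool:
    """Expired once today's date is later than the expiry date fixed at issuance."""
    return today > expires_on(issued)


issued = date(2023, 7, 24)
print(is_expired(issued, date(2024, 1, 1)))  # False
print(is_expired(issued, date(2040, 1, 1)))  # True
```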

Together, these building blocks make up a functional proof of personhood mechanism. An example smartphone app is shown in the following figure.

Solving PoP at Scale

Based on these high level building blocks, several requirements can be deduced to evaluate different approaches to a global PoP mechanism:

- Inclusivity and scalability: A global PoP should be maximally inclusive, i.e. available to everyone. This means the mechanism should be able to distinguish between billions of people. There should be a feasible path to implementation at a global scale and people should be able to participate regardless of nationality, race, gender or economic means.

- Fraud Resistant: For a global proof of personhood, the important part is not “identification” (i.e. “is someone who they claim they are?”), but rather negative identification (i.e. “has this person registered before?”). This means that fraud prevention, in terms of preventing duplicate sign-ups, is critical. A significant amount of duplicates would severely restrict the design space of possible applications and make it impossible to treat all humans equally. This would have severe implications for use cases like a fair token distribution, democratic governance, reputation systems like credit scores, and welfare (including UBI).

- Personbound: Once a proof of personhood is issued, it should be personbound: it should be hard to sell or steal (i.e. transfer) and hard to lose. Note that if the PoP mechanism is designed properly, this wouldn’t prevent pseudonymity. This leads to the requirement that the PoP mechanism should allow for authentication in a way that makes it hard for fraudsters to impersonate the legitimate individual. Further, even if the individual lost all information, irrespective of any past actions, it should always be possible for them to recover.

Those cover the requirements that can be deduced from the required building blocks of a proof of personhood mechanism. However, there are further important requirements that can be deduced from the values inherent to the Worldcoin project:

- Decentralization: The issuance of a global PoP credential is foundational infrastructure that should not be controlled by a single entity to maximize resilience and integrity.

- Privacy: The PoP mechanism should preserve the privacy of individuals. Data shared by individuals should be minimized. Users should be in control of their data.

Mechanisms to Verify Uniqueness Among Billions

Based on the above requirements, this section compares different mechanisms to establish a global PoP mechanism in the context of the Worldcoin project.

Online accounts

The simplest attempt to establish PoP at scale involves using existing accounts such as email, phone numbers and social media. This method fails, however, because one person can have multiple accounts on each kind of platform. Further, accounts aren’t personbound, i.e. they can be easily transferred to others. Also, the (in)famous CAPTCHAs, which are commonly used to prevent bots, are ineffective here because any human can pass several of them. Even the most recent implementations[2], which basically rely on an internal reputation system, are limited.

In general, current methods for deduplicating existing online accounts (i.e. ensuring that individuals can only register once), such as account activity analysis, lack the necessary fraud resistance to withstand substantial incentives. This has been demonstrated by large-scale attacks targeting even well-established financial services operations.

Official ID verification (KYC)

Online services often request proof of ID (usually a passport or driver's license) to comply with Know your Customer (KYC) regulations. In theory, this could be used to deduplicate individuals globally, but it fails in practice for several reasons.

KYC services are simply not inclusive on a global scale; more than 50% of the global population does not have an ID that can be verified digitally. Further, it is hard to build KYC verification in a privacy-preserving way. When using KYC providers, sensitive data needs to be shared with them. This can be solved using zkKYC and NFC readable IDs. The relevant data can be read out by the user's phone and be locally verified as it is signed by the issuing authority. Proving unique humanness can be achieved by submitting a hash based on the information of the user’s ID without revealing any private information. The main drawback of this approach is that the prevalence of such NFC readable IDs is considerably lower than that of regular IDs.
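The hash-based approach can be illustrated as a deterministic digest over normalized document fields. The same document always yields the same digest, so a registry can detect repeat registrations without storing the fields themselves. The field choice and function names below are hypothetical. Two caveats apply. First, a plain hash of such low-entropy data can be brute-forced, which is why it would be combined with the zero-knowledge techniques mentioned above (not shown). Second, a person holding several different documents still produces several different digests.

```python
import hashlib


def document_digest(country: str, doc_number: str, birth_date: str) -> str:
    """Deterministic digest over normalized ID fields (hypothetical field choice)."""
    normalized = "|".join(s.strip().upper() for s in (country, doc_number, birth_date))
    return hashlib.sha256(normalized.encode()).hexdigest()


seen: set[str] = set()


def register(country: str, doc_number: str, birth_date: str) -> bool:
    """Accept a document only if its digest has not been seen before."""
    digest = document_digest(country, doc_number, birth_date)
    if digest in seen:
        return False
    seen.add(digest)
    return True


print(register("DE", "C01X00T47", "1990-01-01"))   # True
print(register("de", " C01X00T47", "1990-01-01"))  # False: same document after normalization
```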

Where NFC readable IDs are not available, ID verification can be prone to fraud—especially in emerging markets. IDs are issued by states and national governments, with no global system for verification or accountability. Many verification services (i.e. KYC providers) rely on data from credit bureaus that is accumulated over time, hence stale, without the means to verify its authenticity with the issuing authority (i.e. governments), as there are often no APIs available. Fake IDs, as well as real data to create them, are easily available on the black market. Additionally, due to their centralized nature, corruption at the level of the issuing and verification organizations cannot be eliminated.

Even if the authenticity of provided data can be verified, it is non-trivial to establish global uniqueness among different types of identity documents: fuzzy matching between documents of the same person is highly error-prone. This is due to changes in personal information (e.g. address), and the low entropy captured in personal information. A similar problem arises as people are issued new identity documents over time, with new document numbers and (possibly) personal information. Those challenges result in large error rates both falsely accepting and rejecting users. Ultimately, given the current infrastructure, there is no way to bootstrap global PoP via KYC verification due to a lack of inclusivity and fraud resistance.

Web of Trust

The underlying idea of a “web of trust” is to verify identity claims in a decentralized manner.

For example, in the classic web of trust employed by PGP, users meet for in-person “key signing parties” to attest (via identity documents) that keys are controlled by their purported owners. More recently, projects like Proof of Humanity are building webs of trust for Web3. These allow decentralized verification using face photos and video chat, avoiding the in-person requirement.

Because these systems heavily rely on individuals, however, they are susceptible to human error and vulnerable to sybil attacks. Requiring users to stake money can increase security. However, doing so increases friction as users are penalized for mistakes and therefore disincentivized to verify others. Further, this decreases inclusivity as not everyone might be willing or able to lock funds. There are also concerns related to privacy (e.g. publishing face images or videos) and susceptibility to fraud using e.g. deep fakes, which make these mechanisms fail to meet some of the design requirements mentioned above.

Social graph analysis

The idea of social graph analysis is to use information about the relationships between different people (or the lack thereof) to infer which users are real.

For example, one might infer from a relationship network that users with more than 5 friends are more likely to be real users. Of course, this is an oversimplified inference rule, and projects and concepts in this space, such as EigenTrust, Bright ID and soulbound tokens (SBTs) propose more sophisticated rules. Note that SBTs aren’t designed to be a proof of personhood mechanism but are complementary for applications where proving relationships rather than unique humanness is needed. However, they are sometimes mentioned in this context and are therefore relevant to discuss.
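The oversimplified “more than 5 friends” rule is easy to write down, and doing so shows why it is weak. A single person controlling a handful of accounts can make them befriend each other. This sketch uses a hypothetical adjacency map; EigenTrust, BrightID and similar projects use considerably more sophisticated analyses.

```python
def likely_real(graph: dict[str, set[str]], user: str, min_friends: int = 5) -> bool:
    """Naive rule from the text: more than `min_friends` connections means likely real."""
    return len(graph.get(user, set())) > min_friends


# Seven accounts controlled by one person, all befriending each other.
sybils = [f"bot{i}" for i in range(7)]
graph = {s: {t for t in sybils if t != s} for s in sybils}
print(all(likely_real(graph, s) for s in sybils))  # True: the rule is fooled
```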

Underlying all of these mechanisms is the observation that social relations constitute a unique human identifier if it is hard for a person to create another profile with sufficiently diverse relationships. If it is hard enough to create additional relationships, each user will only be able to maintain a single profile with rich social relations, which can serve as the user's PoP. One key challenge with this approach is that the required relationships are slow to build on a global scale, especially when relying on parties like employers and universities. It is a priori unclear how easy it is to convince institutions to participate, especially initially, when the value of these systems is still small. Further, it seems inevitable that in the near future AI (possibly assisted by humans acquiring multiple “real world” credentials for different accounts) will be able to build such profiles at scale. Ultimately, these approaches require giving up the notion of a unique human entirely, accepting the possibility that some people will be able to own multiple accounts that appear to the system as individual unique identities.

Therefore, while valuable for many applications, the social graph analysis approach also does not meet the fraud resistance requirement for PoP laid out above.

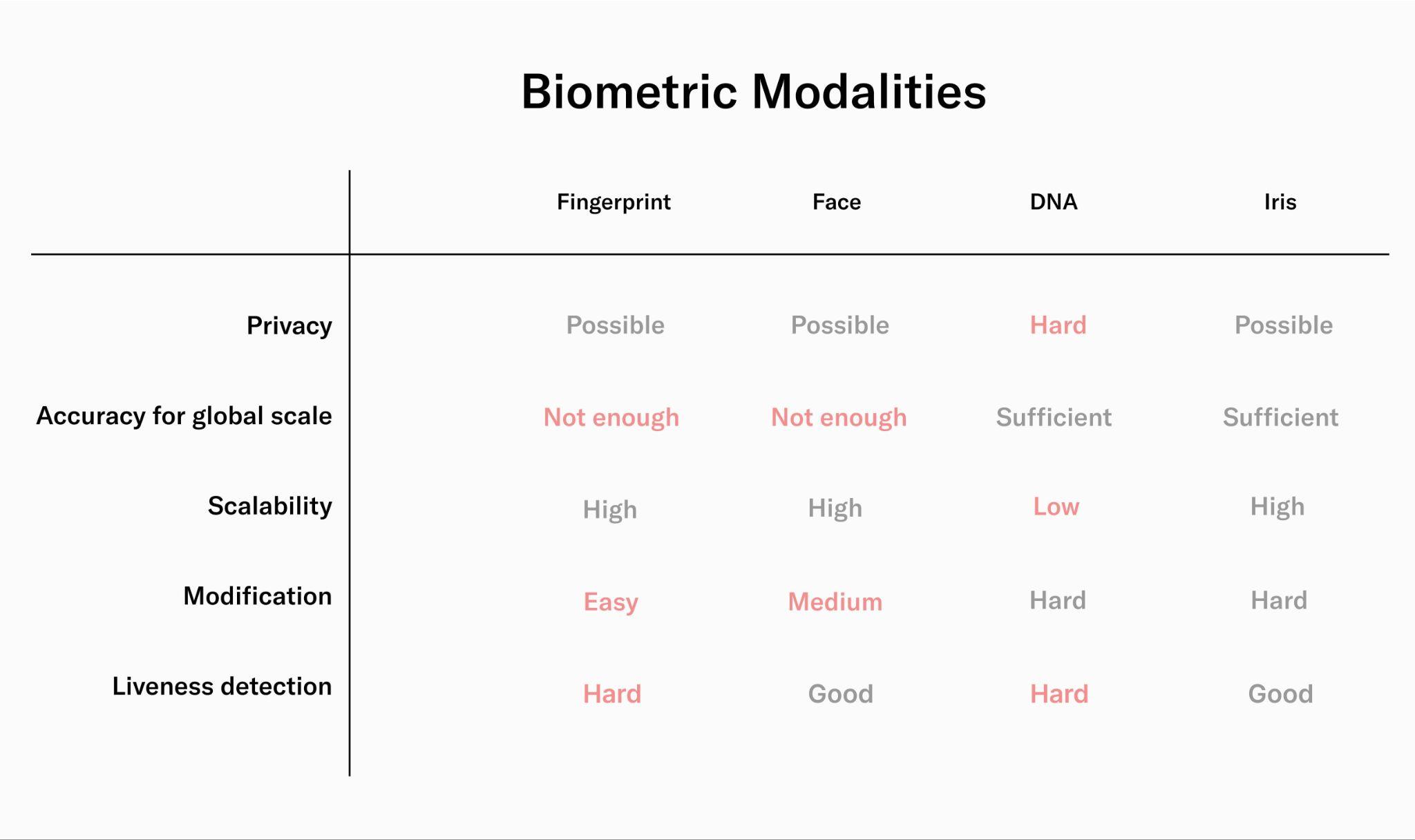

Biometrics

Each of the systems described above fails to effectively verify uniqueness on a global scale. The only mechanism that can differentiate people in non-trusted environments is their biometrics. Biometrics are the most fundamental means to verify both humanness and uniqueness. Most importantly, they are universal, enabling access irrespective of nationality, race, gender or economic means. Additionally, biometric systems can be highly privacy-preserving if implemented properly. Further, biometrics enable the previously mentioned building blocks by providing a recovery mechanism (that works even if someone has forgotten everything) and can be used for authentication. Therefore, biometrics also enable the PoP credential to be personbound.

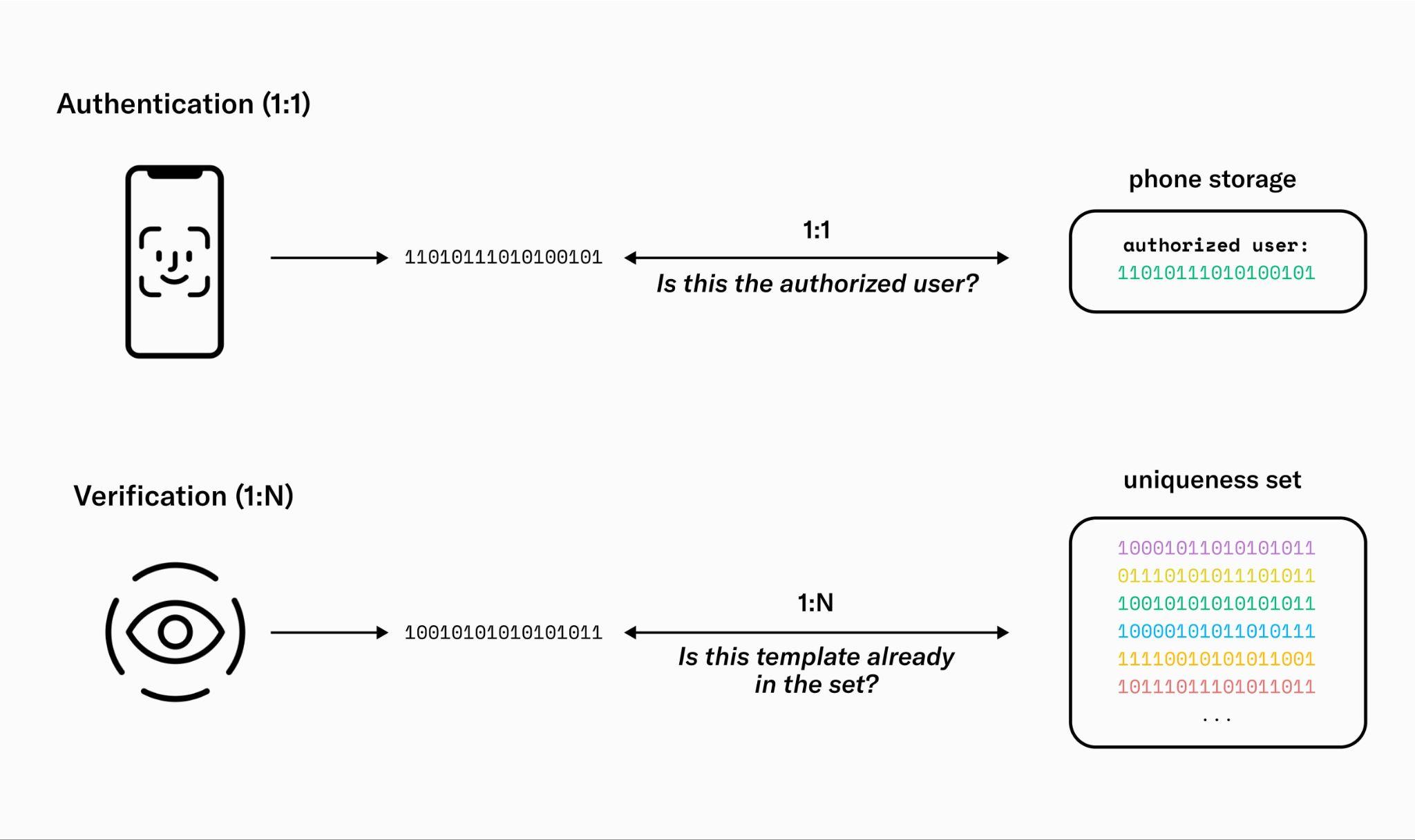

Different systems have different requirements. Authenticating a user via FaceID as the rightful owner of a phone is very different from verifying billions of people as unique. The main differences in requirements relate to accuracy and fraud resistance. With FaceID, biometrics are essentially being used as a password, with the phone performing a single 1:1 comparison against a saved identity template to determine if the user is who they claim to be. Establishing global uniqueness is much more difficult. The biometrics have to be compared against (eventually) billions of previously registered users in a 1:N comparison. If the system is not accurate enough, an increasing number of users will be incorrectly rejected.
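The difference between 1:1 and 1:N matching can be made concrete. The sketch below assumes templates are fixed-length bit strings compared by fractional Hamming distance, a common approach for iris codes. The 2048-bit length, the 0.32 threshold and the random templates are illustrative assumptions, not the Orb's actual parameters.

```python
import random


def hamming_fraction(a: int, b: int, bits: int) -> float:
    """Fraction of differing bits between two `bits`-long binary templates."""
    return bin(a ^ b).count("1") / bits


def verify_1to1(probe: int, enrolled: int, bits: int, threshold: float = 0.32) -> bool:
    """Face-ID-style authentication: a single comparison with the owner's template."""
    return hamming_fraction(probe, enrolled, bits) < threshold


def is_duplicate_1toN(probe: int, registry: list[int], bits: int, threshold: float = 0.32) -> bool:
    """Uniqueness check: one comparison per registered user, N in total."""
    return any(hamming_fraction(probe, t, bits) < threshold for t in registry)


BITS = 2048
rng = random.Random(0)
registry = [rng.getrandbits(BITS) for _ in range(1000)]

# Re-capture of a registered person: same template with 200 bits (~10%) flipped as noise.
noise = sum(1 << i for i in rng.sample(range(BITS), 200))
recapture = registry[0] ^ noise
print(verify_1to1(recapture, registry[0], BITS))     # True
print(is_duplicate_1toN(recapture, registry, BITS))  # True

# A genuinely new person: unrelated templates differ in about half their bits.
newcomer = rng.getrandbits(BITS)
print(is_duplicate_1toN(newcomer, registry, BITS))   # False
```

The 1:N check repeats the same comparison once per registered user. Any per-comparison error is therefore multiplied by the size of the registry.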

The error rates, and therefore the inclusivity of the system, are largely determined by the statistical characteristics of the biometric features being used. Iris biometrics outperform other biometric modalities and can achieve false match rates as low as 2.5 × 10⁻¹⁴ (one false match in 40 trillion). This is several orders of magnitude more accurate than the current state of the art in face recognition. Moreover, the structure of the iris exhibits remarkable stability over time.
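The effect of registry size can be quantified. Suppose each comparison falsely matches with probability p, and comparisons are treated as independent (a simplification). A genuinely new user compared against N registered users is then wrongly flagged as a duplicate with probability 1 − (1 − p)^N. Here is a quick calculation using the iris figure of one false match in 40 trillion:

```python
import math


def false_reject_probability(p: float, n: int) -> float:
    """P(at least one false match among n independent comparisons) = 1 - (1 - p)**n,
    computed with log1p/expm1 to stay accurate for tiny p."""
    return -math.expm1(n * math.log1p(-p))


p_iris = 1 / 40e12  # one false match in 40 trillion
for n in (10**6, 10**9, 8 * 10**9):
    print(f"N={n:.0e}: {false_reject_probability(p_iris, n):.2e}")
```

For N = 10⁹ the result is roughly N·p = 2.5 × 10⁻⁵, about one wrongly rejected new user in 40,000. With a per-comparison rate several orders of magnitude higher, the same formula approaches 1. This is the growing collision problem described for facial recognition.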

Furthermore, the iris is hard to modify. Fingerprints, by contrast, are easy to modify through cuts, and imaging them accurately can be difficult, as the ridges and valleys can wear off over time. Moreover, using all ten fingerprints for deduplication or combining different biometric modalities is vulnerable to combinatorial attacks (e.g. by combining fingerprints from different people). DNA sequencing could in theory provide high enough accuracy, but DNA reveals a lot of additional private information about the user (at least to the party that runs the sequencing). Additionally, DNA sequencing is hard to scale from a cost perspective, and implementing reliable liveness detection for it is difficult. Facial biometrics offer significantly better liveness detection than DNA sequencing. However, compared to iris biometrics, the accuracy of facial recognition is much lower. This would result in a growing number of erroneous collisions as the number of registered users increases. Even under optimal conditions, at a global scale of billions of people, over ten percent of legitimate new users would be rejected, compromising the inclusivity of the system.

Therefore, based on the outlined trade-offs of different biometric modalities, iris recognition is the only one which is suitable for global verification of uniqueness in the context of the Worldcoin project.

World ID: Implementing PoP at Scale

Based on the conclusion that the only path to verify uniqueness on a global scale is iris biometrics, Tools for Humanity built a custom biometric device, called the Orb. This device issues an AI-safe[3] PoP credential called World ID. The Orb is built from the ground up to verify humanness and uniqueness in a fair and inclusive manner.

The issuance of World ID is privacy-preserving, as the humanness check happens locally and no images need to be saved (or uploaded) by the issuer. Using World ID reveals minimal information about the individual, as the protocol employs zero-knowledge proofs. The vision for the device is for its development, production and operation to be decentralized over time such that no single entity will be in control of World ID issuance.
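A user can prove they belong to the set of verified identities while revealing minimal information. One common way to achieve this is to publish a Merkle root over registered identity commitments. A zero-knowledge proof can then show knowledge of a leaf and its path without revealing which leaf it is. This section does not describe World ID's construction, so treat this as an assumption. The sketch checks the Merkle path in the clear, without any zero knowledge, only to show the statement such a proof would establish. All names are hypothetical.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _pad(level: list[bytes]) -> list[bytes]:
    """Duplicate the last node when a level has an odd number of nodes."""
    return level + [level[-1]] if len(level) % 2 else level


def _parents(level: list[bytes]) -> list[bytes]:
    return [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(leaves: list[bytes]) -> bytes:
    if not leaves:
        raise ValueError("need at least one leaf")
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        level = _parents(_pad(level))
    return level[0]


def merkle_path(leaves: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the flag is True when the sibling sits on the right."""
    level = [h(leaf) for leaf in leaves]
    path = []
    while len(level) > 1:
        level = _pad(level)
        sibling = index ^ 1
        path.append((level[sibling], sibling > index))
        level = _parents(level)
        index //= 2
    return path


def verify_membership(leaf: bytes, path: list[tuple[bytes, bool]], root: bytes) -> bool:
    """Recompute the root from a leaf and its path (no zero knowledge here)."""
    node = h(leaf)
    for sibling, sibling_on_right in path:
        node = h(node + sibling) if sibling_on_right else h(sibling + node)
    return node == root


commitments = [b"identity-commitment-%d" % i for i in range(5)]
root = merkle_root(commitments)
path = merkle_path(commitments, 3)
print(verify_membership(commitments[3], path, root))     # True
print(verify_membership(b"not-registered", path, root))  # False
```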

The following section explains the previously mentioned building blocks for an effective proof of personhood mechanism:

- Deduplication

- Authentication

- Recovery

- Revocation

- Expiry

and how they are implemented in the context of World ID.

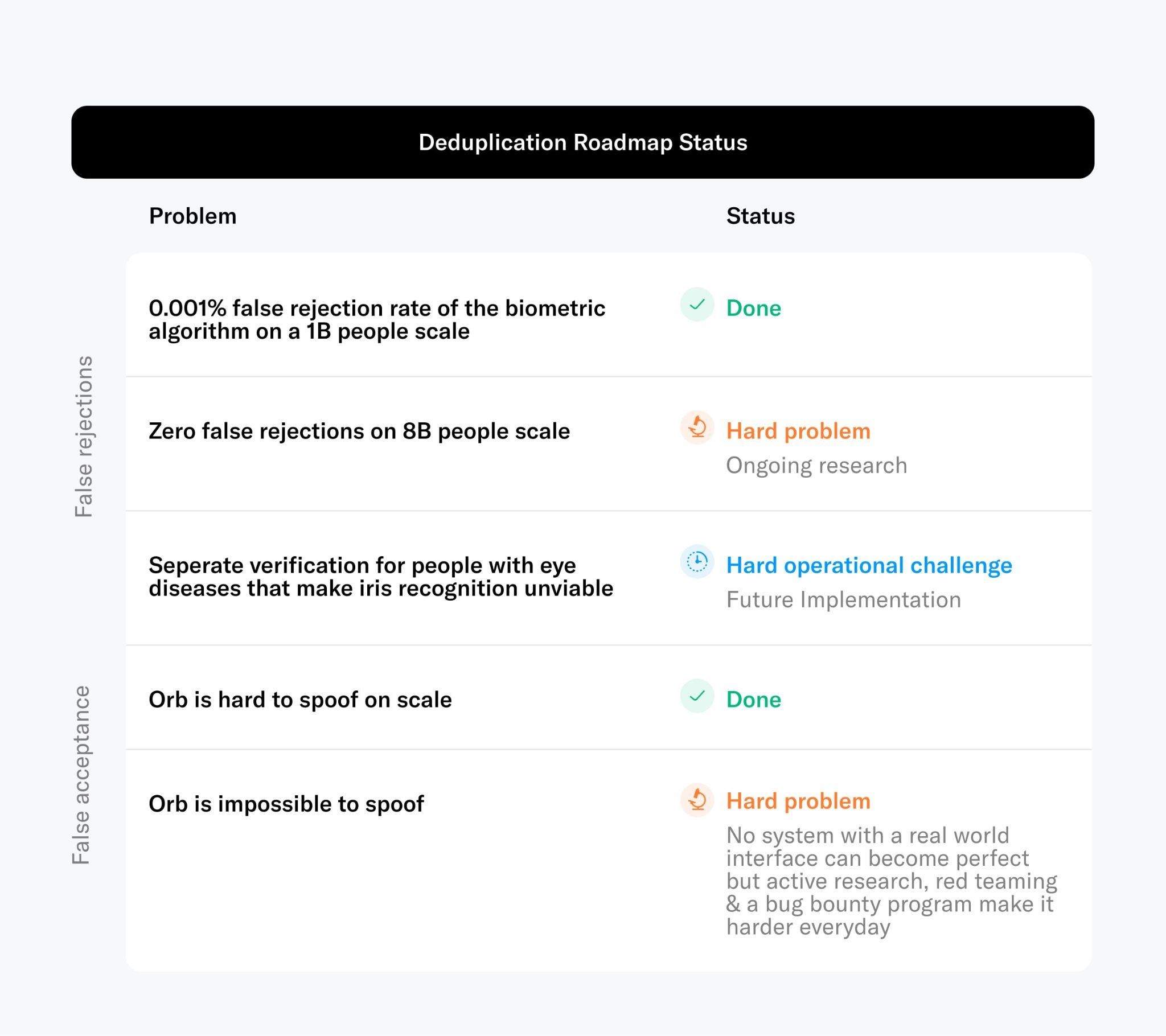

Deduplication

The hardest part for an inclusive yet highly secure PoP mechanism is to make sure every user can receive exactly one proof of personhood. Based on the previous evaluation, iris biometrics are the best means to accurately verify uniqueness on a global scale (see limitations).

The other potential error inherent to biometric algorithms is the false acceptance of a user. The false acceptance rate largely depends on the system's capacity to detect presentation attacks, which are attempts to deceive or spoof the verification process. While no biometric system is entirely impervious to such attacks, the important metric is the effort required for a successful attack. This consideration was fundamental to the conception of the Orb.

Developing the Orb was not a decision taken lightly; it was a high-cost endeavor. However, from first principles, it was required to build the most inclusive yet secure verification of humanness and uniqueness. The Orb is designed to verify uniqueness with high accuracy, even in hostile contexts where the presence of malicious actors cannot be excluded. To accomplish this, the Orb is equipped with every viable camera sensor spanning the electromagnetic spectrum, complemented by suitable multispectral illumination. This enables the device to differentiate between spoofing attempts and legitimate human interactions with a high degree of accuracy. The Orb is further equipped with a powerful computing unit to run several neural networks concurrently in real time. These algorithms operate locally on the Orb to validate humanness while safeguarding user privacy. While no hardware system interacting with the physical world can achieve perfect security, the Orb is designed to set a high bar, particularly in defending against scalable attacks. The anti-fraud measures integrated into the Orb are constantly refined.
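The idea of several neural networks jointly validating humanness can be sketched as an all-must-pass decision, so an attacker has to defeat every check at once. The check names, scores and thresholds below are purely hypothetical; the text does not describe how the Orb combines its models.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Check:
    name: str
    threshold: float  # minimum score required for this check to pass


# Hypothetical checks; in practice each score would come from an on-device model.
CHECKS = [
    Check("liveness", 0.90),
    Check("spoof_detection", 0.95),
    Check("image_quality", 0.80),
]


def accept_as_human(scores: dict[str, float]) -> tuple[bool, list[str]]:
    """Accept only if every check passes; a missing score counts as a failure."""
    failed = [c.name for c in CHECKS if scores.get(c.name, 0.0) < c.threshold]
    return (not failed, failed)


print(accept_as_human({"liveness": 0.97, "spoof_detection": 0.99, "image_quality": 0.85}))
# (True, [])
print(accept_as_human({"liveness": 0.97, "spoof_detection": 0.40, "image_quality": 0.85}))
# (False, ['spoof_detection'])
```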

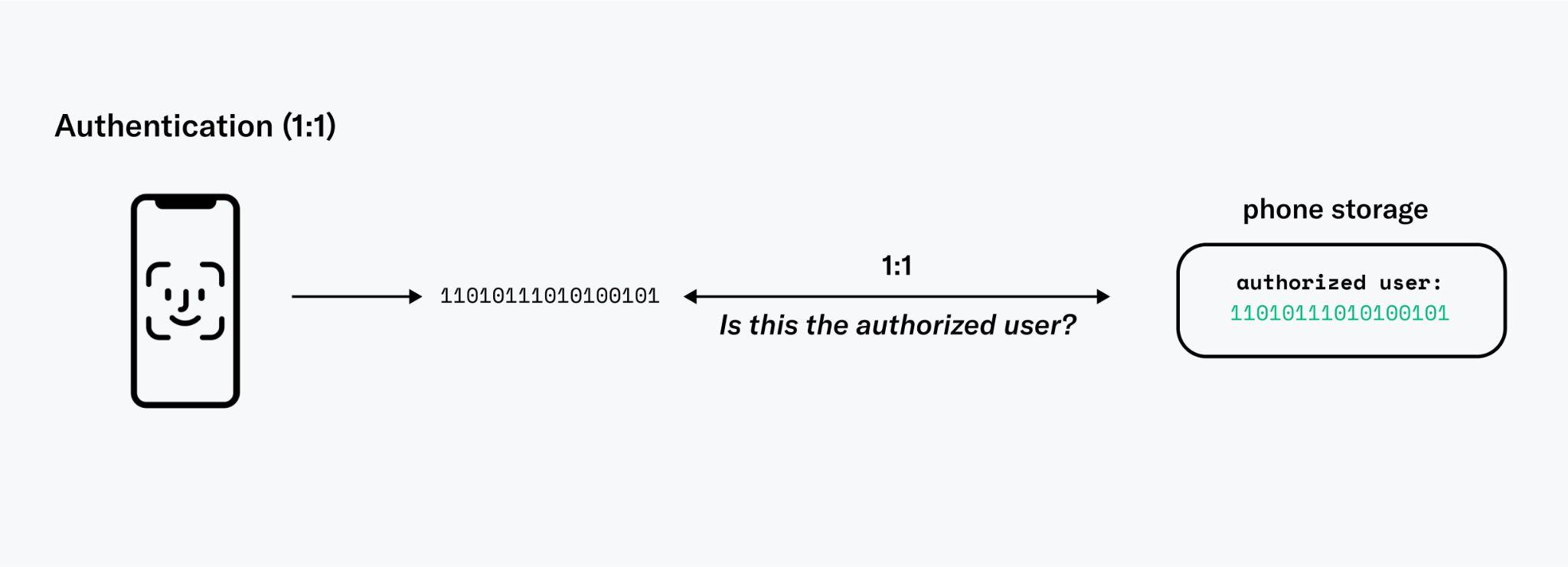

Authentication

Authentication seeks to ensure that only the legitimate owner of a World ID issued by the Orb can authenticate with it, which goes beyond merely proving possession of the keys. This plays a critical role in preventing World IDs from being sold or stolen. Within the scope of World ID, there are two primary mechanisms available. Selecting the appropriate mechanism is up to the verifier, as each offers a different degree of assurance and friction.

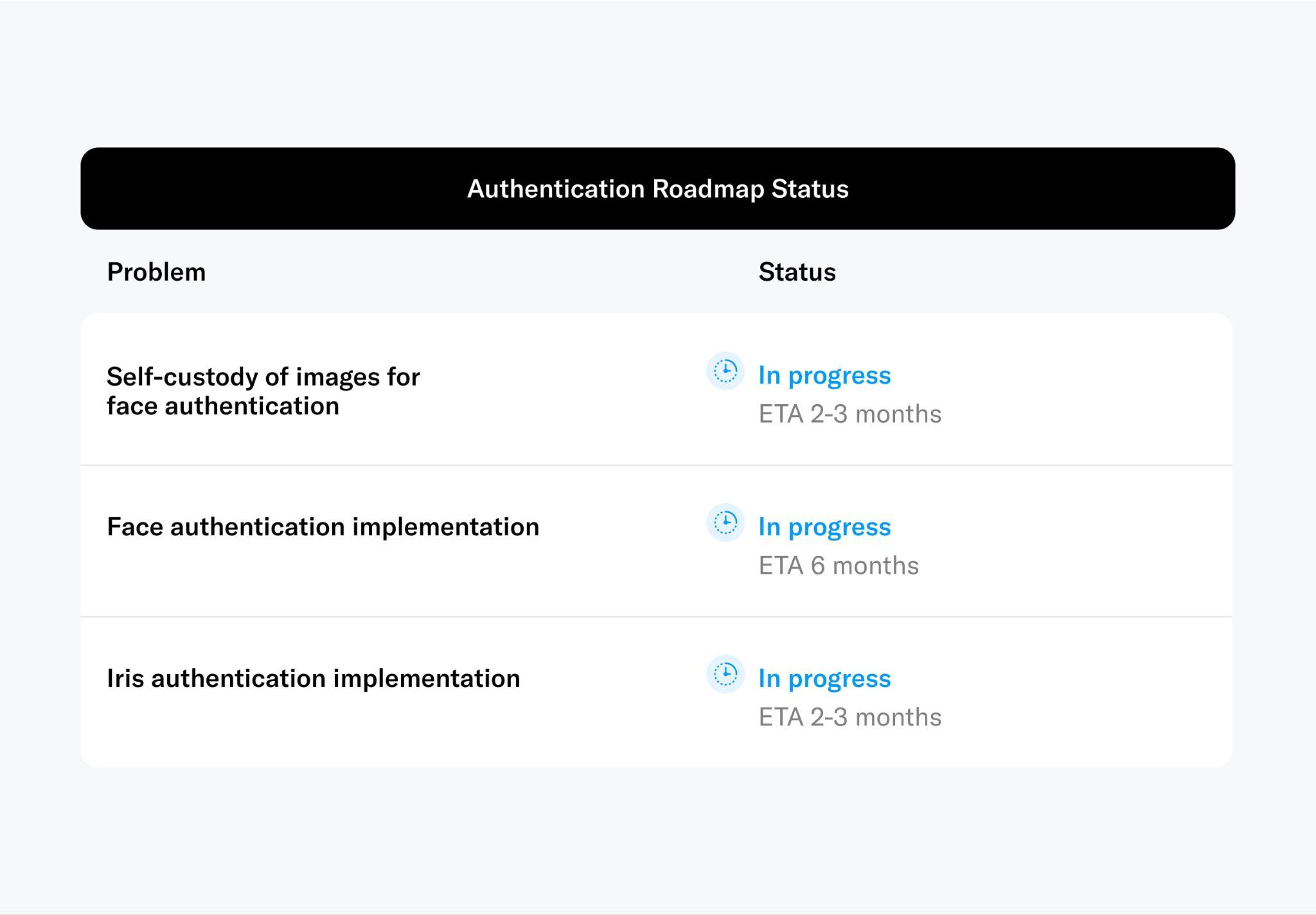

Face Authentication

Face-based authentication is similar to Apple's Face ID. Authentication involves a 1:1 comparison with a pre-existing template stored on the user's phone, which requires considerably lower accuracy than the 1:N global verification of uniqueness⁴ that the Orb performs. Therefore, the entropy inherent to facial features is sufficient. To enable this feature, an embedding of the user's face, signed by the Orb, would need to be end-to-end encrypted and transmitted to the World ID wallet on the user's mobile device. Subsequently, facial recognition performed locally on the user's device, in a fashion similar to Face ID, could be used to authenticate users, ensuring that only the person to whom the World ID was originally issued can use it for authentication.
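Why 1:1 authentication is so much less demanding than 1:N deduplication can be made concrete with a short calculation. This is a minimal sketch assuming that each pairwise comparison independently produces a false match with probability `FMR` (a simplification, since real comparisons are not fully independent). The chance of at least one false match when a new user is checked against N enrolled templates is then 1 - (1 - FMR)^N. The rate used below is a hypothetical placeholder, not a measured value for faces or irises.

```python
import math


def prob_any_false_match(fmr: float, n_comparisons: int) -> float:
    """Probability of at least one false match across n independent comparisons.

    Computes 1 - (1 - fmr)**n via log1p/expm1, which stays accurate when
    fmr is tiny and n is very large.
    """
    return -math.expm1(n_comparisons * math.log1p(-fmr))


# Hypothetical per-comparison false match rate, for illustration only.
FMR = 1e-6

one_to_one = prob_any_false_match(FMR, 1)              # face-unlock style 1:1 check
one_to_n = prob_any_false_match(FMR, 1_000_000_000)    # dedup against 1e9 enrolled users

print(f"1:1 false match probability: {one_to_one:.2e}")
print(f"1:N false match probability (N = 1e9): {one_to_n:.6f}")
```

With these placeholder numbers, a rate that is negligible for a single comparison makes at least one false match practically certain at N = 10^9. This is why features that are adequate for a 1:1 check on a phone are not adequate for global deduplication.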

This mechanism facilitates the extension of the secure hardware guarantees from the Orb to the user's mobile device. However, given that the user's device is not intrinsically trusted, there is no absolute assurance that the appropriate code is being executed nor that the camera input can be trusted. To increase security, ongoing research is investigating Zero Knowledge Machine Learning (ZKML) on mobile devices. Nevertheless, in the absence of custom hardware, this approach cannot provide the same security guarantees as the Orb. Therefore, face authentication on the user's device should be reserved for applications with lower stakes.

While this feature is not yet implemented, it is expected to be released later this year. The first implementation step is for the Orb to send an end-to-end encrypted face embedding to the user's phone, where it can later be compared against a selfie. Because self-custody of face images is a prerequisite for face authentication, it determines who will later be able to use face authentication. This is why the feature has a high priority on the roadmap.

Iris Authentication

This is conceptually similar to face authentication with the difference that a user needs to return to an Orb, presenting a specific QR code generated by the user’s World ID wallet. This process validates the individual as the rightful owner of their World ID. Using iris authentication through the Orb increases security.

This authentication mechanism can be compared with, for example, physically showing up to a bank or notary to authenticate certain transactions. Although inconvenient, and therefore rarely required, it provides increased security guarantees. This feature is under active development and is expected to be released in the coming months.
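Like face authentication, iris authentication is a 1:1 check: the fresh capture only has to match the template tied to the World ID presented in the QR code. The sketch below assumes Daugman-style binary iris codes with occlusion masks (the Gabor-based encoding discussed later in this document) compared by a masked fractional Hamming distance. The function names, the toy 16-bit code length, and the 0.32 threshold are hypothetical choices for illustration, not the Orb's actual parameters.

```python
def fractional_hamming_distance(code_a: int, mask_a: int,
                                code_b: int, mask_b: int) -> float:
    """Fraction of disagreeing bits, counting only bits valid in both masks.

    Codes and masks are packed into Python ints. A mask bit of 1 marks a usable
    code bit (e.g. not occluded by eyelids or reflections).
    """
    valid = mask_a & mask_b
    n_valid = bin(valid).count("1")
    if n_valid == 0:
        raise ValueError("no overlapping valid bits to compare")
    disagreements = bin((code_a ^ code_b) & valid).count("1")
    return disagreements / n_valid


def authenticate(fresh_code: int, fresh_mask: int,
                 enrolled_code: int, enrolled_mask: int,
                 threshold: float = 0.32) -> bool:
    """Hypothetical 1:1 decision: accept if the distance falls below the threshold."""
    distance = fractional_hamming_distance(fresh_code, fresh_mask,
                                           enrolled_code, enrolled_mask)
    return distance < threshold


if __name__ == "__main__":
    full_mask = 0xFFFF                          # toy 16-bit codes, nothing occluded
    enrolled = 0b1010_1100_1111_0000
    fresh = enrolled ^ 0b0000_0000_0000_0011    # two bits differ: distance 2/16
    print(authenticate(fresh, full_mask, enrolled, full_mask))  # True
```

Only bits valid in both captures are counted, so an eyelid covering part of the iris reduces the evidence rather than inflating the distance.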

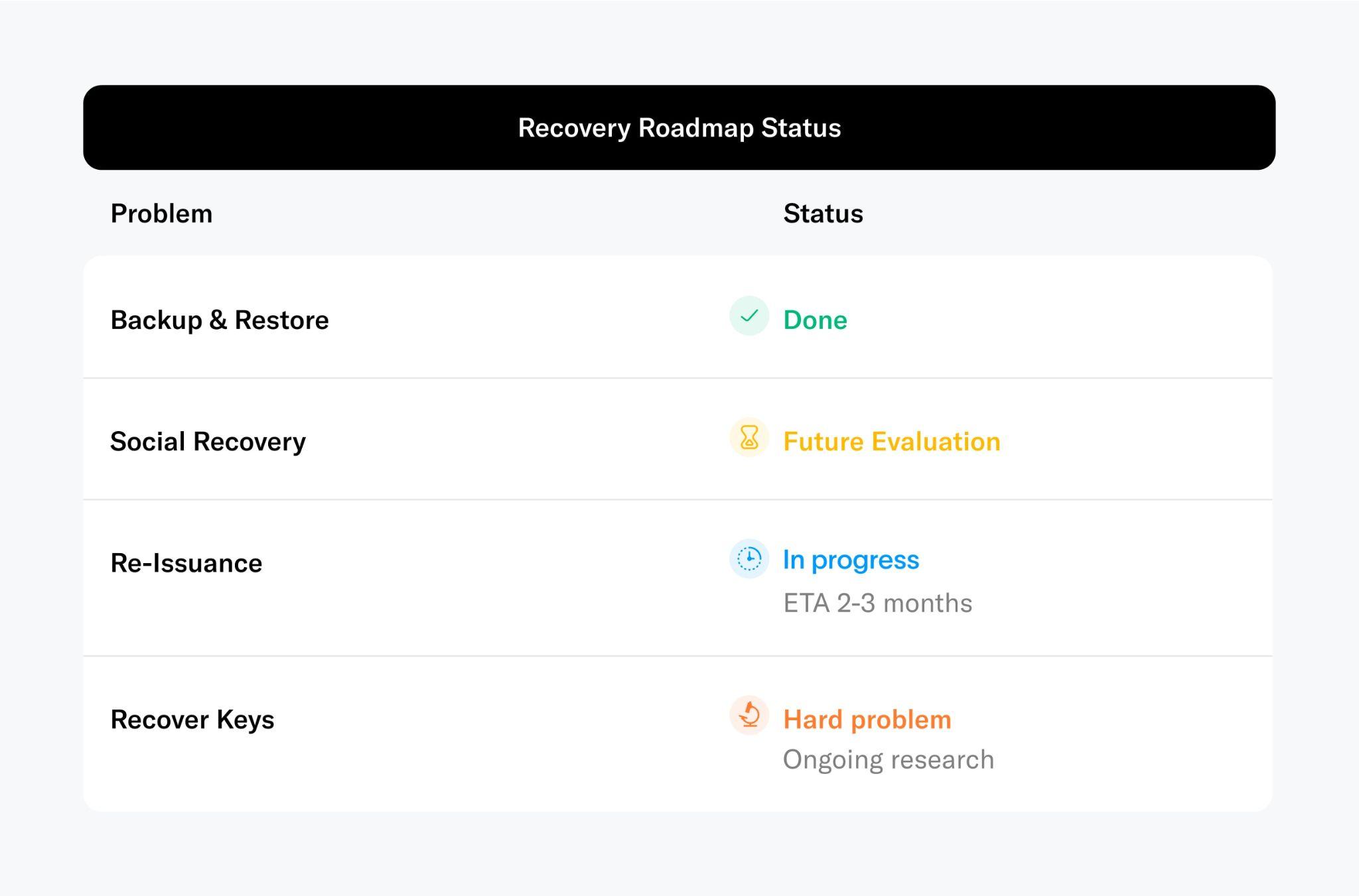

Recovery

The simplest way to restore a World ID is via a backup. Social recovery is not implemented today but is likely to be explored in the future. The most important recovery mechanism for Orb-based proof of personhood is reissuance: if users have lost access, or their World ID has been compromised by a fraudulent actor, they can get their World ID reissued by returning to an Orb, without needing to remember a password or similar information.

It is critical to understand, however, that the recovery facilitated by biometrics exclusively refers to the World ID. Neither other credentials held by the user's wallet nor the wallet itself can be recovered, due to security considerations.

The initial implementation is planned to be realized through key rotation, which will be released soon. Notably, use cases that require long-lasting nullifiers⁵, such as reputation or single-claim rewards, will be limited because recovery can reset the nullifier. This is also discussed in the limitations section. However, this limitation does not affect the 'humanness' attestation, for instance the continuous verification of an account through sessions, or time-bounded votes that only admit participants whose latest recovery preceded the beginning of the voting period. Enabling key recovery requires solving hard research challenges to preserve privacy.
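The trade-off between recovery and long-lasting nullifiers can be illustrated with a toy model. This is a minimal sketch assuming, hypothetically, that a nullifier is obtained by hashing an identity secret together with an application and action identifier; SHA-256 stands in for the zero-knowledge construction World ID actually uses, and every name below is illustrative. Rotating the secret during recovery yields a fresh, unlinkable nullifier, while a time-bounded vote sidesteps the problem by admitting only identities whose latest recovery predates the voting period.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime
from typing import Set


def nullifier(identity_secret: bytes, app_id: str, action: str) -> str:
    """Toy per-application nullifier (SHA-256 stand-in, not World ID's ZK scheme)."""
    h = hashlib.sha256()
    for part in (identity_secret, app_id.encode(), action.encode()):
        # Length-prefix each field so different splits of the input cannot collide.
        h.update(len(part).to_bytes(4, "big"))
        h.update(part)
    return h.hexdigest()


@dataclass
class Ballot:
    nullifier_hash: str
    last_recovery: datetime  # hypothetical attested time of the latest recovery


class TimeBoundedVote:
    """One ballot per nullifier, only from identities not recovered since the start."""

    def __init__(self, voting_start: datetime) -> None:
        self.voting_start = voting_start
        self.seen: Set[str] = set()

    def cast(self, ballot: Ballot) -> bool:
        if ballot.last_recovery >= self.voting_start:
            return False  # recovered during the vote: nullifier may have been reset
        if ballot.nullifier_hash in self.seen:
            return False  # same identity trying to vote twice
        self.seen.add(ballot.nullifier_hash)
        return True
```

Rotating the identity secret, as recovery via key rotation would, produces an unrelated nullifier for the same app and action. A single-claim reward keyed only on nullifiers could therefore be claimed again after recovery, whereas the voting rule above stays sound.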

Revocation

In the event of a compromised Orb, malicious actors could theoretically generate counterfeit World IDs⁶. If the community determines that an issuer is acting inappropriately or that a device is compromised, the Worldcoin Foundation, in alignment with the prevailing governance structure, can "deny list" World IDs linked to that issuer or device for its own purposes, while other application developers can implement their own measures. Users who are inadvertently affected can simply get their World ID reissued by any other Orb. More details on the mechanism can be found in the decentralization section.
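A verifier-side deny list keyed on the issuing device could look like the following sketch. The credential fields and device identifiers are hypothetical; the point is only that a verifier rejects World IDs issued by a deny-listed Orb while accepting a World ID that was reissued by a different device.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Credential:
    holder_id: str       # hypothetical public identifier of a World ID
    issuer_device: str   # hypothetical identifier of the issuing Orb


class DenyListVerifier:
    """Per-verifier policy: reject credentials issued by deny-listed devices."""

    def __init__(self, denied_devices: Iterable[str] = ()) -> None:
        self.denied_devices = set(denied_devices)

    def deny(self, device_id: str) -> None:
        """Record a device judged compromised (e.g. after a governance decision)."""
        self.denied_devices.add(device_id)

    def accepts(self, credential: Credential) -> bool:
        return credential.issuer_device not in self.denied_devices


verifier = DenyListVerifier()
verifier.deny("orb-compromised")
print(verifier.accepts(Credential("user-1", "orb-compromised")))  # False
print(verifier.accepts(Credential("user-1", "orb-other")))        # True: reissued elsewhere
```

Because each verifier holds its own list, the Foundation and individual application developers can apply different policies, as described above.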

Expiry

Even in the absence of tangible fraudulent activity, the community could retrospectively identify a device as vulnerable, or simply as having outdated security standards. In such instances, in line with the governing principles of the Foundation, World IDs can be subjected to a set expiry. This essentially amounts to a revocation process, but with a predefined grace period, such as one year, that affords individuals ample time to re-verify. Further, in accordance with its governance, the Foundation could eventually decide to expire verifications after a set period of time to further strengthen the integrity of the PoP mechanism in the interest of all participants.
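Expiry can be modeled as revocation with a deadline. In this minimal sketch, a hypothetical per-device table maps an Orb to the date after which its verifications stop being accepted, and an optional blanket lifetime models the Foundation expiring all verifications after a set period. The one-year grace period mirrors the example in the text; all names are illustrative.

```python
from datetime import date, timedelta
from typing import Dict, Optional

GRACE_PERIOD = timedelta(days=365)  # "such as one year", per the text


def expiry_for(flagged_on: date, grace: timedelta = GRACE_PERIOD) -> date:
    """Date after which World IDs from a flagged device are no longer accepted."""
    return flagged_on + grace


def is_valid(issuer_device: str, today: date,
             expiries: Dict[str, date],
             max_age: Optional[timedelta] = None,
             issued_on: Optional[date] = None) -> bool:
    """Check a device-level expiry and, optionally, a global verification lifetime."""
    deadline = expiries.get(issuer_device)
    if deadline is not None and today > deadline:
        return False  # device-level expiry has passed: the user must re-verify
    if max_age is not None and issued_on is not None and today - issued_on > max_age:
        return False  # blanket expiry after a set period
    return True
```

Unlike an immediate deny list, users of a flagged device keep a working World ID until the deadline, giving them time to visit another Orb.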

Further Research

Despite the defensive measures outlined in this section, which significantly raise the threshold for fraudulent activity and can likely limit its impact beyond any existing scalable proof of personhood mechanism, it is important to recognize that they cannot completely protect against all threats, such as collusion or other attempts to circumvent the one-person-one-proof principle (e.g. bribing others to vote a particular way). To raise the bar further, innovative ideas and research in mechanism design will be necessary.

Footnotes

1. Possibly except for the validity date

2. In recent implementations virtually all major providers switched from “labeling traffic lights” to the so-called silent CAPTCHAs (e.g. reCaptcha v3)

3. In this context, AI-safe refers to a process that’s hard for AI models. It’s assumed, for example, that spoofing the Orb is significantly harder for AI than performing a CAPTCHA.

4. Where N is the total number of previously verified users

5. In the context of World ID, each holder has a unique nullifier for themselves in each application. This nullifier is what enables sybil resistance while preserving privacy as verifiers can use such nullifiers to prevent multiple registrations.

6. The Orb's secure computing environment was designed to make such compromises extremely difficult

Technical Implementation

The preceding sections explained the need for a universal, secure, and inclusive proof of personhood mechanism. They also discussed why iris biometrics appears to be the only feasible path for such a PoP mechanism, and explained its realization via the Orb and World ID at a high level. The following section dives deeper into the specifics of the architectural design and implementation of both the Orb and World ID.

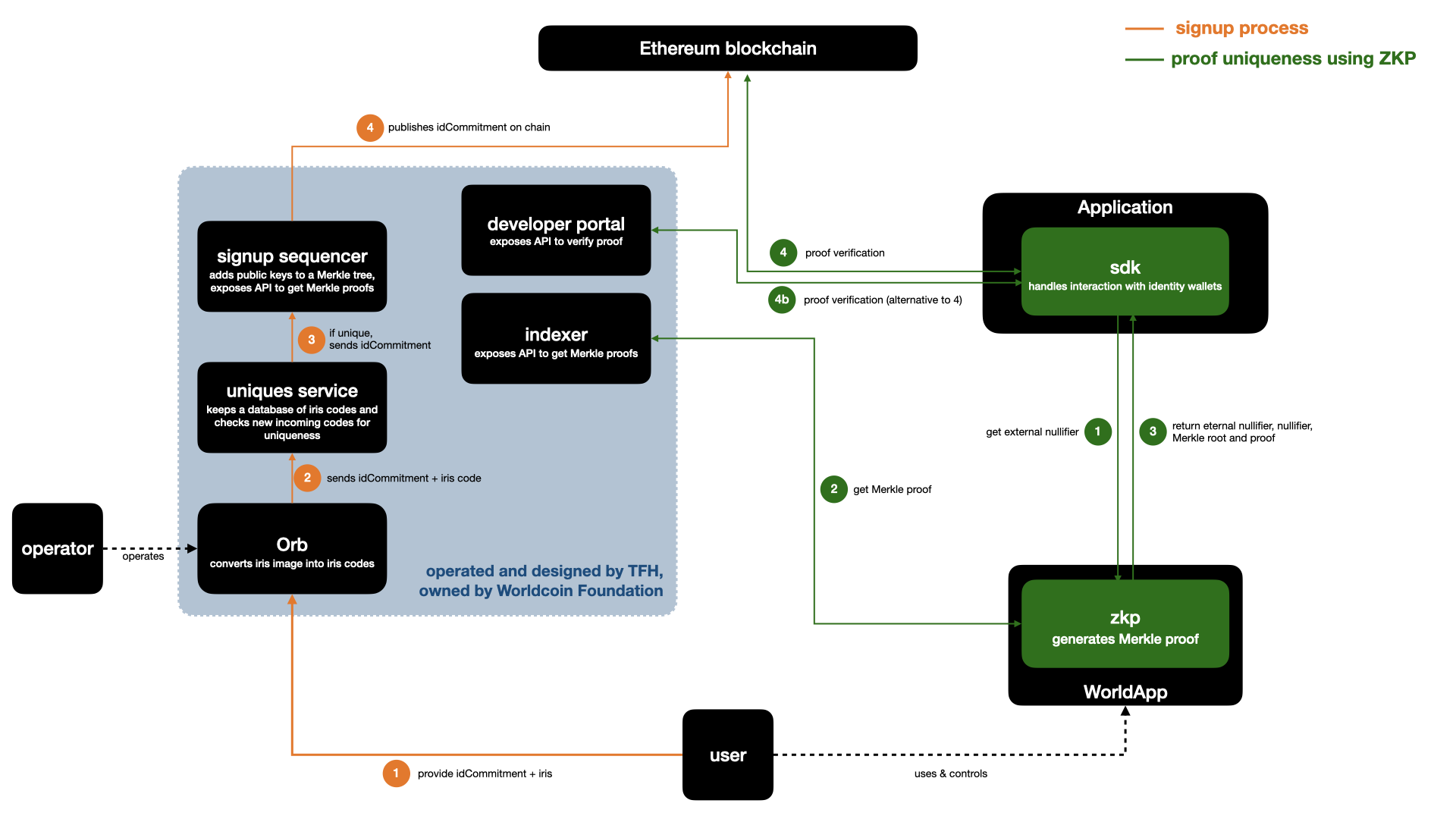

Architecture Overview

To get a World ID, an individual begins by downloading the World App. The app stores their World ID and enables them to use it across multiple platforms and services. The World App is user-friendly, particularly geared towards crypto beginners, and offers simple financial features based on decentralized finance: allowing users to on- and off-ramp, subject to the availability of providers, swap tokens through a decentralized exchange, and connect with dApps through WalletConnect. Importantly, the system allows other developers to create their own clients without seeking permission, meaning there can be various apps supporting World ID.

Once verified through the Orb, individuals are issued a World ID, a privacy-preserving proof-of-personhood credential. Through World ID, they can claim a set amount of WLD periodically (Worldcoin Grants), where laws allow. World ID can also be used to authenticate as human with other services (e.g., prevent user manipulation in the case of voting). In the future, other credentials can be issued on the Worldcoin Protocol as well.

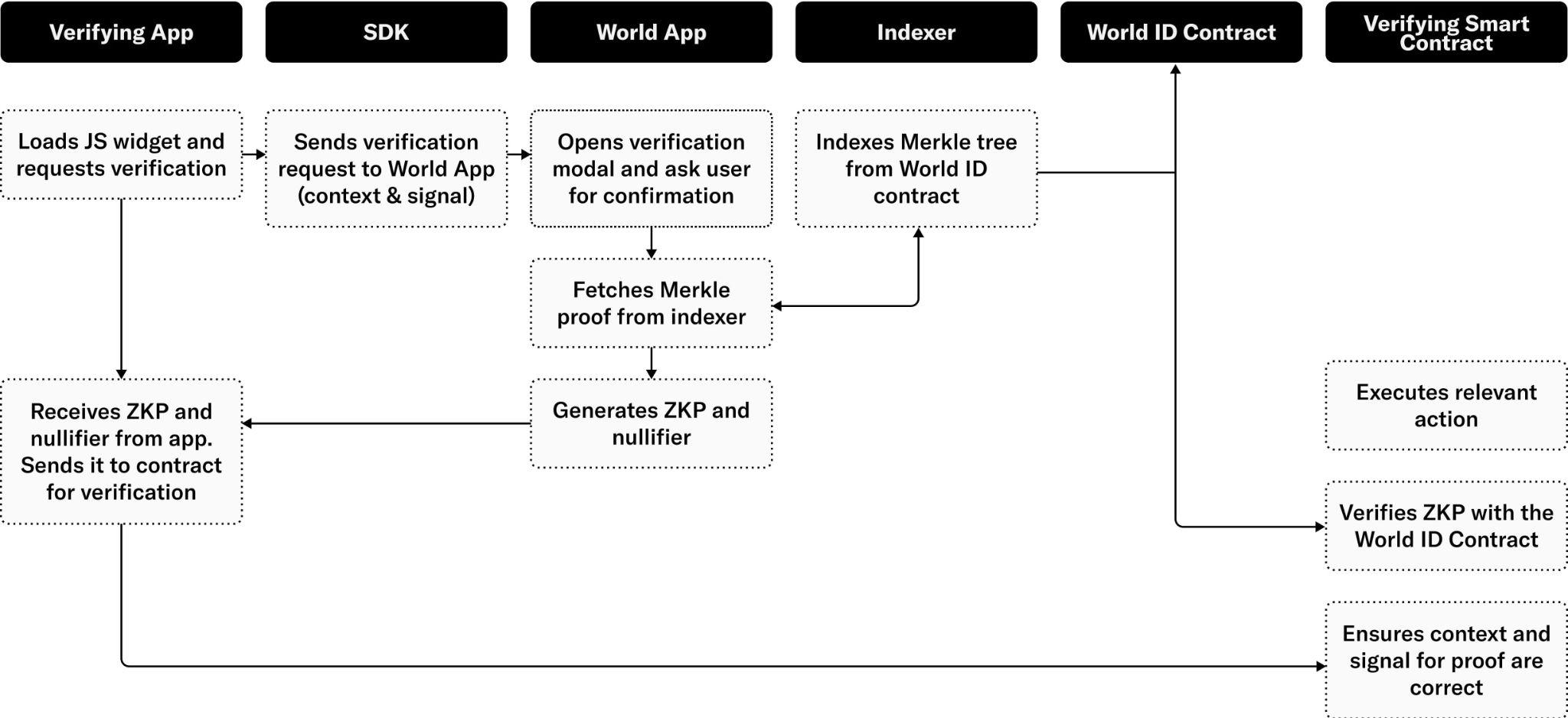

To make World ID and the Worldcoin Protocol easy to use, an open source World ID Software Development Kit (SDK) is available to simplify interactions for both Web3 and Web2 applications. The SDK is the set of tools, libraries, APIs, and documentation that accompanies the Protocol, and developers can use it to leverage World ID in their applications. It makes web, mobile, and on-chain integrations fast and simple, and includes tools like a web widget (JS), a developer portal, a development simulator, examples, and guides.

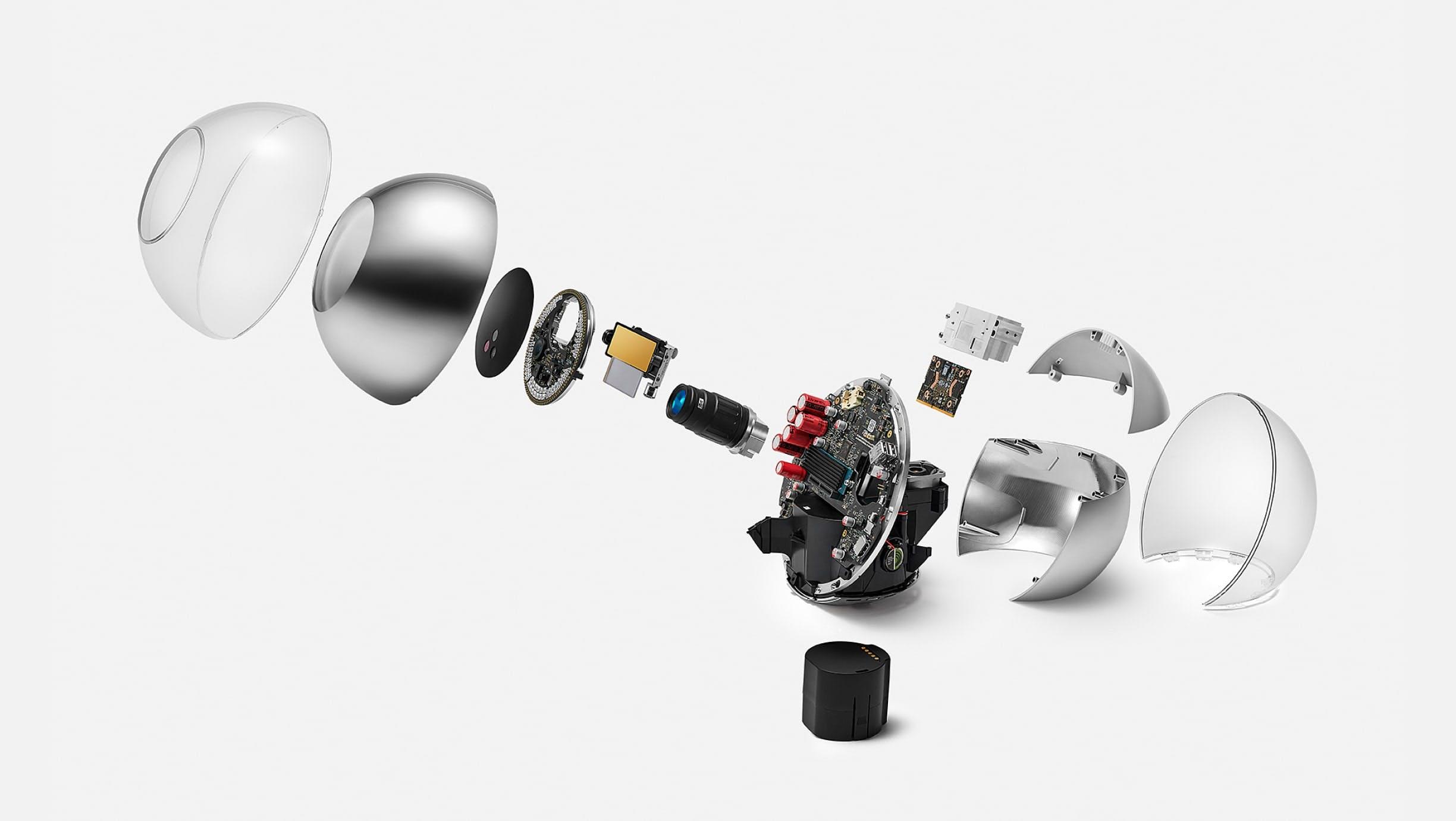

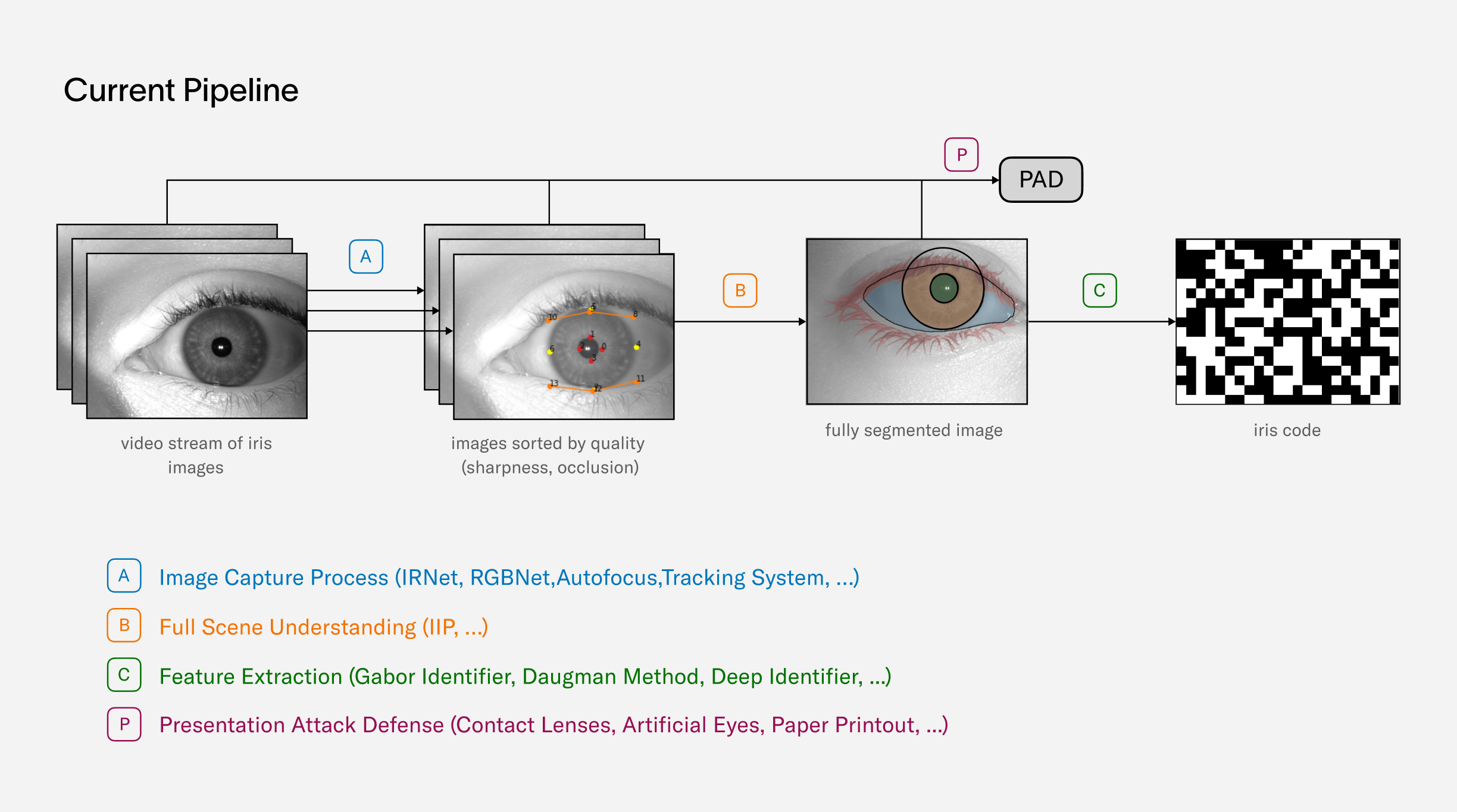

The Orb

Previous sections discussed why a custom hardware device using iris biometrics is the only approach to ensure inclusivity (i.e. everyone can sign up regardless of their location or background) and fraud resistance, promoting fairness for all participants. This section discusses the engineering details of the Orb, which was first prototyped and developed by Tools for Humanity.

Why Custom Hardware is Needed

It would have been significantly easier to use off-the-shelf devices like smartphones or iris imaging devices. However, neither is suitable for uncontrolled and adversarial environments where there are significant incentives to cheat. To reliably distinguish people, only iris biometrics are suitable for this globally scalable use case. To enable maximum accuracy, device integrity, spoof prevention, and privacy, a custom device is necessary. The reasoning is described in the following section.

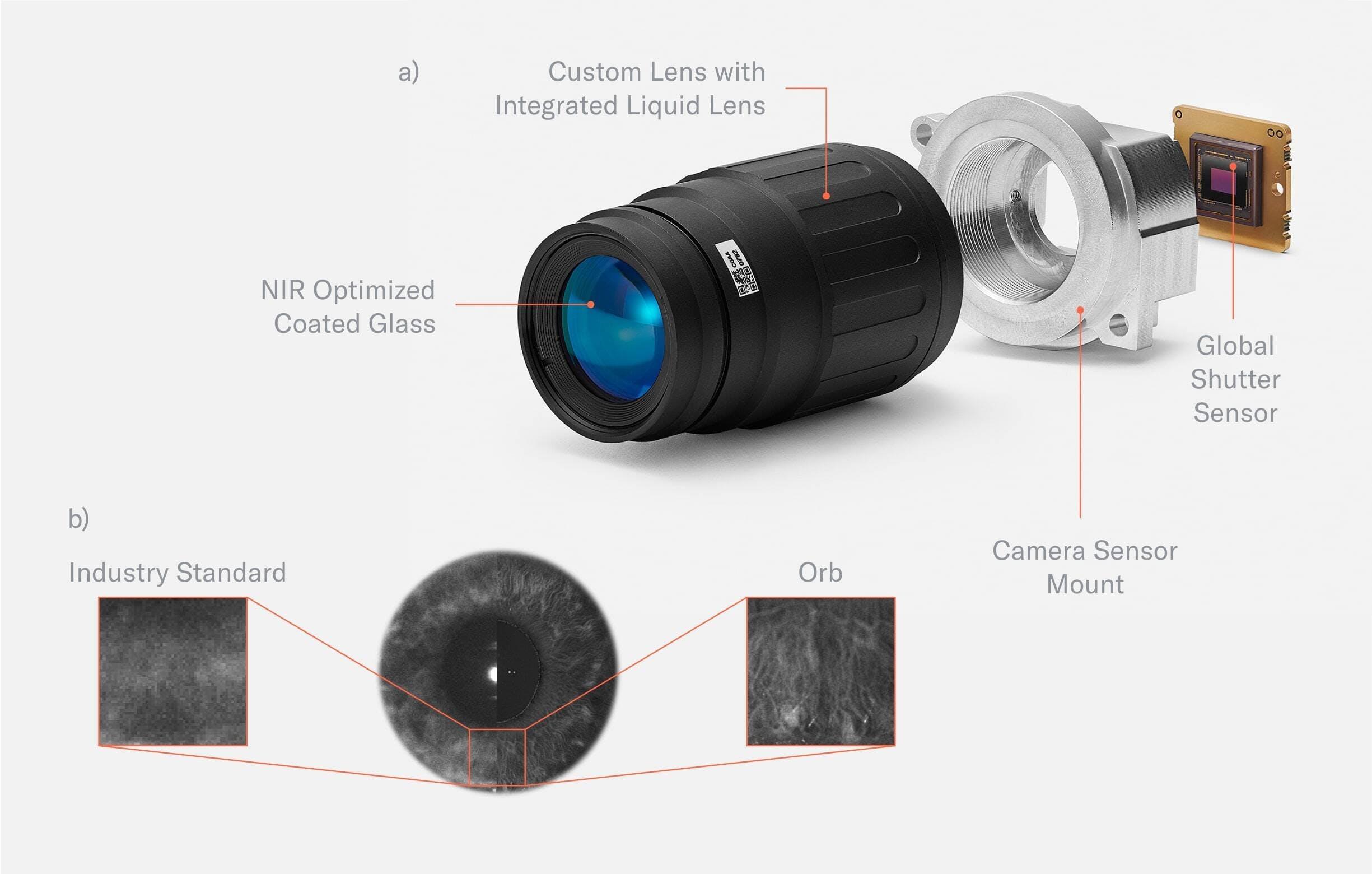

In terms of the biometric verification itself, the fastest and most scalable path would be to use smartphones. However, there are several key challenges with this approach. First, smartphone cameras are insufficient for iris biometrics due to their low resolution across the iris, which decreases accuracy. Further, imaging in the visible spectrum can result in specular reflections on the cornea covering the iris, and the low reflectivity of brown eyes (most of the population) introduces noise. The Orb captures high quality iris images with more than an order of magnitude higher resolution compared to iris recognition standards. This is enabled by a custom, narrow field-of-view camera system. Importantly, images are captured in the near infrared spectrum to reduce environmental influences like different light sources and specular reflections. More details on the Orb’s imaging system can be found in the following sections.
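The resolution argument can be checked with a pinhole-camera estimate of how many pixels fall across the iris. In this sketch the iris diameter of about 12 mm is a typical adult value, while the sensor widths, fields of view, and distances are hypothetical round numbers for a wide phone camera and a ~5° telephoto path, not specifications of any real device.

```python
import math

IRIS_DIAMETER_MM = 12.0  # typical adult iris diameter (approximate)


def pixels_across_iris(sensor_px: int, hfov_deg: float, distance_mm: float,
                       iris_mm: float = IRIS_DIAMETER_MM) -> float:
    """Pinhole estimate of the number of horizontal pixels spanning the iris."""
    # Width of the scene covered by the sensor at the given distance.
    scene_width_mm = 2 * distance_mm * math.tan(math.radians(hfov_deg) / 2)
    return iris_mm * sensor_px / scene_width_mm


# Hypothetical configurations, for comparison only.
phone = pixels_across_iris(sensor_px=4000, hfov_deg=70.0, distance_mm=300.0)
telephoto = pixels_across_iris(sensor_px=2000, hfov_deg=5.0, distance_mm=400.0)
print(f"wide phone camera: ~{phone:.0f} px across the iris")
print(f"~5 degree telephoto: ~{telephoto:.0f} px across the iris")
```

Even with half the sensor pixels, the narrow field of view puts several times more pixels on the iris. The cost is a tiny capture area, which is why the Orb pairs the telephoto camera with a steerable gimbal.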

Second, the security bar achievable with smartphones is very low. For PoP, the important part is not identification (i.e. “Is someone who they claim they are?”), but rather proving that someone has not verified yet (i.e. “Is this person already registered?”). A successful attack on a PoP system does not require the attacker to impersonate an existing individual, which is the challenging requirement for unlocking someone's phone. It merely requires the attacker to look different from everyone who has registered so far. Phones and existing iris cameras lack the multi-angle and multispectral cameras, as well as the active illumination, needed to detect so-called presentation attacks (i.e. spoof attempts) with high confidence. A widely viewed video demonstrating an effective method for spoofing Samsung’s iris recognition illustrates how straightforward such an attack can be in the absence of capable hardware.

Further, a trusted execution environment would need to be established to ensure that verifications originate from legitimate devices (not emulators). While some smartphones contain dedicated hardware for such purposes (e.g., the Secure Enclave on the iPhone, or the Titan M chip on the Pixel), most smartphones worldwide lack the hardware necessary to verify the integrity of the execution environment. Without those security features, virtually no security can be provided, and spoofing both the image capture and the enrollment request is straightforward for a capable attacker. This would allow anyone to generate an arbitrary number of synthetic verifications.

Similarly, no off-the-shelf iris recognition hardware met the requirements of a global proof of personhood. The main challenge is that the device needs to operate in untrusted environments, which poses very different requirements than, e.g., access control or border control, where the device is operated in trusted environments by trusted personnel. This significantly raises the requirements for spoof prevention as well as for hardware and software security. Most devices lack multi-angle and multispectral imaging sensors for high confidence spoof detection. Further, high security spoof detection requires a significant amount of local compute on the device, so that data transmissions cannot be intercepted; most iris scanners do not meet this requirement. A custom device enables full control over the design. This includes tamper detection that can deactivate the device upon intrusion, firmware designed for security to make unauthorized access very difficult, and the ability to update the firmware, down to the bootloader, via over-the-air updates. All iris codes generated by an Orb are signed by a secure element to ensure they originate from a legitimately provisioned Orb rather than, for example, an attacker’s laptop. Further, the computing unit of the Orb is capable of running multiple real-time neural networks on the five camera streams (mentioned in the last section). This processing is used for real-time image capture optimization as well as spoof detection. Additionally, it enables maximum privacy by processing all images on the device, such that no iris images need to be stored by the verifier.
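The provenance check on iris codes can be sketched as follows. The Orb's secure element produces a signature; to stay within the Python standard library, this sketch substitutes an HMAC keyed per device. That stand-in demonstrates the accept/reject logic but not the key property of real asymmetric signatures, namely that verifiers never hold the signing secret. The registry and function names are hypothetical.

```python
import hashlib
import hmac
from typing import Dict

# Hypothetical registry of provisioned devices. With real asymmetric signatures
# this would hold public keys; the HMAC stand-in needs the shared secret instead.
PROVISIONED_KEYS: Dict[str, bytes] = {}


def provision(device_id: str, key: bytes) -> None:
    """Register a device key at provisioning time."""
    PROVISIONED_KEYS[device_id] = key


def tag_iris_code(key: bytes, iris_code: bytes) -> bytes:
    """Stand-in for the secure element's signature over an iris code."""
    return hmac.new(key, iris_code, hashlib.sha256).digest()


def accept_submission(device_id: str, iris_code: bytes, tag: bytes) -> bool:
    """Accept only iris codes authenticated by a provisioned device key."""
    key = PROVISIONED_KEYS.get(device_id)
    if key is None:
        return False  # unknown device, e.g. an attacker's laptop
    expected = tag_iris_code(key, iris_code)
    return hmac.compare_digest(expected, tag)  # constant-time comparison
```

A tampered iris code, or a submission from a device that was never provisioned, fails the check even if the payload is otherwise well formed.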

While no hardware system interacting with the physical world can achieve perfect security, the Orb is designed to set a high bar, particularly in defending against scalable attacks. The anti-fraud measures integrated into the Orb are constantly refined. Several teams at Tools for Humanity are continuously working on increasing the accuracy and sophistication of the liveness algorithms. An internal red team is probing various attack vectors. In the near future, the red teaming will extend to external collaborators including through a bug bounty program.

Lastly, the correlation between image quality and biometric accuracy is well established, and it is expected that deep learning will benefit even more from increased image quality. Given the goal of reducing error rates as much as possible to achieve maximum inclusivity, the image quality of most devices was insufficient.

Since commercially available iris imaging devices did not meet the image quality or security needs, Tools for Humanity dedicated several years to developing a custom biometric verification device (the Orb) to enable universal access to the global economy in the most inclusive manner possible.

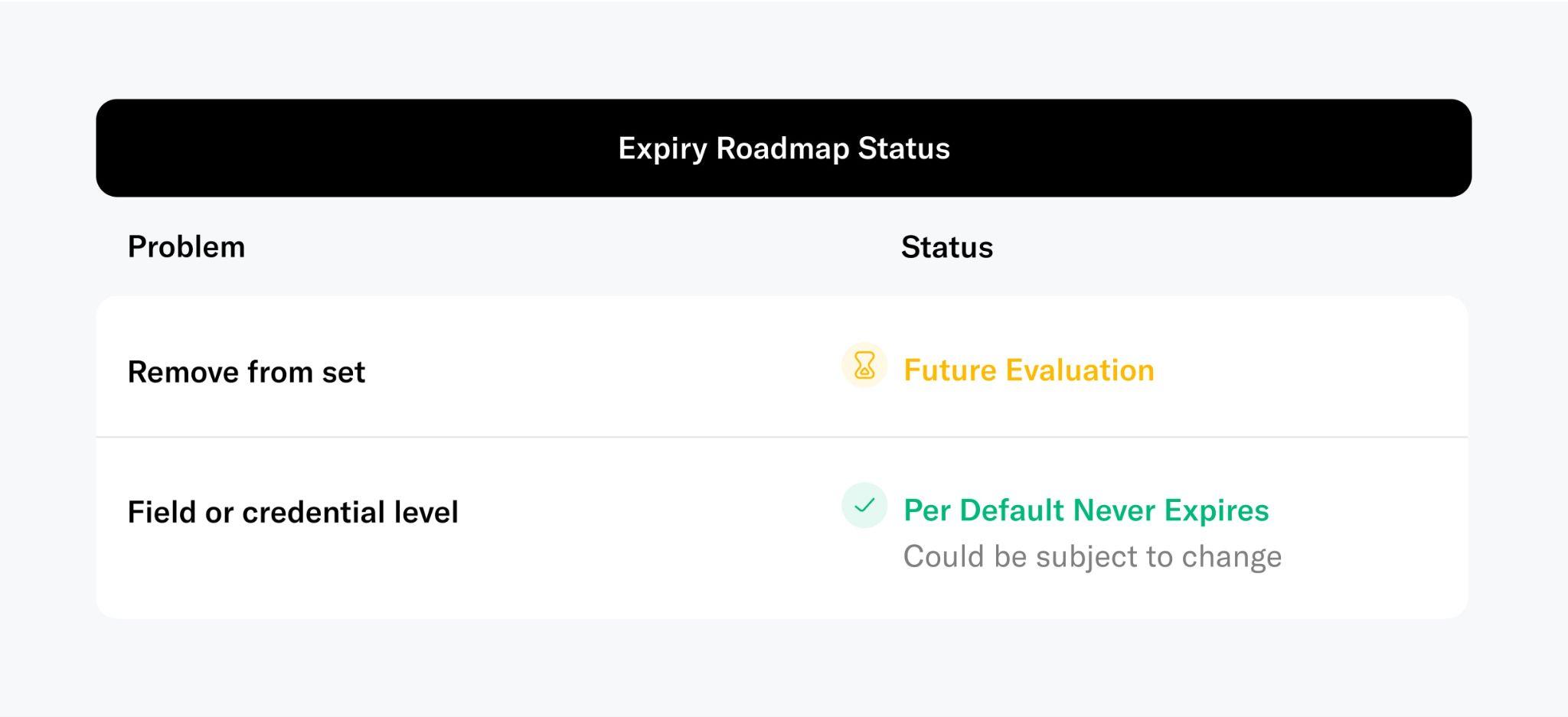

Hardware

Three years of R&D, including one year of small-scale field testing and one year of transition to manufacturing at scale, have led to the current version of the Orb, which is being open sourced. Feedback for design improvements is welcome and highly encouraged. The remainder of this section will go through a teardown of the Orb, with a few engineering anecdotes included.

Today’s Orb represents a precise balance of development speed, compactness, user experience, cost and at-scale production with minimal compromise being made on imaging quality and security. There will likely be future versions that are optimized even further both by Tools for Humanity and other companies as the Worldcoin ecosystem decentralizes. However, the current version represents a key milestone that enables scaling the Worldcoin project.

The following takes the reader through some of the most important engineering details of the Orb, as well as how the imaging system works. For security reasons, the only details left out are the tamper detection mechanisms meant to catch intrusion attempts.

Design

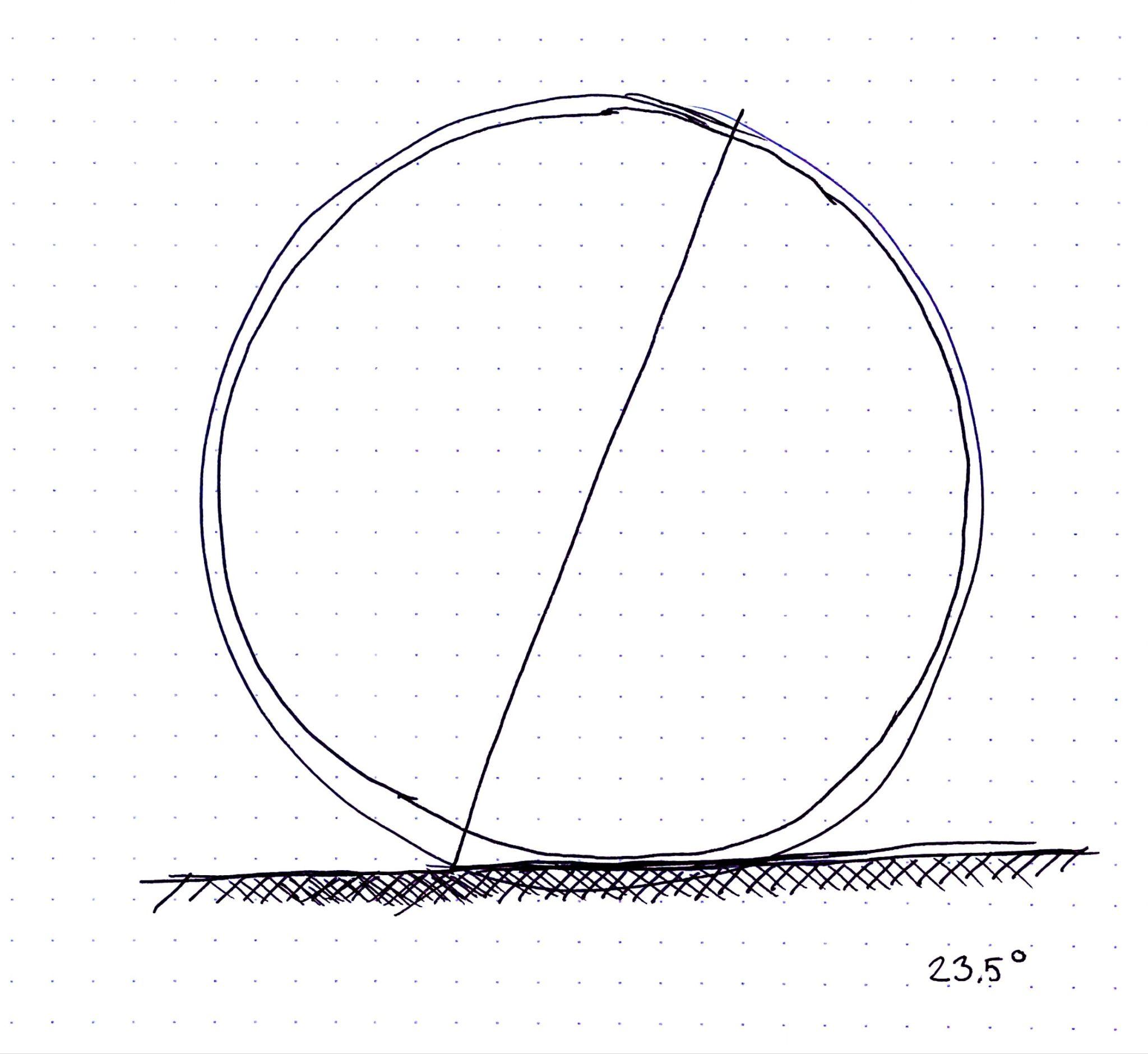

Design was fundamental to the development of the Orb. A spherical shape is an engineering challenge; however, it was important for the design of the Orb to reflect the values of the Worldcoin project. The spherical shape stands for Earth, which is home to all. Similarly, the Orb is tilted at 23.5 degrees, matching Earth's axial tilt. There is even a 2mm thick clear shell on the outside of the Orb, which protects it just like the atmosphere protects Earth. The resemblance to Earth symbolizes that the Worldcoin project is meant to give everyone the opportunity to participate, regardless of their background. The Orb and its use of biometrics reflect that, since nothing is required other than being human.

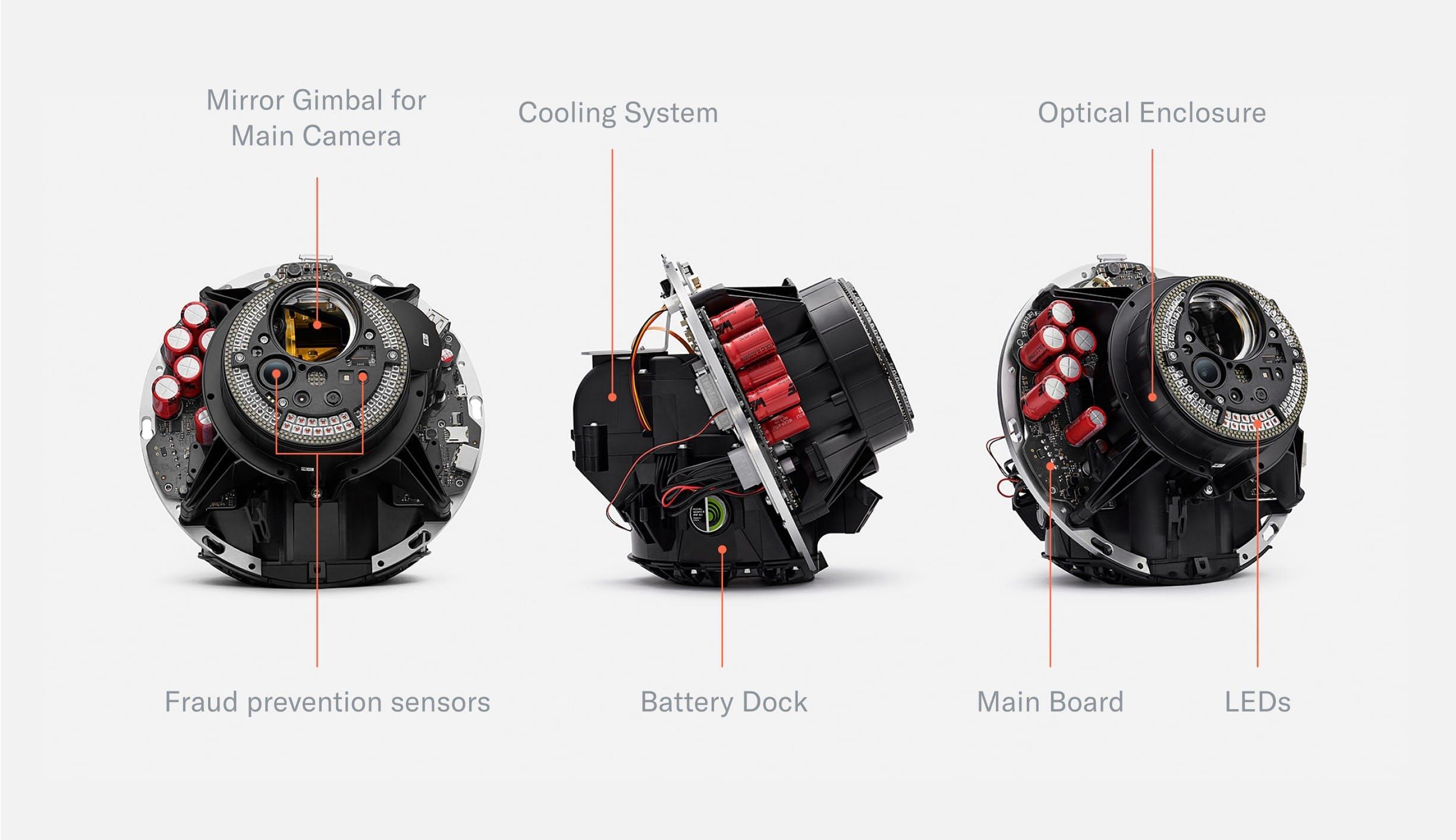

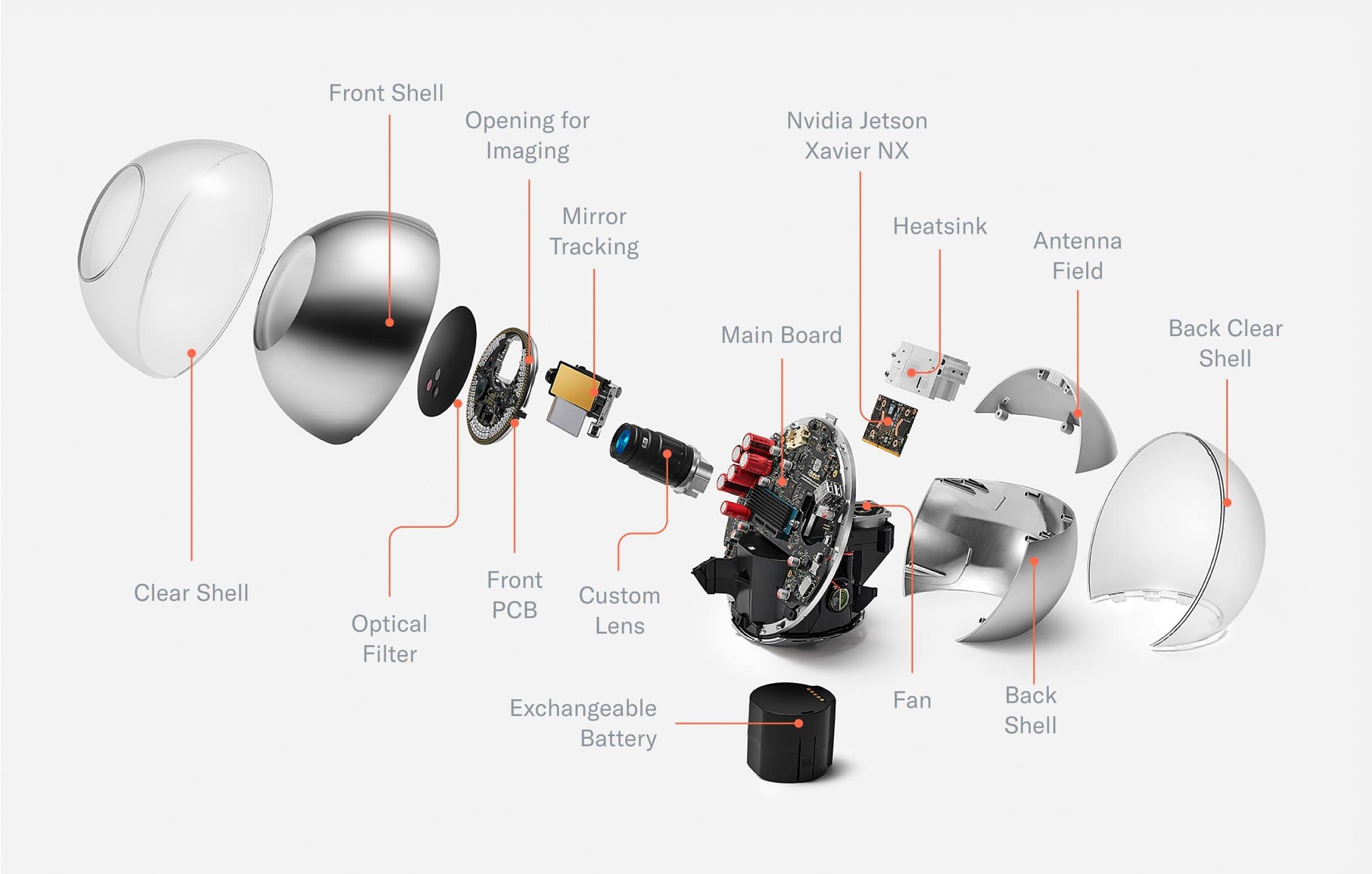

Mechanics

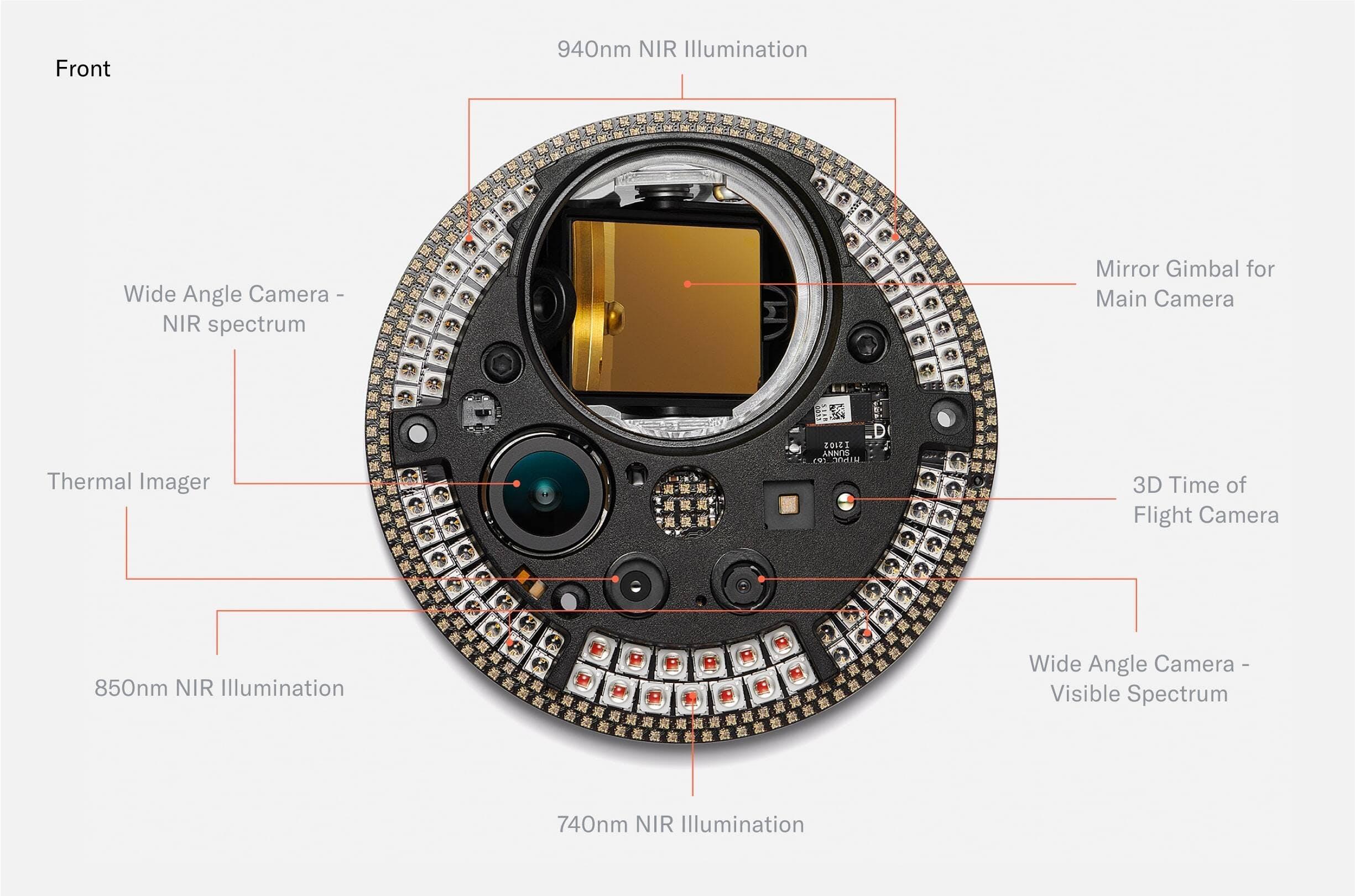

When removing the shell, the mainboard, optical system and cooling system become visible. Most of the optical system is hidden in an enclosure that, together with the shell, forms a dust- and water-resistant environment to enable long-term use even in challenging environments.

The Orb consists of two hemispheres separated by the mainboard, which is tilted at 23.5°, the angle of Earth's rotational axis. The mainboard holds a powerful computing unit to enable local processing for maximum privacy. The front half of the Orb is dedicated to the sealed optical system, which consists of several multispectral sensors to verify liveness and a 2D gimbal-enabled narrow field-of-view camera to capture high resolution iris images. The other hemisphere is dedicated to the cooling system as well as speakers. An exchangeable battery can be inserted from the bottom to enable uninterrupted operation in a mobile setting.

Once the shell is removed, the Orb can be divided into four core parts:

- Front: The optical system

- Middle: The mainboard separates the device into two hemispheres

- Back: The main computing unit as well as the active cooling system

- Bottom: An exchangeable battery

With the housing material removed (e.g. the dust-proof enclosure of the optical system), all relevant components of the Orb become visible. This includes the custom lens, which is optimized for both near infrared imaging and fast, durable autofocus. The front of the optical system is sealed by an optical filter to keep dust out and minimize noise from the visible spectrum to optimize image quality. In the back, a plastic component in the otherwise chrome shell allows for optimized antenna placement. The chrome shell is covered by a clear shell to avoid deterioration of the coating over time.

First prototypes were tested outside the lab as early as possible. Naturally, this taught the team many lessons, including:

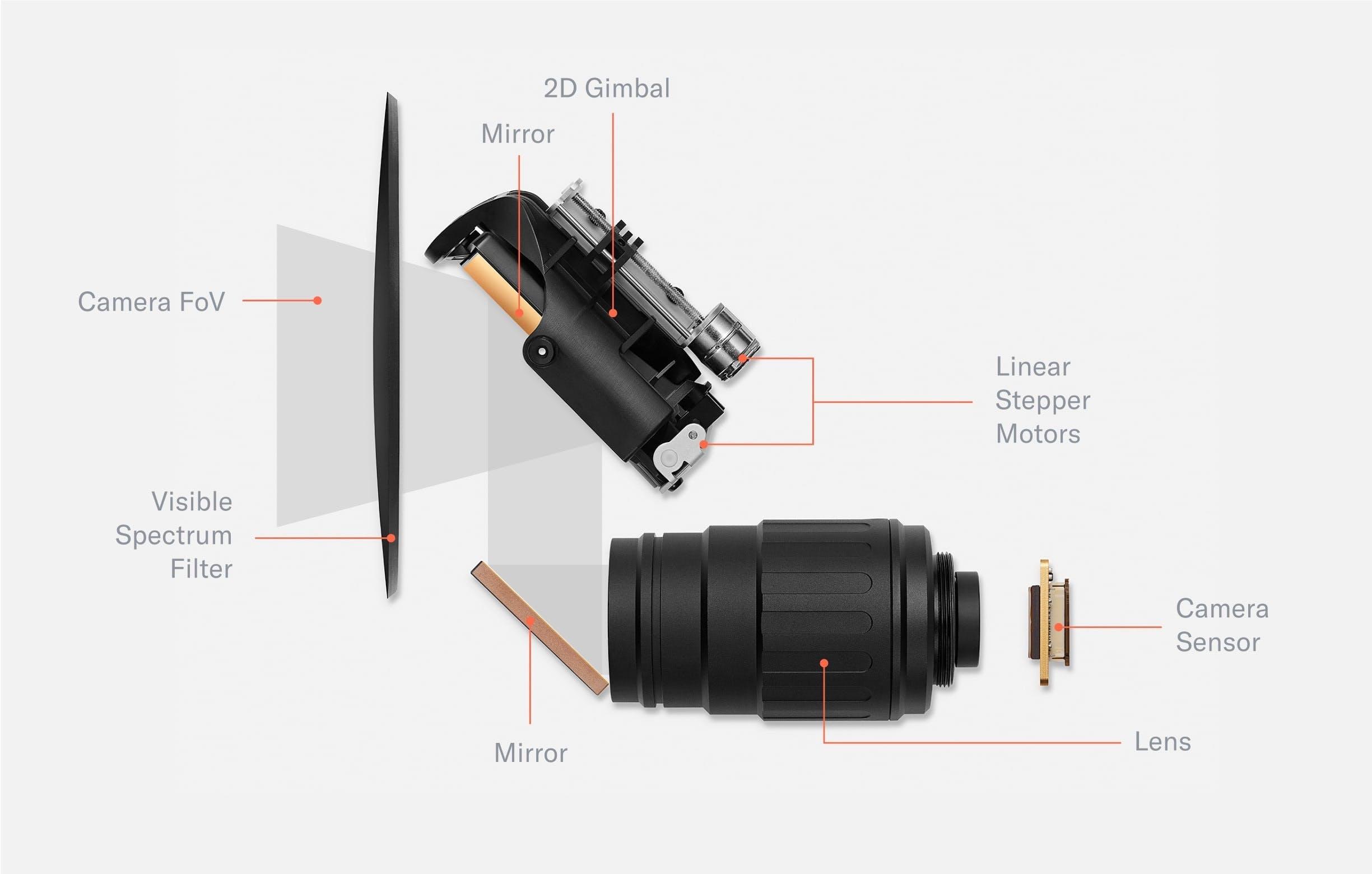

Optical System

With the first prototype, the signup experience was notoriously difficult. Over the course of a year the optical system was upgraded with autofocus and eye tracking such that alignment becomes trivial when the person is within an arm's length of the Orb.

Battery

No off-the-shelf battery would last a full day on a single charge. A custom exchangeable battery was designed based on 18650 Li-Ion cells, the same form factor as the cells used in modern electric cars. The battery consists of 8 cells with 3.7V nominal voltage in a 4S2P configuration (14.8V) with a capacity of close to 100Wh, a limit imposed by transport regulations. Because batteries can be swapped, Orb uptime is no longer limited by a single charge.

The Orb’s custom battery is made of Li-Ion 18650 cells (the same cells used in many electric cars). With close to 100Wh, the capacity is optimized for battery lifetime while complying with transportation regulations. A USB-C connector makes recharging convenient.
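The pack figures above follow from simple arithmetic: cells in series add voltage, and parallel strings add capacity. In this sketch the 4S2P layout and 3.7V nominal voltage come from the text, while the 3.35Ah per-cell capacity is an assumed value typical of high-capacity 18650 cells, not a figure from this document.

```python
from typing import Tuple


def pack_specs(series: int, parallel: int, cell_voltage_v: float,
               cell_capacity_ah: float) -> Tuple[float, float, float]:
    """Nominal voltage (V), capacity (Ah) and energy (Wh) of an SxP battery pack."""
    voltage_v = series * cell_voltage_v          # series cells add voltage
    capacity_ah = parallel * cell_capacity_ah    # parallel strings add capacity
    return voltage_v, capacity_ah, voltage_v * capacity_ah


# 4S2P of 3.7 V cells as in the text; 3.35 Ah per cell is an assumption.
v, ah, wh = pack_specs(series=4, parallel=2, cell_voltage_v=3.7, cell_capacity_ah=3.35)
print(f"{v:.1f} V, {ah:.2f} Ah, {wh:.1f} Wh (under 100 Wh: {wh < 100})")
```

Under this assumption the pack lands just below 100Wh, consistent with the "close to 100Wh" figure, which explains why a larger pack would cross the regulatory threshold.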

Shell

The chrome coating of the shell sometimes deteriorated in handheld use. Therefore, a 2mm clear shell was added, both to optimize the design and to protect the chrome coating from scratches and other wear.

UX LEDs

To make the user experience more intuitive, especially in loud environments where a person might not be able to hear sound feedback, an LED ring was added to help guide people through the sign-up process. Similarly, status LEDs were exposed next to the only button on the Orb to indicate its current state.

Optical System

Early field tests showed that the verification experience needed to be even simpler than anticipated. To achieve this, the team first experimented with many approaches featuring mirrors that allowed people to use their reflection to align with the Orb's imaging system. However, designs that worked well in the lab quickly broke down in the real world. The team ended up building a two-camera system featuring a wide angle camera and a telephoto camera with an adjustable ~5° field of view by means of a 2D gimbal. This increased the spatial volume in which a signup can be successfully completed by several orders of magnitude, from a tiny box of 20×10×5 mm for each eye to a large cone.

The main imaging system of the Orb consists of a telephoto lens and 2D gimbal mirror system, a global shutter camera sensor and an optical filter. The movable mirror increases the field of view of the camera system by more than two orders of magnitude. The optical unit is sealed by a black filter that blocks the visible spectrum and transmits only near infrared light, protecting the high precision optics from dust. The image capture process is controlled by several neural networks.

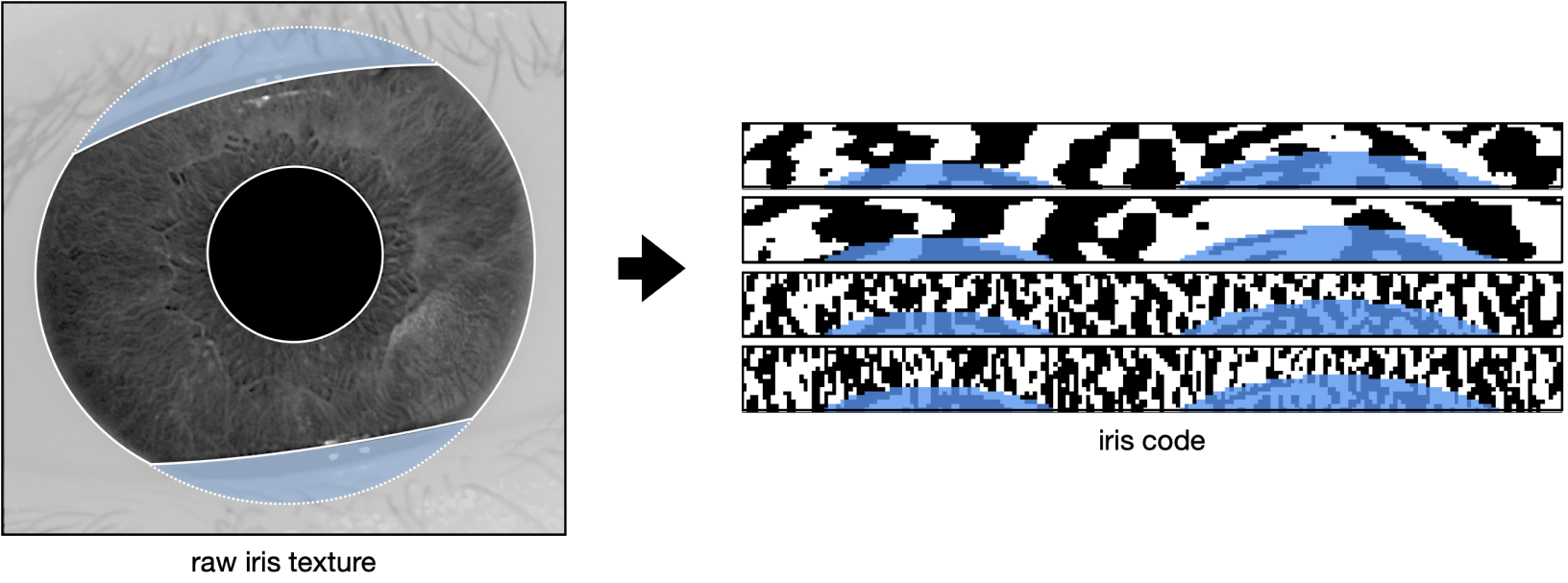

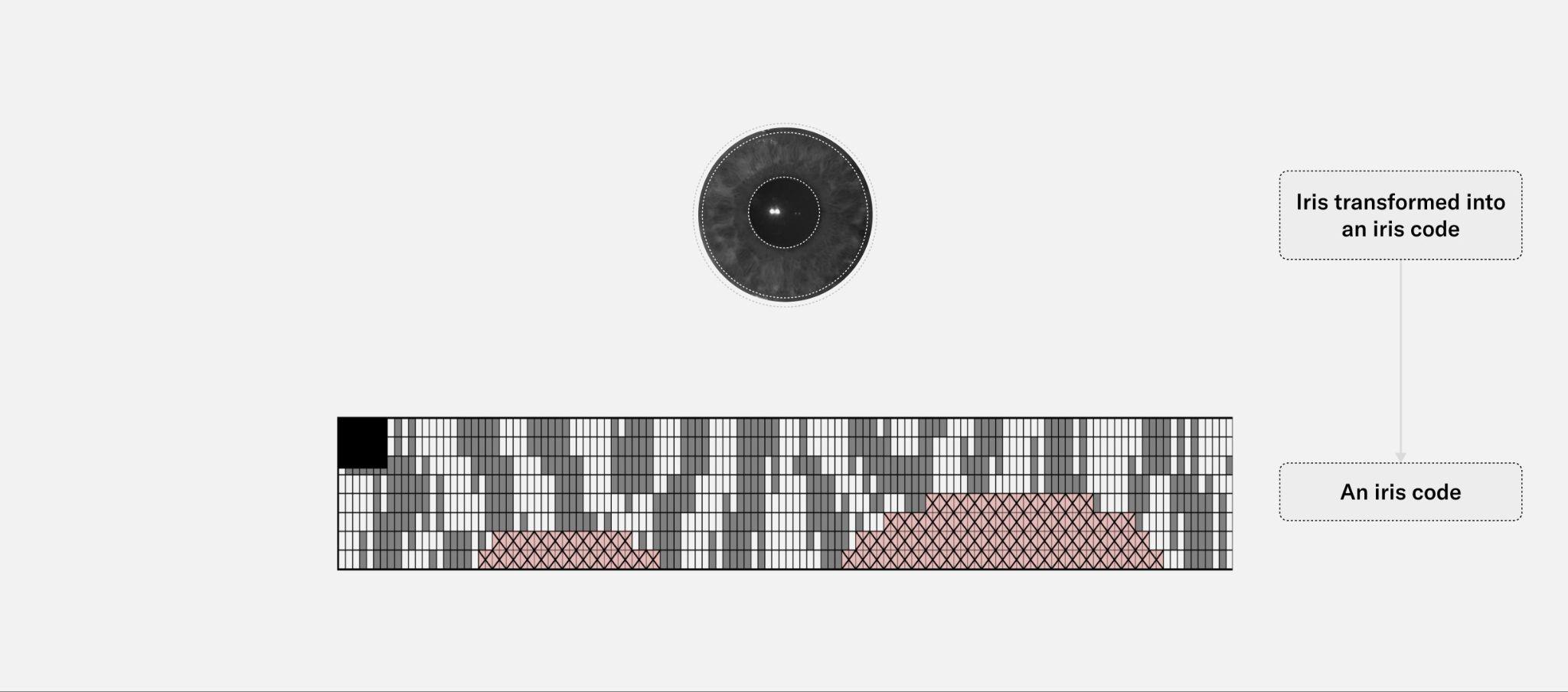

The wide angle camera captures the scene, and a neural network predicts the location of both eyes. Through geometrical inference, the field of view of the telephoto camera is steered to the location of an eye to capture a high resolution image of the iris, which is further processed by the Orb into an iris code.
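The geometric inference step can be illustrated with a simplified model. This sketch assumes, hypothetically, that the wide angle camera is an ideal pinhole camera with a known focal length (in pixels) and principal point, that it shares an optical center with the telephoto path, and that pan and tilt can be treated independently (a small-angle simplification; a real system must also correct for the offset between the cameras and the eye's distance). Because rotating a mirror by an angle deflects the reflected beam by twice that angle, the mirror turns by half the required line-of-sight change. All names and values are illustrative.

```python
import math
from typing import Tuple


def eye_direction_deg(u: float, v: float, fx: float, fy: float,
                      cx: float, cy: float) -> Tuple[float, float]:
    """Pan/tilt angles (degrees) of the ray through pixel (u, v) in a pinhole camera."""
    pan = math.degrees(math.atan2(u - cx, fx))
    tilt = math.degrees(math.atan2(v - cy, fy))
    return pan, tilt


def mirror_command_deg(pan: float, tilt: float) -> Tuple[float, float]:
    """Mirror rotation for a line-of-sight change (reflection doubles the angle)."""
    return pan / 2.0, tilt / 2.0


# Hypothetical eye detection at pixel (1280, 540) in a 1920x1080 wide angle frame.
pan, tilt = eye_direction_deg(u=1280, v=540, fx=1000, fy=1000, cx=960, cy=540)
print(mirror_command_deg(pan, tilt))
```

The halving is why a compact gimbal mirror with a modest range of motion can sweep the narrow telephoto view across a much larger region.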

Beyond simplicity, the image quality was the main focus. The correlation between image quality and biometric accuracy is well established.

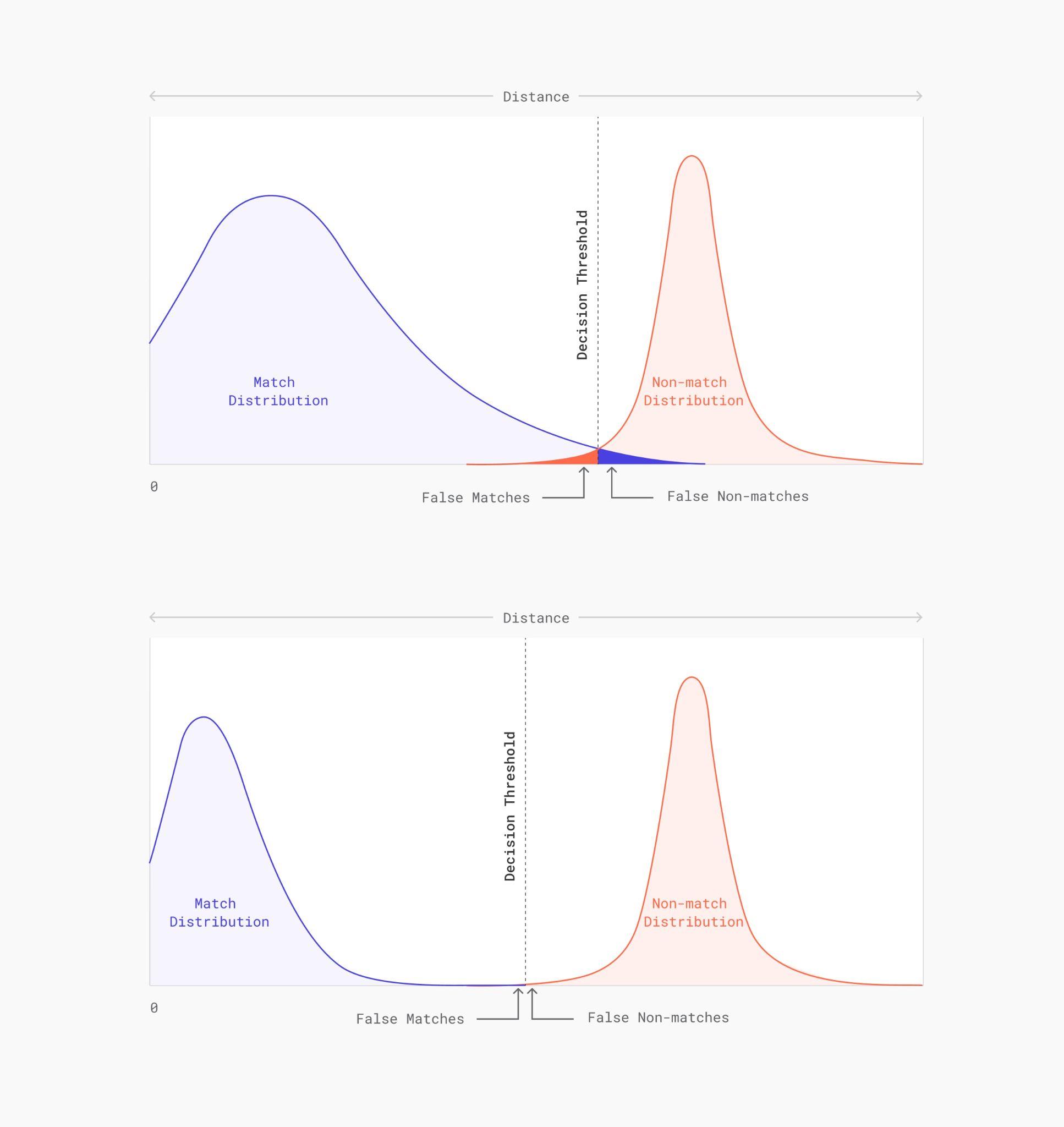

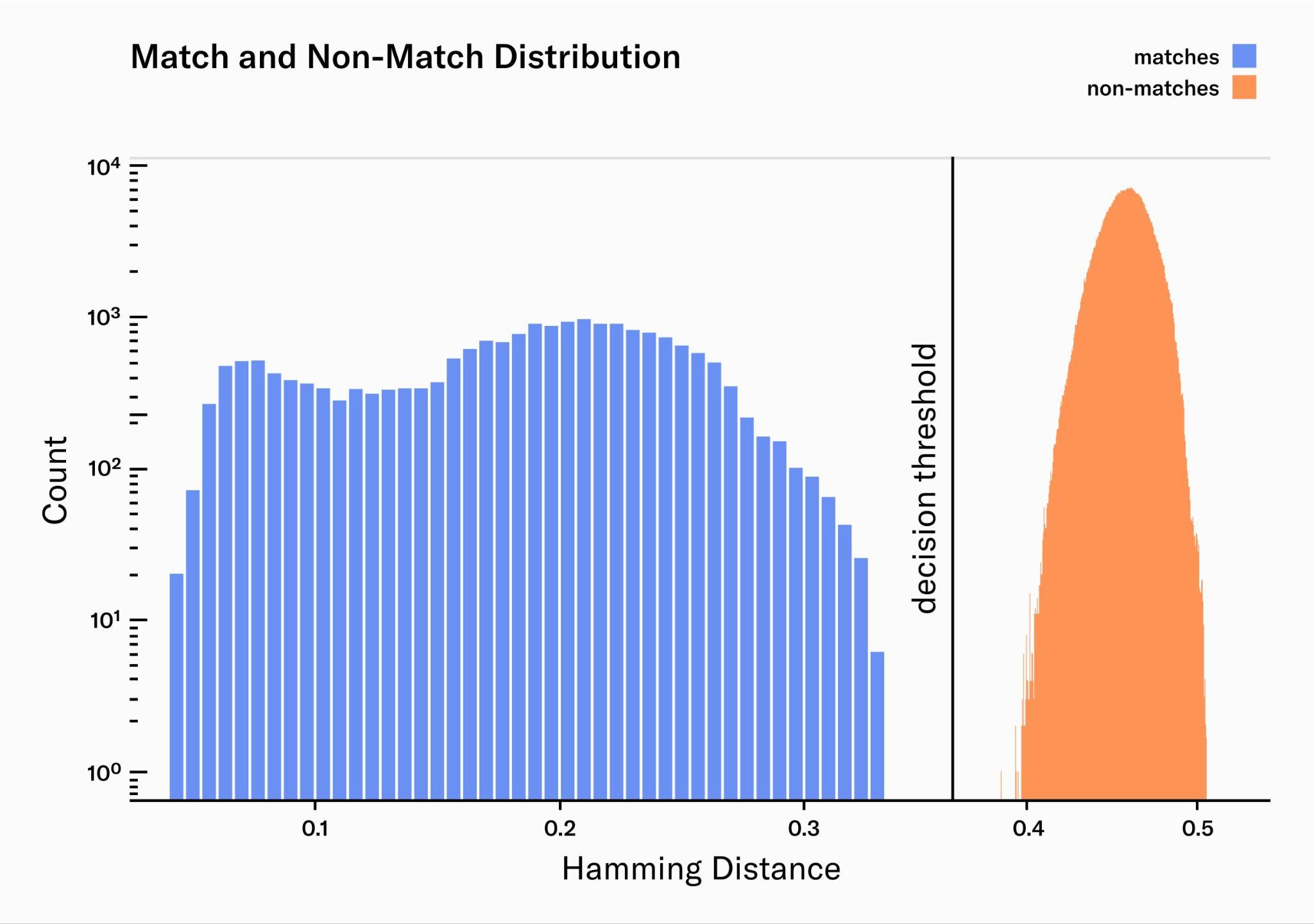

Here pairwise comparisons are plotted: the match distribution for pairs of the same identity (blue) and non-match distribution for pairs of different identity (red). In a perfect system, the match-distribution would be a very narrow peak at zero. However, multiple sources of error widen the distribution, leading to more overlap with the non-match distribution and therefore increasing False Match and False Non-Match rates. High quality image acquisition narrows the match-distribution significantly and therefore minimizes errors. The width of the non-match distribution is determined by the amount of information that is captured by the biometric algorithm: the more information is encoded in the embeddings the narrower the distribution.
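The effect of overlapping distributions can be quantified directly: given dissimilarity scores (e.g. Hamming distances) for genuine pairs and impostor pairs, a decision threshold yields an empirical FMR and FNMR. The scores below are a tiny made-up toy set, intended only to show how moving the threshold trades one error for the other; they are not Orb measurements.

```python
from typing import Sequence, Tuple


def error_rates(genuine: Sequence[float], impostor: Sequence[float],
                threshold: float) -> Tuple[float, float]:
    """Return (FMR, FNMR); a pair is declared a match if its distance < threshold."""
    false_matches = sum(1 for s in impostor if s < threshold)
    false_non_matches = sum(1 for s in genuine if s >= threshold)
    return false_matches / len(impostor), false_non_matches / len(genuine)


# Toy distances: genuine pairs cluster near 0, impostor pairs near 0.5.
genuine = [0.05, 0.10, 0.12, 0.20, 0.34]
impostor = [0.30, 0.44, 0.47, 0.50, 0.52]

for t in (0.25, 0.32, 0.40):
    fmr, fnmr = error_rates(genuine, impostor, t)
    print(f"threshold={t:.2f}  FMR={fmr:.2f}  FNMR={fnmr:.2f}")
```

The genuine outlier at 0.34 and the impostor pair at 0.30 overlap, so no threshold removes both errors. Better image acquisition would narrow the genuine distribution, pulling such outliers left and letting one threshold keep both rates low.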

Many off-the-shelf products were tested, but none of the lenses was compact enough to meet the imaging requirements while still being affordable. Therefore, the team partnered with a well known specialist in the machine vision industry to build a customized lens. The lens is optimized for the near infrared spectrum and has an integrated custom liquid lens, which allows for neural network controlled millisecond autofocus. It is paired with a global shutter sensor to capture high resolution, distortion free images.

Fig. 3.10:

a) Custom telephoto lens. The telephoto lens was custom designed for the Orb. The glass is coated to optimize image capture in the near infrared spectrum. An integrated liquid lens allows for durable millisecond autofocus. The position of the liquid lens is controlled by a neural network to optimize focus. To capture images free of motion blur, the global shutter sensor is synchronized with pulsed illumination.

b) A comparison of the image quality of the Worldcoin Orb vs. the industry standard clearly shows the advancements made in the space. The camera and the corresponding pulsed infrared illumination are synchronized to minimize motion blur and suppress the influence of sunlight. This way, the Orb creates lab-like imaging conditions, no matter its location. The infrared illumination is compliant with eye safety standards (such as EN 62471:2008).

Image quality was the one thing never compromised no matter how difficult it was. In terms of resolution the Orb is orders of magnitude above the industry standard. This provides the basis for the lowest error rates possible to, in turn, maximize the inclusivity of the system.

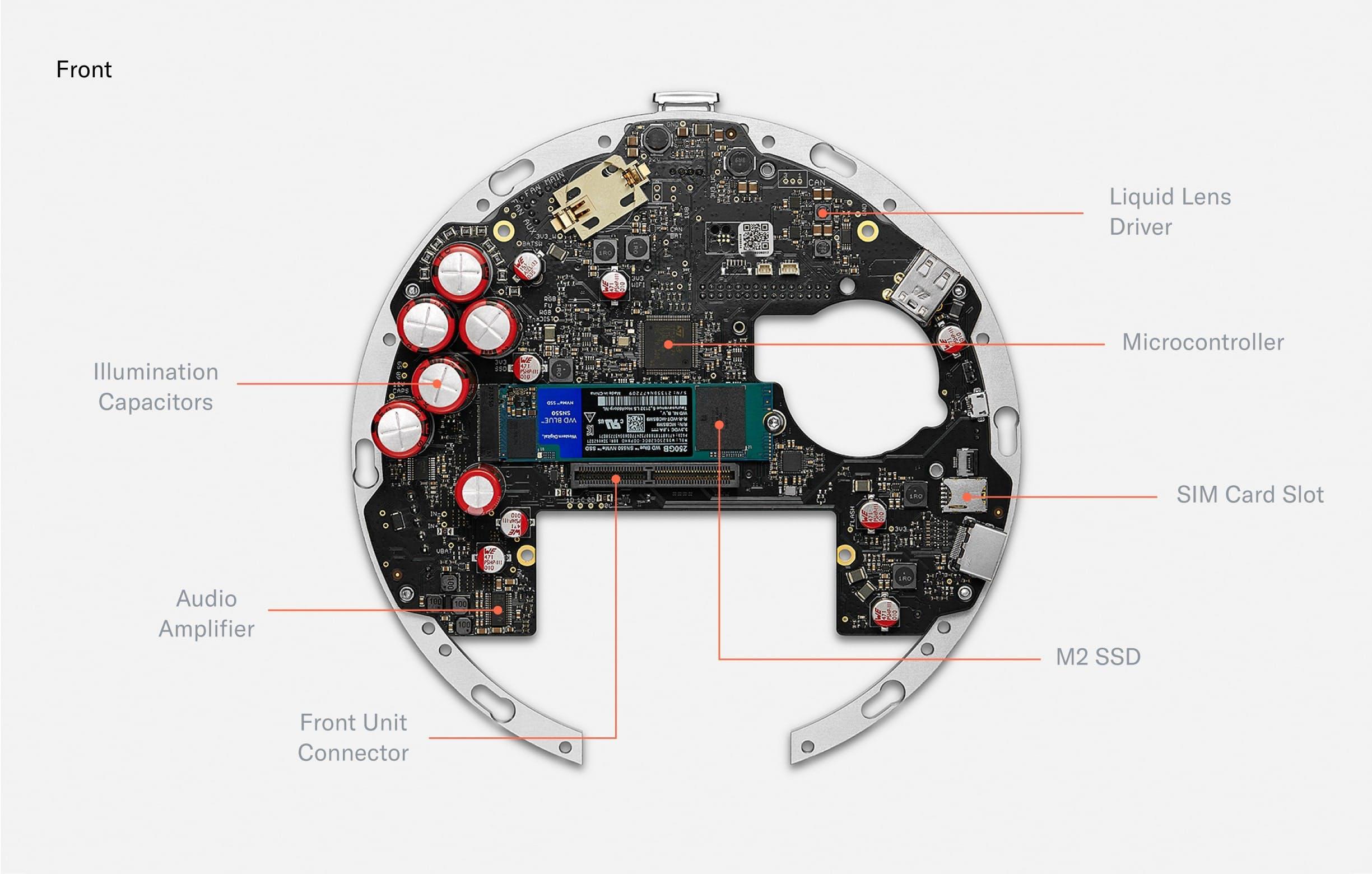

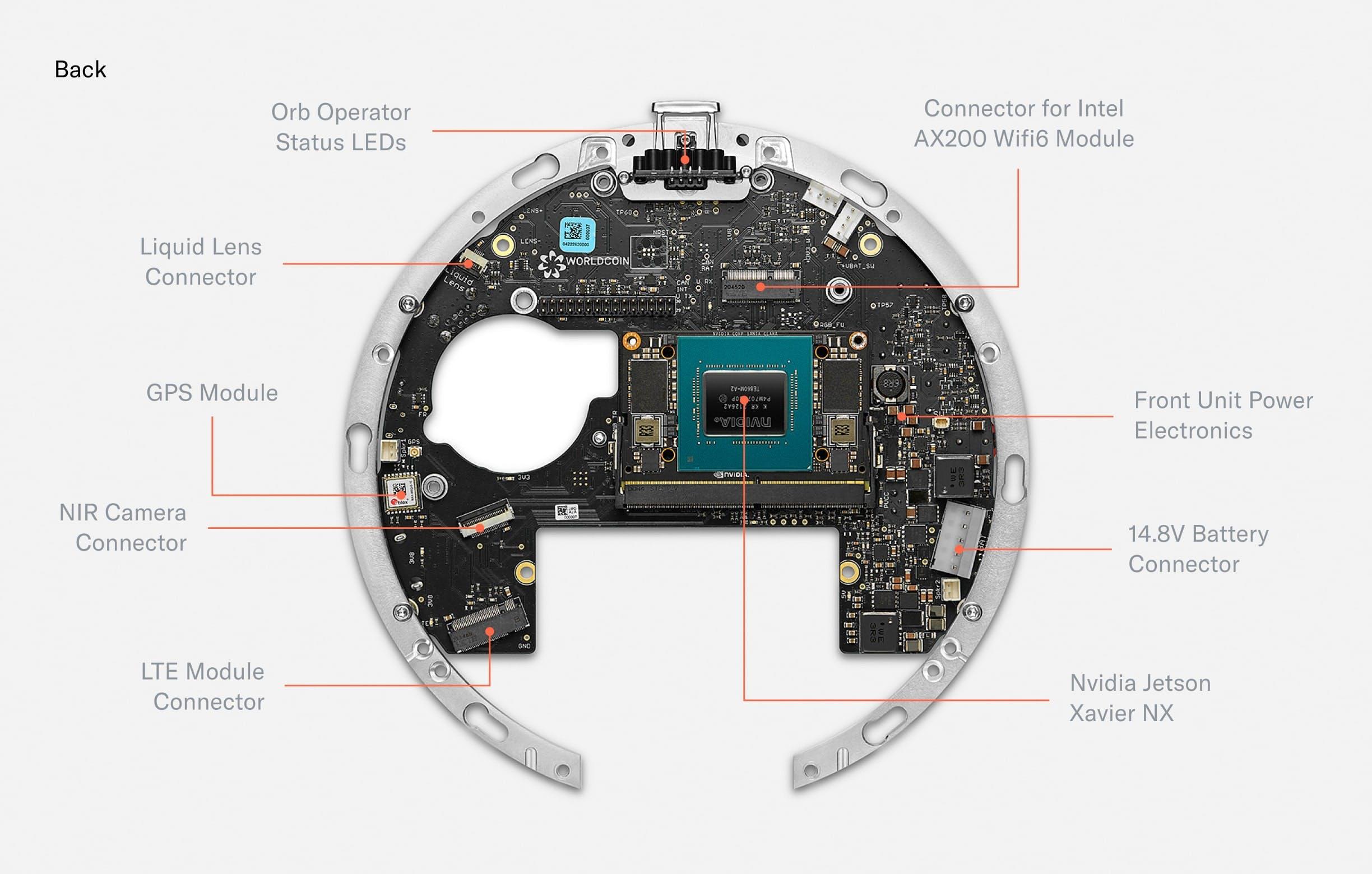

Electronics

When disassembling the Orb further, several PCBs (Printed Circuit Boards) are visible, including the front PCB containing all illumination, the security PCB for intrusion detection and the bridge PCB which connects the front PCB with the largest PCB: the mainboard.

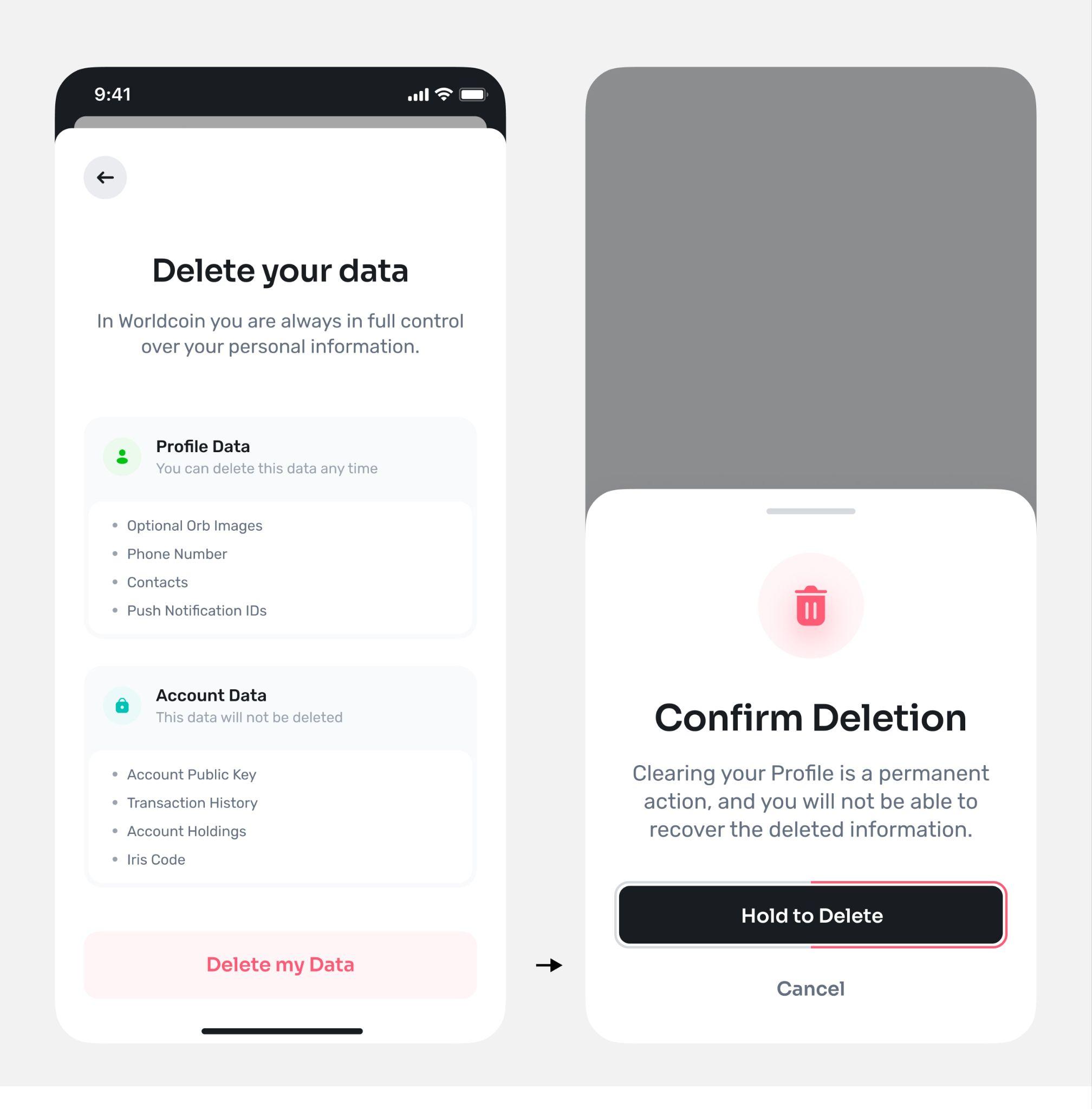

The front of the mainboard holds capacitors to power the pulsed, near infrared illumination (certified eye safe). There are also drivers to power the deformation of the liquid lens in the optical system. A microcontroller controls precise timing of the peripherals. An encrypted M.2 SSD can be used to store images for voluntary data custody and image data collection. Those images are secured by a second layer of asymmetric encryption such that the Orb can only encrypt, but cannot decrypt. The contribution of data is optional and data deletion can be requested at any point in time through the World App. A SIM card slot enables optional LTE connectivity.

Fig. 3.

The back of the mainboard holds several connectors for active elements of the optical system. Additionally, a GPS module enables precise location of Orbs for fraud prevention purposes. A Wi-Fi Module equips the Orb with the possibility to upload iris codes to make sure every person can only sign up once. Finally, the mainboard hosts a Nvidia Jetson Xavier NX which runs multiple neural networks in real time to optimize image capture, perform local anti-spoof detection and calculate the iris code locally to maximize privacy.

The mainboard acts as a custom carrier board for the Nvidia Jetson Xavier NX SoM, which is the main computing unit powering the Orb. The Jetson is capable of running multiple neural networks on several camera streams in real time to optimize image capture (autofocus, gimbal positioning, illumination, quality checks, e.g. “is_eye_open”) and perform spoof detection. To optimize for privacy, images are fully processed on the device, and are only stored by Tools for Humanity if the user gives explicit consent to help improve the system.

Apart from the Jetson, the other major “plugged-in” component is a 250GB M.2 SSD. The encrypted SSD can be used to buffer images for voluntary data contribution. Images are protected by a second layer of asymmetric encryption such that the Orb can only encrypt, but cannot decrypt. The contribution of data is optional and data deletion can be requested at any point in time through the app.

Further, an STM32 microcontroller controls time-critical peripherals, sequences power, and boots the Jetson. The Orb is equipped with Wi-Fi 6 and a GPS module to locate the Orb and prevent misuse. Finally, a 12-bit liquid lens driver allows for controlling the focus plane of the telephoto lens with a precision of 0.4mm.

The most densely packed PCB of the Orb is the front PCB. It mainly consists of LEDs. The outermost RGB LEDs power the “UX LED ring.” Further inside, there are 79 near infrared LEDs of different wavelengths. The Orb uses 740nm, 850nm and 940nm LEDs to capture a multispectral image of the iris to make the uniqueness algorithm more accurate and detect spoofing attempts.

The front PCB also hosts several multispectral imaging sensors. The most basic one is the wide angle camera, which is used for steering the telephoto iris camera. Since every human can only receive one proof of personhood and Worldcoin is giving away a free share of Worldcoin to every person who chooses to verify with the Orb, the incentives for fraud are high. Therefore, further imaging sensors for fraud prevention purposes were added.

When designing the fraud prevention system, the team started from first-principles reasoning: which measurable features do humans have? From there, the team experimented with many different sensors and eventually converged on a set that includes a near infrared wide angle camera, a 3D time of flight camera and a thermal camera. Importantly, the system was designed to enable maximum privacy. The computing unit of the Orb runs several AI algorithms in real time that locally distinguish spoofing attempts from genuine humans based on the input from those sensors. No images are stored unless users give explicit consent to help improve the system for everyone.

Biometrics

Following the exploration of iris biometrics as a choice of modality, this section provides a detailed look into the process of iris recognition from image capture to the uniqueness check:

- Biometric Performance at a Billion People Scale addresses the scalability of iris recognition technology. It discusses the potential of this biometric modality to establish uniqueness among billions of humans, examines various operating modes and anticipated error rates, and ultimately concludes that iris recognition is feasible at a global scale.

- Iris Feature Generation with Gabor Wavelets introduces the use of Gabor filtering for generating unique iris features, explaining the scientific principles behind this traditional method which is fundamental to understanding how iris recognition works.

- Iris Inference System explores the practical application of the previously discussed principles. This section describes the uniqueness algorithm and explains how it processes iris images to ensure accurate and scalable verification of uniqueness. This provides a comprehensive overview of the system's operation, demonstrating how theoretical principles translate into practical application.

Collectively, these sections offer a holistic overview of iris recognition, from the core scientific principles to their practical application in the Orb.

Biometric performance at a billion people scale

To roughly estimate the performance and accuracy required of a biometric algorithm operating at a billion people scale, assume a scenario with a fixed biometric model, i.e. one that is never updated, so that its performance values stay constant.

Failure Cases

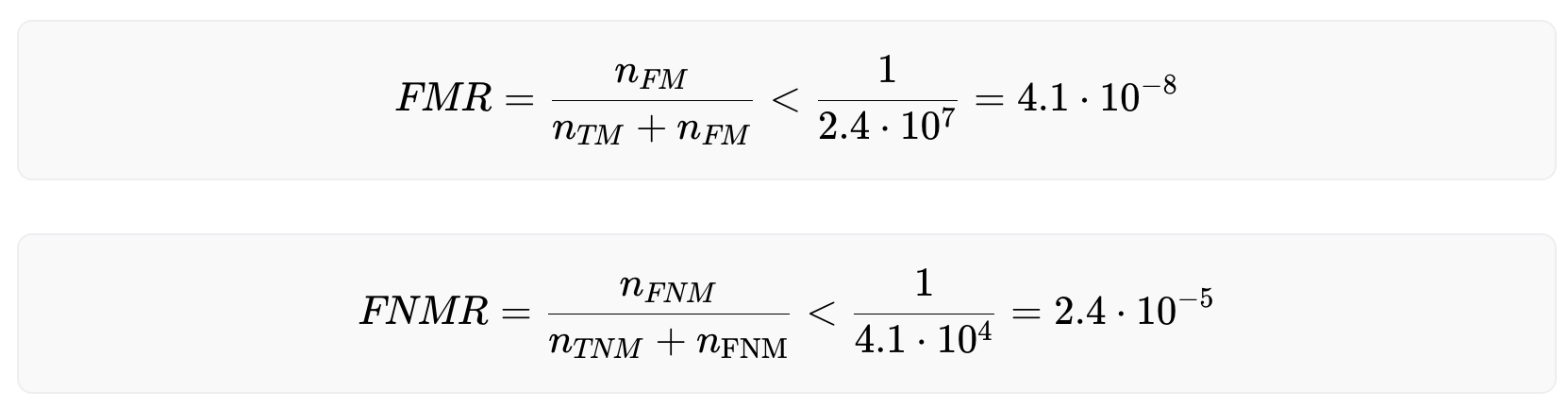

A biometric algorithm can fail in two ways: it can either identify a person as a different person, which is called a false match, or it can fail to re-identify a person who is already enrolled, which is called a false non-match. The corresponding rates, the false match rate (FMR) and the false non-match rate (FNMR), are the two critical KPIs for any biometric system.

For the purposes of this analysis, consider three different systems with varying levels of performance.

- One of the systems, as reported by John Daugman in his paper, demonstrates a false match rate of at a false non-match rate of 0.00014.

- Another system, represented by one of the leading iris recognition algorithms from NEC, has performance values as reported in the IREX IX report and IREX X leaderboard from the National Institute of Standards and Technology (NIST). These values include a false match rate of at a false non-match rate of 0.045.

- The third system, conceived during the early ideation stage of the Worldcoin project, represents a conservative estimate of how well iris recognition could perform outside the lab, i.e. in an uncontrolled, outdoor setting. Despite these constraints, it anticipated a false match rate of and a false non-match rate of 0.005. While not ideal, these values demonstrated that iris recognition was the most viable path to a global proof of personhood.

A more in-depth examination of how these values are obtained from various sources is also available.

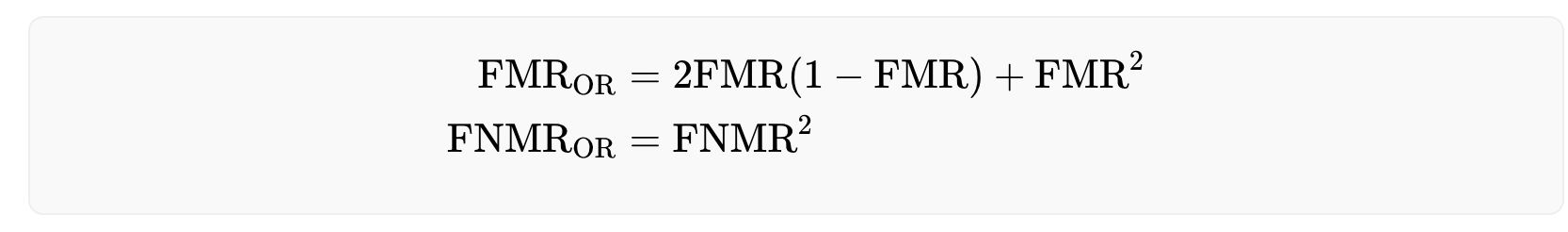

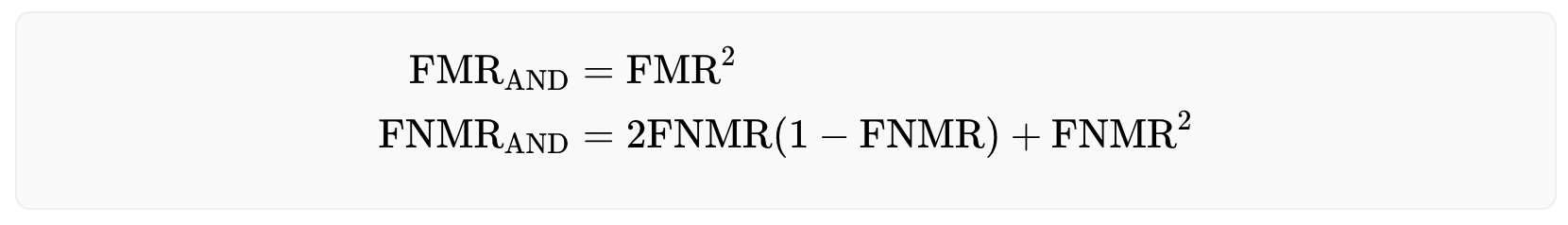

Effective Dual Eye Performance

The values mentioned above pertain to single-eye performance, which is determined by evaluating a collection of genuine and impostor iris pairs. However, using both eyes can significantly enhance the performance of a biometric system. There are various methods for combining information from both eyes; to evaluate their performance, consider two extreme cases:

- The AND-rule, in which a user is deemed to match only if their irises match on both eyes.

- The OR-rule, in which a user is considered a match if the iris of either eye matches the corresponding iris of another user.

The OR-rule offers a safer approach because a single iris match suffices to identify a registered user, which minimizes the risk of accepting the same person twice. Formally, the OR-rule reduces the false non-match rate while increasing the false match rate. However, as the number of registered users grows, the higher false match rate makes it increasingly difficult for legitimate users to enroll in the system. Assuming that errors on the two eyes are independent, the effective rates are:

$$\mathrm{FMR}_{\mathrm{OR}} = 1 - (1 - \mathrm{FMR})^{2} \approx 2\,\mathrm{FMR}, \qquad \mathrm{FNMR}_{\mathrm{OR}} = \mathrm{FNMR}^{2}$$

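The AND-rule, on the other hand, allows for a larger user base at the cost of less security, as the false match rate decreases and the false non-match rate increases. Under the same independence assumption, the effective rates for this approach are:

$$\mathrm{FMR}_{\mathrm{AND}} = \mathrm{FMR}^{2}, \qquad \mathrm{FNMR}_{\mathrm{AND}} = 1 - (1 - \mathrm{FNMR})^{2} \approx 2\,\mathrm{FNMR}$$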
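A minimal sketch of both fusion rules, assuming that errors on the left and right eye are statistically independent (real systems only approximate this). The single-eye operating point used in the example is a hypothetical placeholder.

```python
def or_rule(fmr: float, fnmr: float) -> tuple:
    """Effective (FMR, FNMR) when a match on either eye counts as a match."""
    eff_fmr = 1 - (1 - fmr) ** 2  # a false match on either eye is enough
    eff_fnmr = fnmr ** 2          # both eyes must fail to match
    return eff_fmr, eff_fnmr


def and_rule(fmr: float, fnmr: float) -> tuple:
    """Effective (FMR, FNMR) when both eyes must match."""
    eff_fmr = fmr ** 2              # both eyes must falsely match
    eff_fnmr = 1 - (1 - fnmr) ** 2  # a non-match on either eye is enough
    return eff_fmr, eff_fnmr


# Hypothetical single-eye operating point (illustrative values only).
single_eye = (1e-6, 0.005)
print("OR: ", or_rule(*single_eye))
print("AND:", and_rule(*single_eye))
```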

False Matches

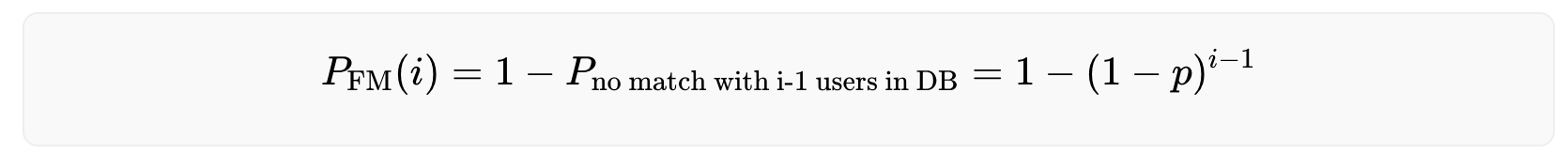

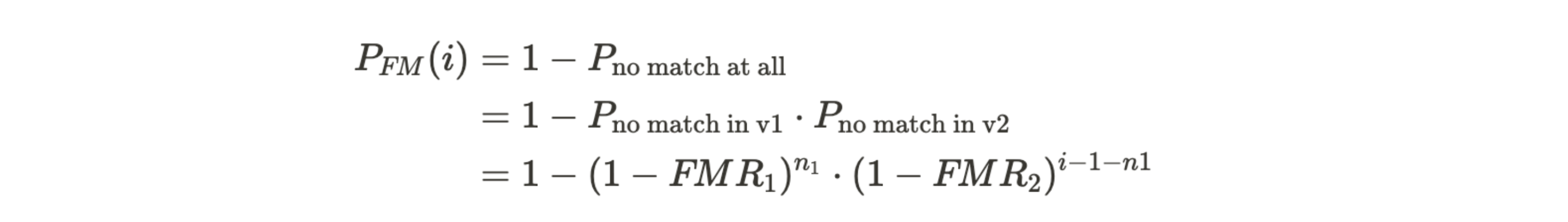

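The probability for the i-th (legitimate) user to run into a false match error, i.e. to falsely match at least one of the i-1 users already enrolled, can be calculated by the equation

$$P_{\mathrm{FM}}(i) = 1 - (1-p)^{i-1}$$

with p being the false match rate. Adding up these probabilities yields the expected number of false matches that have happened after the i-th user has enrolled, i.e. the number of falsely rejected users:

$$N_{\mathrm{FM}}(i) = \sum_{k=1}^{i}\left[1 - (1-p)^{k-1}\right] = i - \frac{1-(1-p)^{i}}{p}$$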
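The false-rejection model can be checked numerically with a short sketch. It assumes that each newly enrolling user is compared against every user enrolled before them, so the i-th user faces i-1 comparisons, each with false match rate p; the per-user probability is then `1 - (1 - p)**(i - 1)` and its running sum has the closed form `i - (1 - (1 - p)**i) / p`. The value of p below is hypothetical.

```python
def p_false_reject(i: int, p: float) -> float:
    """Probability that the i-th enrolling user falsely matches one of the i-1 users already enrolled."""
    return 1.0 - (1.0 - p) ** (i - 1)


def expected_false_rejects(i: int, p: float) -> float:
    """Expected number of falsely rejected users among the first i users (closed form)."""
    return i - (1.0 - (1.0 - p) ** i) / p


p = 1e-6  # hypothetical effective false match rate
for i in (10**5, 10**6, 10**7):
    print(i, round(p_false_reject(i, p), 4), round(expected_false_rejects(i, p)))
```

As i grows, `(1 - p)**(i - 1)` tends to zero, so the rejection probability of a new user approaches 1 for any fixed p > 0.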

A high false match rate significantly impacts the usability of the system, as the probability of false matches increases with a growing number of users in the database. Over time the probability of being (falsely) rejected as a new user converges to 100%, making it nearly impossible for new users to be accepted.

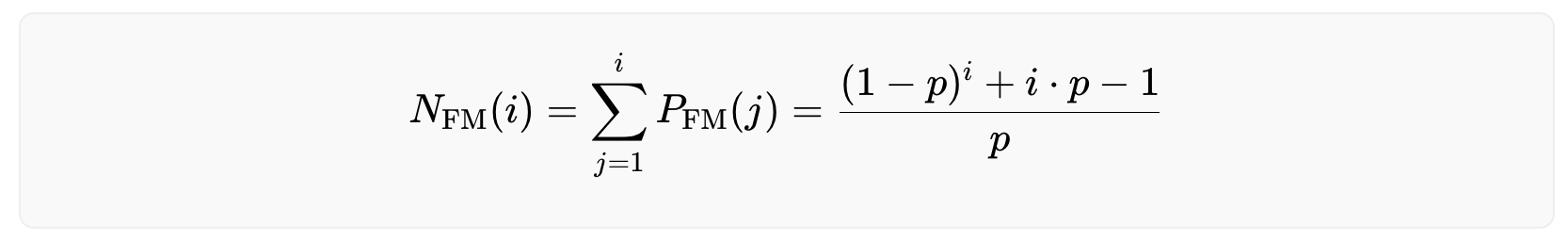

The following graph illustrates the performance of the biometric system using both the OR and AND rule. The graph is separated into two sections, with the left side representing the OR rule and the right side representing the AND rule. The top row of plots in the graph shows the probability of the i-th user being falsely rejected, and the bottom row of plots shows the expected number of users that have been falsely rejected after the i-th user has successfully enrolled. The different colors in the graph correspond to the three systems mentioned earlier: green represents Daugman’s system, blue represents NEC’s system, and red represents the initial worst case estimate.

The main finding of the analysis is that with the OR-rule, the system breaks down with just a few million users, as new users become increasingly likely to be falsely rejected. In comparison, the AND-rule provides a more sustainable solution for a growing user base.
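The breakdown of the OR-rule can be quantified with a back-of-the-envelope sketch: solve `1 - (1 - p)**(i - 1) >= 0.5` for the number of users i at which a new user is falsely rejected with at least 50% probability. The single-eye FMR of 1e-7 is a hypothetical value chosen for illustration, and the two eyes are again assumed to be independent.

```python
import math


def users_until_coin_flip(p: float) -> int:
    """Smallest i for which the i-th new user is falsely rejected with probability >= 1/2.

    Solves 1 - (1 - p)**(i - 1) >= 0.5 for i; log1p keeps precision for tiny p.
    """
    return 1 + math.ceil(math.log(2) / -math.log1p(-p))


single_eye_fmr = 1e-7                   # hypothetical single-eye false match rate
or_fmr = 1 - (1 - single_eye_fmr) ** 2  # OR-rule, independent eyes
and_fmr = single_eye_fmr ** 2           # AND-rule, independent eyes
print(f"OR-rule:  {users_until_coin_flip(or_fmr):.2e} users")
print(f"AND-rule: {users_until_coin_flip(and_fmr):.2e} users")
```

With these assumed numbers the OR-rule reaches the 50% mark at roughly 3.5 million users, while the AND-rule pushes it to about 7 × 10^13, far beyond the world population.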

Further, even the difference between the worst-case and the best-case estimates of current technology matters. The performance of the biometric algorithms designed by Tools for Humanity has been continuously improving due to ongoing research. This has been achieved by pushing beyond the state of the art, replacing various components of the uniqueness verification process with deep learning models, which also significantly improves robustness to real-world edge cases. At the time of writing, the algorithm's performance in an uncontrolled environment closely resembled the green graph depicted in the figure above (depending on the exact choice of the FNMR). This is a noteworthy accomplishment in and of itself. Nonetheless, further improvements in the algorithm's performance are expected from ongoing research. The ideal is an error rate that vanishes in practice, even on a global scale.

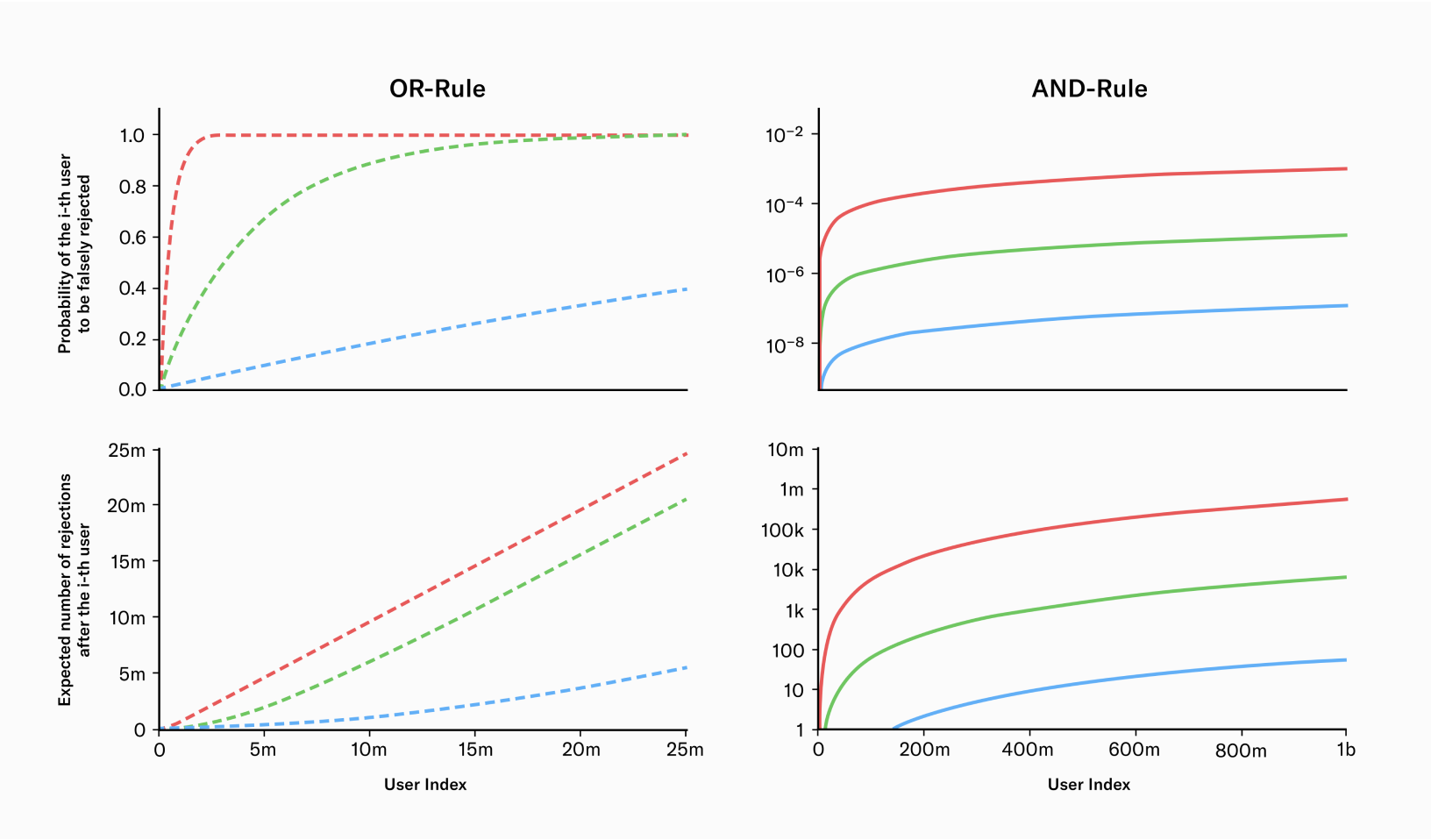

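Note that for a large number of users (i≫1) and a very performant biometric system (p≪1), evaluating the closed form $N_{\mathrm{FM}}(i) = i - \frac{1-(1-p)^{i}}{p}$ becomes numerically unstable: $(1-p)^{i}$ is extremely close to 1, so the subtraction in the numerator cancels almost all significant digits. To calculate the number of rejected users in such a scenario, Taylor expand the critical part of the equation around small values of p:

$$(1-p)^{i} = 1 - ip + \binom{i}{2}p^{2} - \binom{i}{3}p^{3} + \dots$$

Inserting this into the closed form yields

$$N_{\mathrm{FM}}(i) \approx \binom{i}{2}p - \binom{i}{3}p^{2} \approx \frac{i(i-1)}{2}\,p$$

which is a valid approximation as long as $ip \ll 1$, since each further term is smaller than the previous one by a factor of roughly $ip/3$.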
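The numerical issue can be seen by comparing the closed form `N(i) = i - (1 - (1 - p)**i) / p` with the two leading terms of its expansion, `C(i, 2) p - C(i, 3) p**2`. The sketch below uses hypothetical values of i and p. In double precision, `1 - p` cannot be represented exactly when p is very small, and the subsequent subtractions cancel most significant digits.

```python
import math


def n_false_rejects_closed_form(i: int, p: float) -> float:
    """Direct evaluation of N(i) = i - (1 - (1 - p)**i) / p; loses precision when p is tiny."""
    return i - (1.0 - (1.0 - p) ** i) / p


def n_false_rejects_taylor(i: int, p: float) -> float:
    """Two leading terms of the expansion: C(i, 2) p - C(i, 3) p**2 (valid while i*p << 1)."""
    return math.comb(i, 2) * p - math.comb(i, 3) * p ** 2


# Regime where both evaluations are reliable: they agree closely.
print(n_false_rejects_closed_form(1_000, 1e-6), n_false_rejects_taylor(1_000, 1e-6))
# Regime from the text (i >> 1, p << 1): trust the expansion, not the closed form.
print(n_false_rejects_closed_form(10**6, 1e-14), n_false_rejects_taylor(10**6, 1e-14))
```

In the second case the closed form should not be trusted, whereas the expansion stays accurate as long as i·p ≪ 1.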

False Non-Matches

When it comes to fraudulent users, i.e. users trying to enroll a second time, the probability of them not being matched stays constant and does not increase with the number of users in the system. This is because only one enrolled iris can cause a false non-match: the user's own iris from their previous enrollment. Thus, the probability of encountering a false non-match is simply the false non-match rate,

$$P_{\mathrm{FNM}} = \mathrm{FNMR}$$

The expected number of false non-matches can be calculated with

$$N_{\mathrm{FNM}}(j) = j \cdot \mathrm{FNMR}$$

with j indicating the j-th untrustworthy user who tries to fool the system.
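A small sketch contrasting this with the false-match case: the expected count grows only linearly in the number j of fraudulent attempts, because the per-attempt probability does not depend on the database size. The Monte Carlo check and the effective FNMR of 0.01 are illustrative assumptions.

```python
import random


def expected_false_non_matches(j: int, fnmr: float) -> float:
    """Expected number of duplicate enrollments that slip through after j fraudulent attempts."""
    # Each attempt has a single relevant template (the person's own),
    # so the per-attempt probability stays fnmr regardless of database size.
    return j * fnmr


def simulate_false_non_matches(j: int, fnmr: float, seed: int = 0) -> int:
    """Monte Carlo check: count attempts in which the re-enrolling user is not matched."""
    rng = random.Random(seed)
    return sum(rng.random() < fnmr for _ in range(j))


fnmr = 0.01  # hypothetical effective false non-match rate (e.g. after the AND-rule)
j = 100_000
print(expected_false_non_matches(j, fnmr), simulate_false_non_matches(j, fnmr))
```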

Conclusion

Iris recognition can therefore establish uniqueness on a global scale. Further, to onboard billions of individuals, the algorithm needs to use the AND-rule; otherwise, the rejection rate will be too high and it will be practically impossible to onboard billions of users.

The current performance already exceeds the original conservative estimate, and the project expects the system to eventually surpass current state-of-the-art lab performance, even in an uncontrolled environment. On the one hand, the custom hardware comprises an imaging system that outperforms typical iris scanners by more than an order of magnitude in image resolution. On the other hand, recent advances in deep learning and computer vision offer promising directions towards a “deep feature generator”: a feature generation algorithm that does not rely on handcrafted rules but learns from data. The field of iris recognition has not yet leveraged this technology.

Iris Feature Generation with Gabor Wavelets

The objective of iris feature generation algorithms is to generate the most discriminative features from iris images while reducing the dimensionality of the data by removing irrelevant or redundant information. Unlike 2D face images, which are mostly defined by edges and shapes, iris images present rich and complex texture with repeating (semi-periodic) patterns of local variations in image intensity. In other words, iris images contain strong signals in both the spatial and frequency domains and should be analyzed in both. Examples of iris images can be found on John Daugman's website.

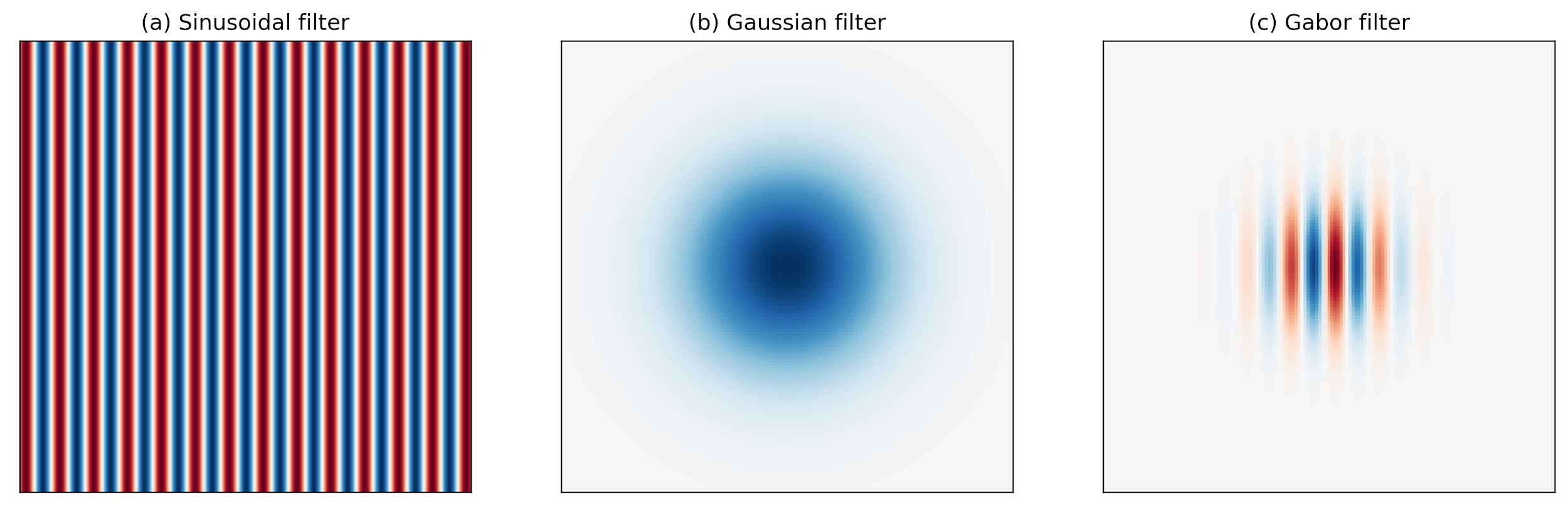

Gabor filtering

Research has shown that the localized frequency and orientation representation of Gabor filters is very similar to the way the human visual cortex represents and discriminates texture. A Gabor filter analyzes the content at a specific frequency and orientation within a local region of an image. It has been widely used in signal and image processing for its optimal joint compactness in the spatial and frequency domains.

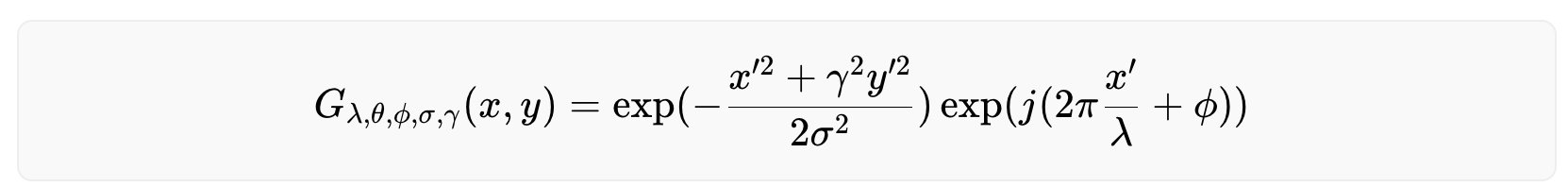

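As shown above, a Gabor filter can be viewed as a sinusoidal signal of a particular frequency and orientation modulated by a Gaussian envelope. Mathematically, it can be defined as

$$g(x, y; \lambda, \theta, \phi, \sigma, \gamma) = \exp\left(-\frac{x'^{2} + \gamma^{2} y'^{2}}{2\sigma^{2}}\right) \exp\left(i\left(2\pi\frac{x'}{\lambda} + \phi\right)\right)$$

with

$$x' = x\cos\theta + y\sin\theta, \qquad y' = -x\sin\theta + y\cos\theta$$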

Among the parameters, σ and γ represent the standard deviation and the spatial aspect ratio of the Gaussian envelope, respectively, λ and ϕ are the wavelength and phase offset of the sinusoidal factor, respectively, and θ is the orientation of the Gabor function. Depending on its tuning, a Gabor filter can resolve pixel dependencies best described by narrow spectral bands. At the same time, its spatial compactness accommodates spatial irregularities.
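The Gabor function (a Gaussian envelope with standard deviation σ and aspect ratio γ, multiplied by a complex sinusoid with wavelength λ, phase offset ϕ and orientation θ) translates directly into a sampled kernel. The following NumPy sketch evaluates it on a square grid; the function name `gabor_kernel` and the example parameter values are assumptions for illustration, not the filters deployed on the Orb.

```python
import numpy as np


def gabor_kernel(size: int, sigma: float, theta: float, lam: float,
                 gamma: float = 1.0, phi: float = 0.0) -> np.ndarray:
    """Complex Gabor kernel sampled on an odd-sized (size x size) grid centred at 0."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotate the coordinates by theta: x_r runs along the carrier, y_r across it.
    x_r = x * np.cos(theta) + y * np.sin(theta)
    y_r = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_r**2 + gamma**2 * y_r**2) / (2 * sigma**2))
    carrier = np.exp(1j * (2 * np.pi * x_r / lam + phi))
    return envelope * carrier


k = gabor_kernel(31, sigma=4.0, theta=np.pi / 4, lam=10.0, gamma=0.5)
print(k.shape, k.dtype)  # (31, 31) complex128
```

With ϕ = 0 the real part of the kernel is even-symmetric and the imaginary part odd-symmetric, which is the quadrature pair discussed below.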

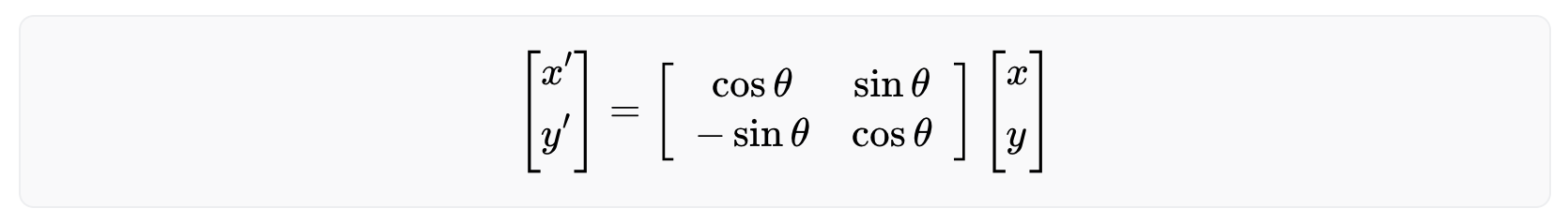

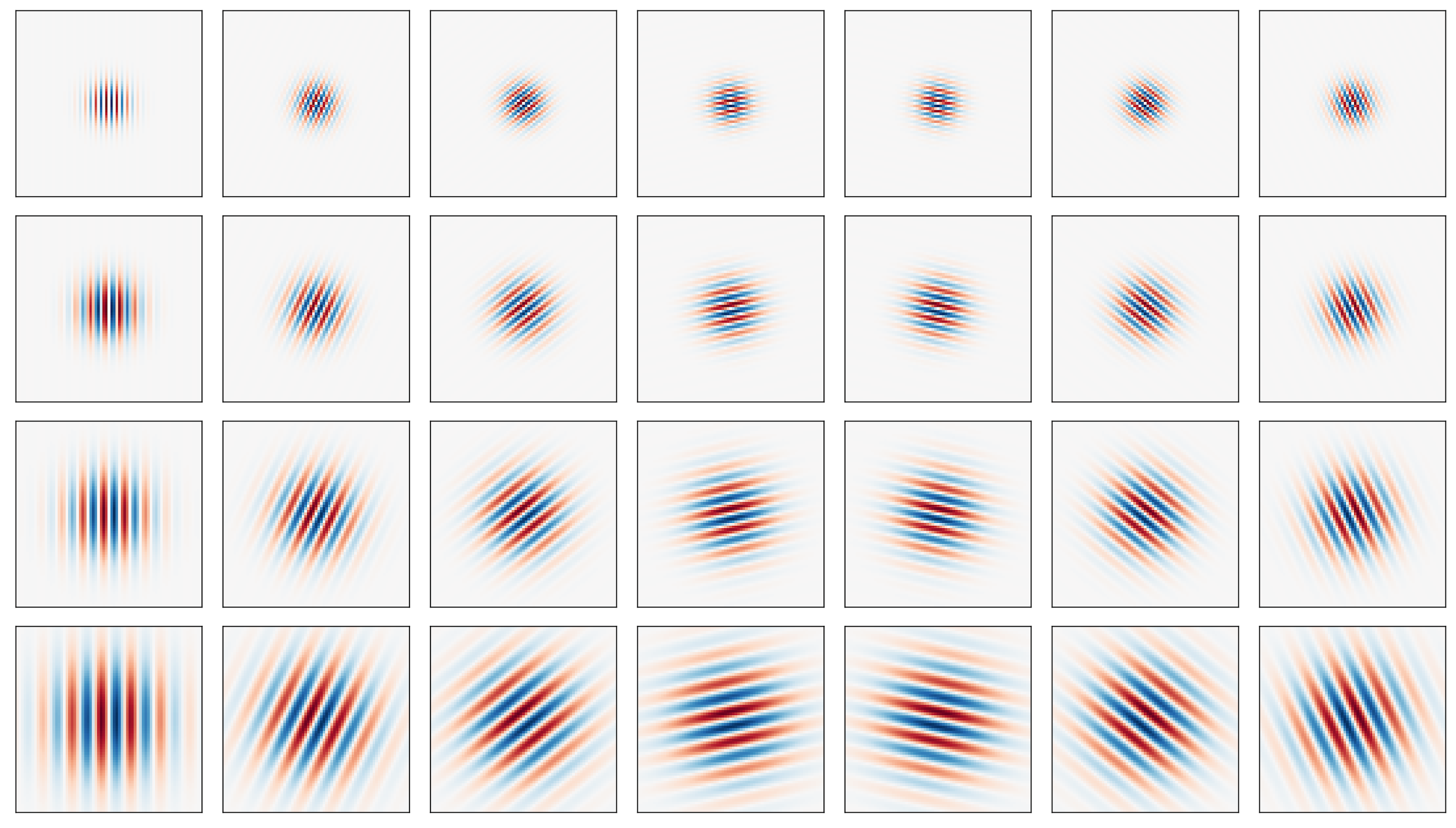

The following figure shows a series of Gabor filters oriented at 45 degrees with increasing spectral selectivity. While the leftmost Gabor wavelet resembles a Gaussian, the rightmost follows a harmonic function and selects a very narrow band of the spectrum. The filters best suited for iris feature generation lie between these two extremes.

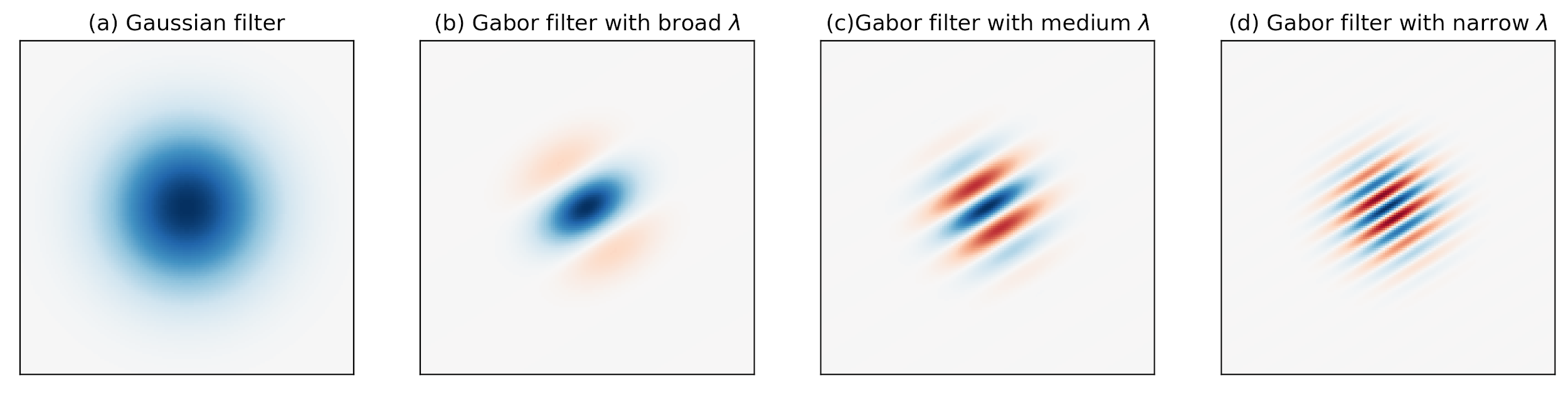

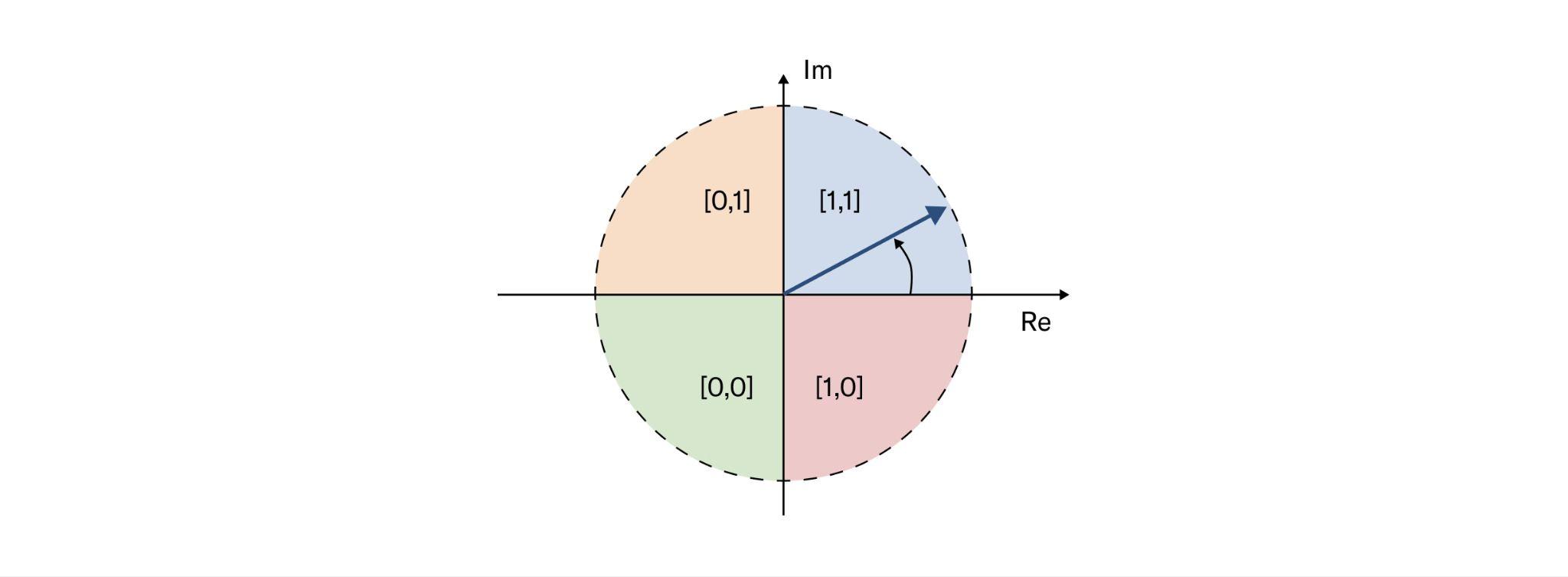

Because a Gabor filter is complex, its real and imaginary parts act as two filters in quadrature. More specifically, as shown in the figures below, (a) the real part is even-symmetric and gives a strong response to features such as lines, while (b) the imaginary part is odd-symmetric and gives a strong response to features such as edges. It is important to maintain a zero DC component in the even-symmetric filter (the odd-symmetric filter already has zero DC). This ensures a zero filter response on a constant region of an image, regardless of the image intensity.
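One common way to enforce a zero DC component is to subtract a scaled copy of the Gaussian envelope from the even filter, as in this NumPy sketch. The isotropic envelope (γ = 1), ϕ = 0 and the parameter values are illustrative assumptions; other corrections are also used in practice.

```python
import numpy as np


def gabor_pair(size: int = 33, sigma: float = 4.0, theta: float = np.pi / 4, lam: float = 8.0):
    """Even (real) and odd (imaginary) parts of an isotropic Gabor filter (gamma = 1, phi = 0)."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    envelope = np.exp(-(x**2 + y**2) / (2 * sigma**2))
    x_r = x * np.cos(theta) + y * np.sin(theta)
    even = envelope * np.cos(2 * np.pi * x_r / lam)  # responds to lines
    odd = envelope * np.sin(2 * np.pi * x_r / lam)   # responds to edges
    return even, odd, envelope


even, odd, envelope = gabor_pair()
print("DC before correction:", even.sum(), odd.sum())
# Subtract a scaled copy of the Gaussian envelope so that the even filter sums to zero.
even_zero_dc = even - even.sum() / envelope.sum() * envelope

patch = np.full(even.shape, 0.8)  # constant image region, arbitrary intensity
print((patch * even_zero_dc).sum(), (patch * odd).sum())  # both ~0 up to rounding
```

Subtracting the envelope rather than a constant keeps the correction localized, so the even filter still decays smoothly at its borders.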

Multi-scale Gabor filtering

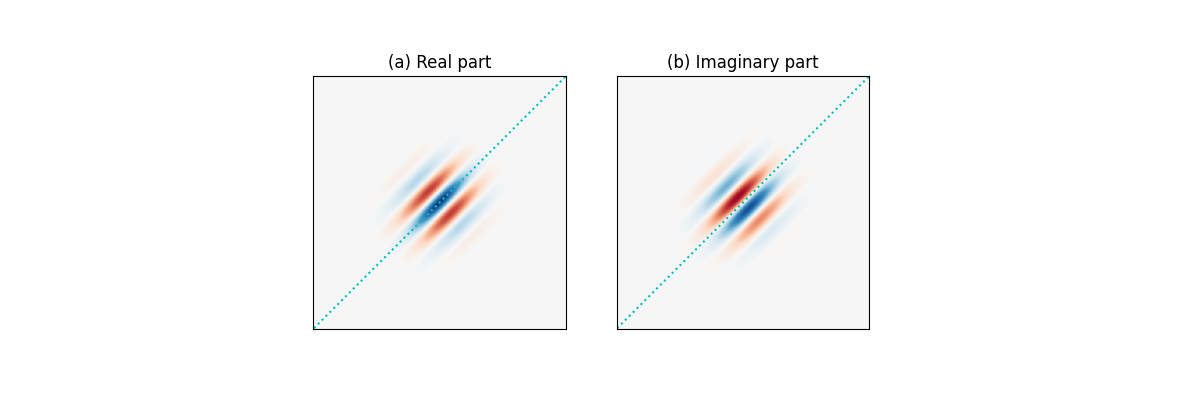

Like most textures, iris texture lives on multiple scales (controlled by ). It is therefore natural to represent it using filters of multiple sizes. Many such multi-scale filter systems follow the wavelet building principle: the kernels (filters) in each layer are scaled versions of the kernels in the previous layer and, in turn, scaled versions of a mother wavelet. This eliminates redundancy and leads to a more compact representation. Gabor wavelets can further be tuned by orientation, specified by θ. The figure below shows the real part of 28 Gabor wavelets with four scales and seven orientations.
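The wavelet building principle can be sketched as a filter bank in which every scale is a dilated copy of the previous one: the wavelength λ doubles per scale and σ is kept proportional to λ, so the kernel shape is preserved while its size grows. The octave spacing, σ = λ/2, the base wavelength and the ±3σ support are assumptions for illustration; with four scales and seven orientations the bank contains 28 filters, as in the figure.

```python
import numpy as np


def gabor_kernel(size: int, sigma: float, theta: float, lam: float) -> np.ndarray:
    """Isotropic complex Gabor kernel (gamma = 1, phi = 0) on a size x size grid."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    x_r = x * np.cos(theta) + y * np.sin(theta)
    envelope = np.exp(-(x**2 + y**2) / (2 * sigma**2))
    return envelope * np.exp(2j * np.pi * x_r / lam)


def gabor_bank(n_scales: int = 4, n_orient: int = 7, base_lam: float = 4.0):
    """Multi-scale, multi-orientation bank built by dilating one mother wavelet."""
    bank = []
    for s in range(n_scales):
        lam = base_lam * 2 ** s                  # octave spacing between scales
        sigma = lam / 2                          # envelope grows with the wavelength
        size = 2 * int(np.ceil(3 * sigma)) + 1   # cover roughly +/- 3 sigma
        for o in range(n_orient):
            bank.append(gabor_kernel(size, sigma, o * np.pi / n_orient, lam))
    return bank


bank = gabor_bank()
print(len(bank), [k.shape for k in bank[::7]])  # 28 [(13, 13), (25, 25), (49, 49), (97, 97)]
```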

Phase-quadrant demodulation and encoding

After a Gabor filter is applied to an iris image, the filter response at each analyzed region is demodulated to extract its phase information. As illustrated in the figure below, this demodulation identifies the quadrant of the complex plane into which each filter response falls. Note that only phase information is recorded because it is more robust than the magnitude, which can be contaminated by extraneous factors such as illumination, imaging contrast, and camera gain.

Another desirable property of phase-quadrant demodulation is that it produces a cyclic (Gray) code. In a plain binary code, moving between two adjacent quadrants can flip two bits at once, making some errors arbitrarily more costly than others; a cyclic code changes exactly one bit between any two adjacent phase quadrants. Importantly, when a response falls very close to the boundary between adjacent quadrants, the resulting bit is considered fragile. Fragile bits are usually less stable and can flip due to changes in illumination, blurring or noise. There are many methods to deal with fragile bits; one is to assign them lower weights during matching.
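Phase-quadrant encoding and fragile-bit handling fit in a few lines of NumPy. In this sketch the first bit is the sign of the real part and the second the sign of the imaginary part, which yields the one-bit-per-step cyclic sequence. The 10% fragility threshold and the choice to give fragile bits zero weight in `masked_hamming` are illustrative assumptions, not the deployed method.

```python
import numpy as np


def encode_phase(responses: np.ndarray, fragile_frac: float = 0.1):
    """Quantize complex filter responses into 2 bits each, plus a per-bit 'fragile' mask.

    Bit 0 is the sign of the real part and bit 1 the sign of the imaginary part,
    which orders the four quadrants as a Gray code: 11 -> 01 -> 00 -> 10 -> 11.
    """
    re, im = responses.real, responses.imag
    bits = np.stack([re >= 0, im >= 0], axis=-1).astype(np.uint8)
    mag = np.abs(responses) + 1e-12
    # A bit is fragile when its component is small relative to the response magnitude,
    # i.e. the response lies close to the boundary between two quadrants.
    fragile = np.stack([np.abs(re) < fragile_frac * mag,
                        np.abs(im) < fragile_frac * mag], axis=-1)
    return bits, fragile


def masked_hamming(b1, b2, f1, f2) -> float:
    """Fractional Hamming distance that gives zero weight to bits fragile in either code."""
    valid = ~(f1 | f2)
    n_valid = np.count_nonzero(valid)
    return np.count_nonzero((b1 != b2) & valid) / n_valid if n_valid else 0.0


angles = np.deg2rad([45, 135, 225, 315])  # centres of the four quadrants
bits, _ = encode_phase(np.exp(1j * angles))
print(bits.tolist())  # [[1, 1], [0, 1], [0, 0], [1, 0]]
```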

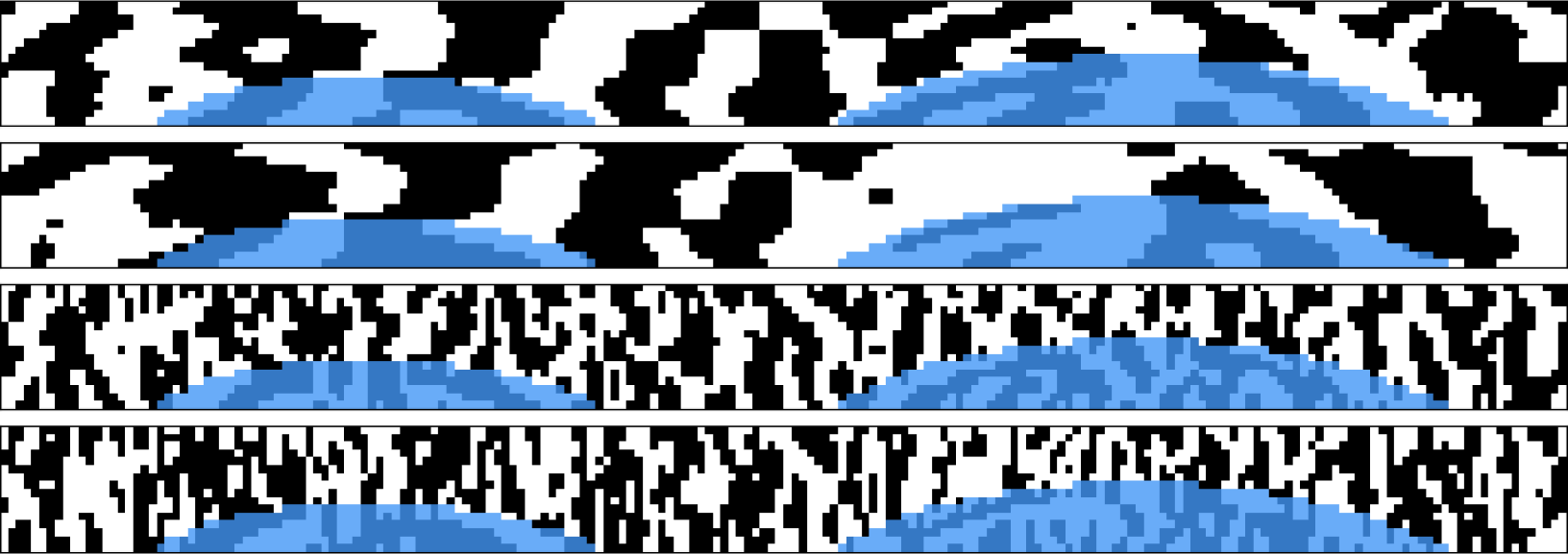

When multi-scale Gabor filtering is applied to a given iris image, multiple iris codes are produced accordingly and concatenated to form the final iris template. Depending on the number of filters and their stride factors, an iris template can be several orders of magnitude smaller than the original iris image.
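The size reduction is simple arithmetic: each filter contributes two phase bits per sampled location. All sizes below (normalized iris resolution, strides, camera resolution) are hypothetical and only illustrate the calculation.

```python
def template_bits(height: int, width: int, n_filters: int, stride_y: int, stride_x: int) -> int:
    """Size of an iris template: 2 phase bits per filter at each sampled location."""
    return n_filters * 2 * (height // stride_y) * (width // stride_x)


def image_bits(height: int, width: int, bit_depth: int = 8) -> int:
    """Size of an uncompressed grayscale image."""
    return height * width * bit_depth


# Hypothetical sizes, chosen only to illustrate the arithmetic.
code = template_bits(64, 512, n_filters=28, stride_y=8, stride_x=8)  # e.g. a 4 x 7 bank
raw = image_bits(1080, 1440)
print(code, raw, raw / code)
```

Larger strides or fewer filters shrink the template further, at the cost of discarding more texture information.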

Robustness of iris codes

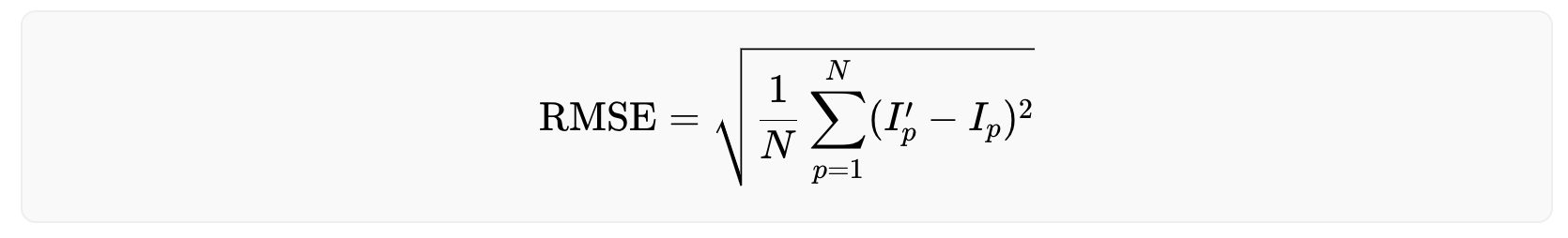

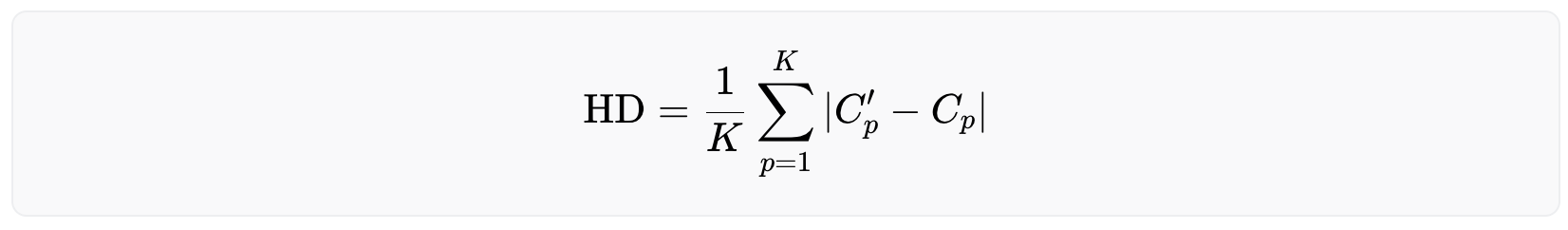

Because iris codes are generated from the phase responses of Gabor filtering, they are rather robust against illumination changes, blurring and noise. To quantify this, each effect, namely illumination (gamma correction), blurring (Gaussian filtering) and Gaussian noise, is separately applied to an iris image in slowly increasing amounts, and the resulting drift of the iris code is measured. The amount of added effect is measured by the Root Mean Square Error (RMSE) of pixel values between the modified and original image, and the amount of drift by the Hamming distance between the new and original iris code. Mathematically, RMSE is defined as:

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{n=1}^{N}\left(I_n - I'_n\right)^{2}}$$

where N is the number of pixels in the original image I and the modified image I′. The Hamming distance is defined as:

$$\mathrm{HD} = \frac{1}{K}\sum_{k=1}^{K} C_k \oplus C'_k$$

where K is the number of bits (0/1) in the original iris code C and the new iris code C′. A Hamming distance of 0 means a perfect match, while 1 means the iris codes are completely opposite. The Hamming distance between two randomly generated iris codes is around 0.5.
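Both metrics are short NumPy functions. The sketch below also checks the reference points mentioned above: identical codes give 0, complementary codes give 1, and independent random codes land near 0.5. The function names and the random codes are illustrative.

```python
import numpy as np


def rmse(image: np.ndarray, modified: np.ndarray) -> float:
    """Root mean square error between two images with the same number of pixels N."""
    diff = image.astype(float) - modified.astype(float)
    return float(np.sqrt(np.mean(diff ** 2)))


def hamming_distance(code: np.ndarray, new_code: np.ndarray) -> float:
    """Fraction of the K bits that differ between two iris codes (0 = identical, 1 = opposite)."""
    return np.count_nonzero(code != new_code) / code.size


rng = np.random.default_rng(42)
a, b = rng.integers(0, 2, size=(2, 10_000))
print(hamming_distance(a, a), hamming_distance(a, 1 - a), round(hamming_distance(a, b), 2))
```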

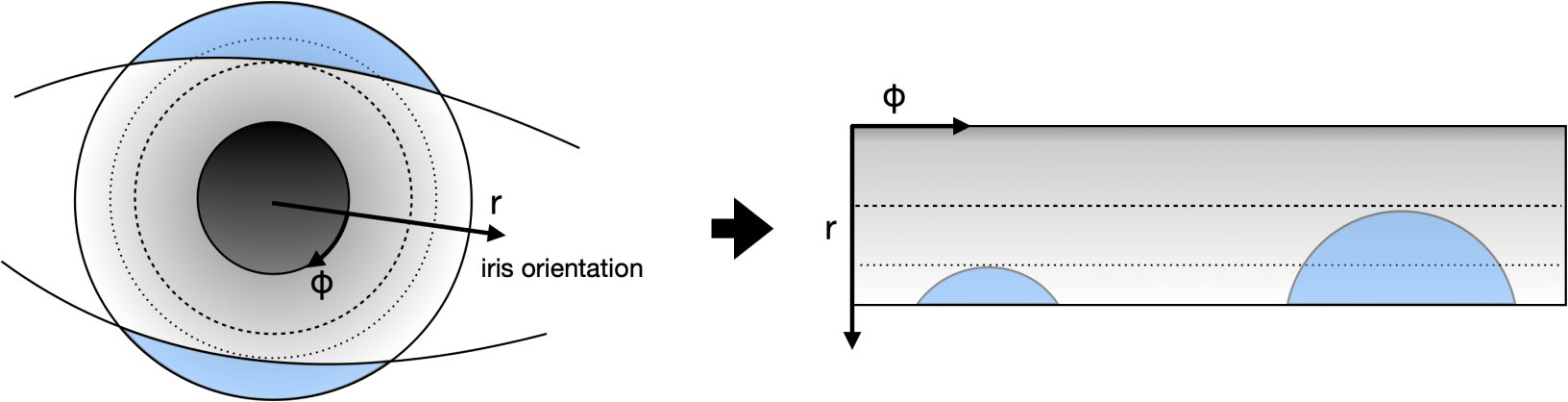

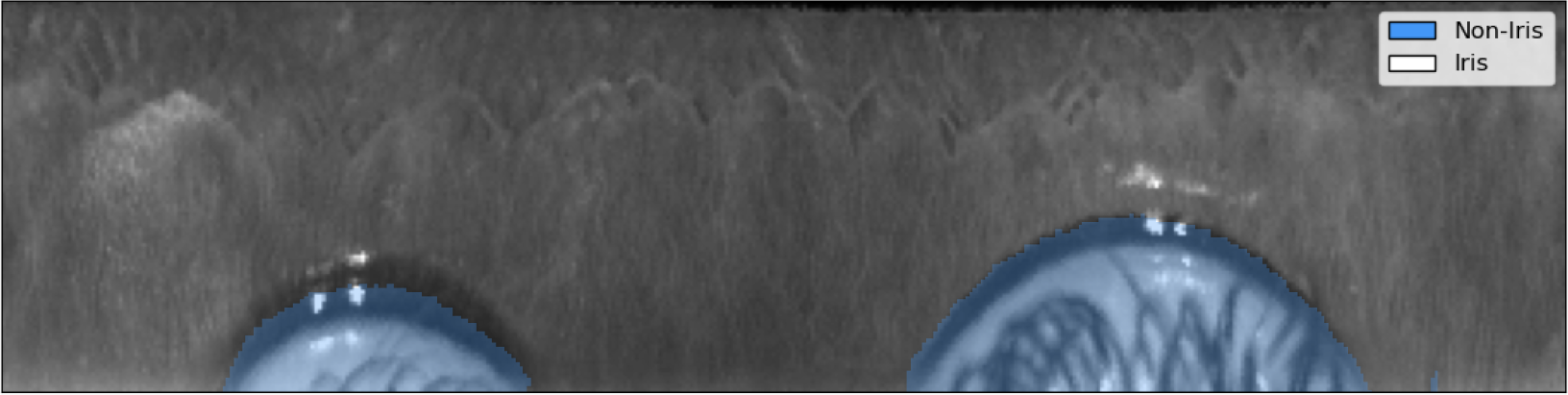

The following figures illustrate, both visually and quantitatively, the impact of illumination, blurring and noise on the robustness of iris codes. For illustration purposes, these results were not generated with the filters actually deployed, but they nevertheless demonstrate the general properties of Gabor filtering. Also, the iris image has been normalized from its donut shape in Cartesian coordinates to a fixed-size rectangle in polar coordinates. This step is necessary to standardize the format, mask out occlusions and enhance the iris texture.

As shown in the figure below, iris codes are very robust against the grey-level transformations associated with illumination, as the Hamming distance (HD) barely changes with increasing RMSE. This is because increasing the brightness of pixels reduces the dynamic range of pixel values but barely affects the frequency or spatial properties of the iris texture.

Blurring, on the other hand, reduces image contrast and can compromise the iris texture. However, as shown below, iris codes remain relatively robust even when strong blurring makes the iris texture indiscernible to the naked eye. This is because the phase information from Gabor filtering captures the location and presence of texture rather than its strength. As long as the frequency or spatial properties of the iris texture are present, even if severely weakened, the iris codes remain stable. Note that blurring mainly removes high-frequency iris texture and therefore affects high-frequency Gabor filters more, which is why a bank of multi-scale Gabor filters is used.

Finally, larger changes in the iris codes are observed when Gaussian noise is added, as both the spatial and frequency components of the texture are polluted and more bits become fragile. Even when the iris texture is overwhelmed by noise and becomes indiscernible, the drift in the iris codes remains small, with a Hamming distance below 0.2 compared to about 0.5 for two random iris codes. This demonstrates the effectiveness of iris feature generation using Gabor filters even in the presence of noise.
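The experiment can be reproduced in spirit with the sketch below. It rests on several assumptions: a smoothed random texture stands in for a real normalized 64 × 256 iris, the filter bank (two scales, four orientations, σ = λ/4), the circular FFT convolution, the sampling stride of 4 and the perturbation strengths are arbitrary choices, and the printed numbers will not reproduce the figures exactly.

```python
import numpy as np


def filter2d(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Circular 2-D convolution via FFT (wraps around at the image borders)."""
    pad = np.zeros(img.shape, dtype=complex)
    kh, kw = kernel.shape
    pad[:kh, :kw] = kernel
    pad = np.roll(pad, (-(kh // 2), -(kw // 2)), axis=(0, 1))  # centre kernel on pixel (0, 0)
    return np.fft.ifft2(np.fft.fft2(img) * np.fft.fft2(pad))


def grid(sigma: float):
    """Sampling grid covering +/- 3 sigma and the isotropic Gaussian on it."""
    half = int(np.ceil(3 * sigma))
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    return x, y, np.exp(-(x**2 + y**2) / (2 * sigma**2))


def gabor(sigma: float, theta: float, lam: float) -> np.ndarray:
    x, y, g = grid(sigma)
    k = g * np.exp(2j * np.pi * (x * np.cos(theta) + y * np.sin(theta)) / lam)
    return k - k.real.sum() / g.sum() * g  # zero DC on the even (real) part


def blur(img: np.ndarray, sigma: float) -> np.ndarray:
    _, _, g = grid(sigma)
    return filter2d(img, g / g.sum()).real


def iris_code(img: np.ndarray, bank, stride: int = 4) -> np.ndarray:
    bits = []
    for k in bank:
        r = filter2d(img, k)[::stride, ::stride]
        bits += [r.real >= 0, r.imag >= 0]  # phase-quadrant bits
    return np.concatenate([b.ravel() for b in bits])


rng = np.random.default_rng(0)
# Stand-in for a normalized (polar, 64 x 256) iris: smoothed random texture in [0, 1].
img = blur(rng.random((64, 256)), 1.5)
img = (img - img.min()) / (img.max() - img.min())
bank = [gabor(s, t, 4 * s) for s in (2.0, 4.0) for t in np.arange(4) * np.pi / 4]
base = iris_code(img, bank)

perturbed = {
    "gamma 0.5": img ** 0.5,  # brighter image
    "blur sigma=2": blur(img, 2.0),
    "noise sd=0.1": np.clip(img + rng.normal(0, 0.1, img.shape), 0, 1),
}
for name, mod in perturbed.items():
    err = np.sqrt(np.mean((mod - img) ** 2))
    hd = np.mean(iris_code(mod, bank) != base)
    print(f"{name:13s} RMSE={err:.3f}  HD={hd:.3f}")
other = blur(rng.random((64, 256)), 1.5)  # unrelated texture for reference
print(f"{'unrelated':13s} HD={np.mean(iris_code(other, bank) != base):.3f}")
```

Sweeping the gamma exponent, blur σ or noise standard deviation step by step traces out HD-versus-RMSE curves like those in the figures.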

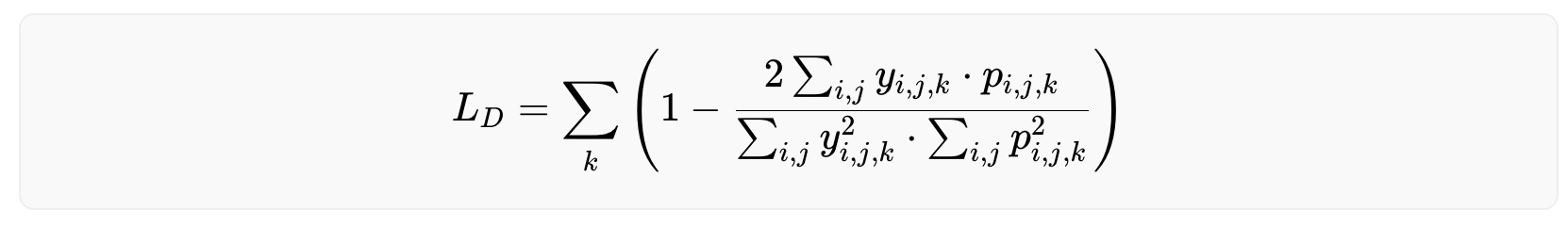

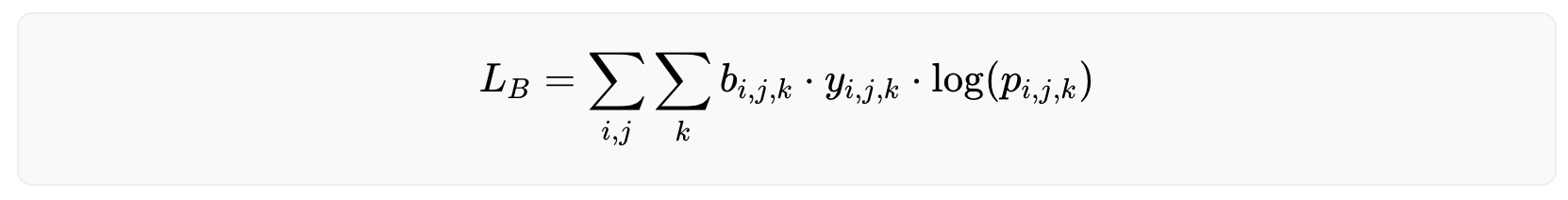

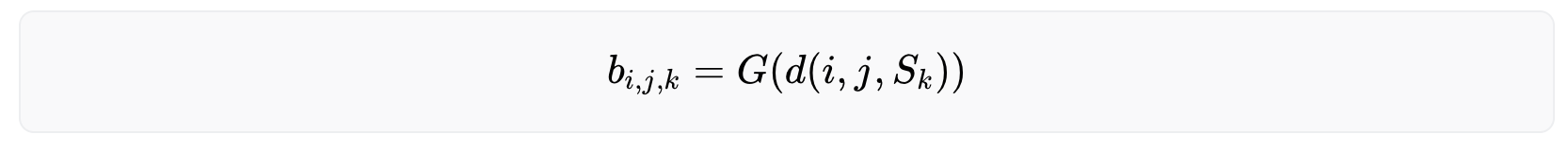

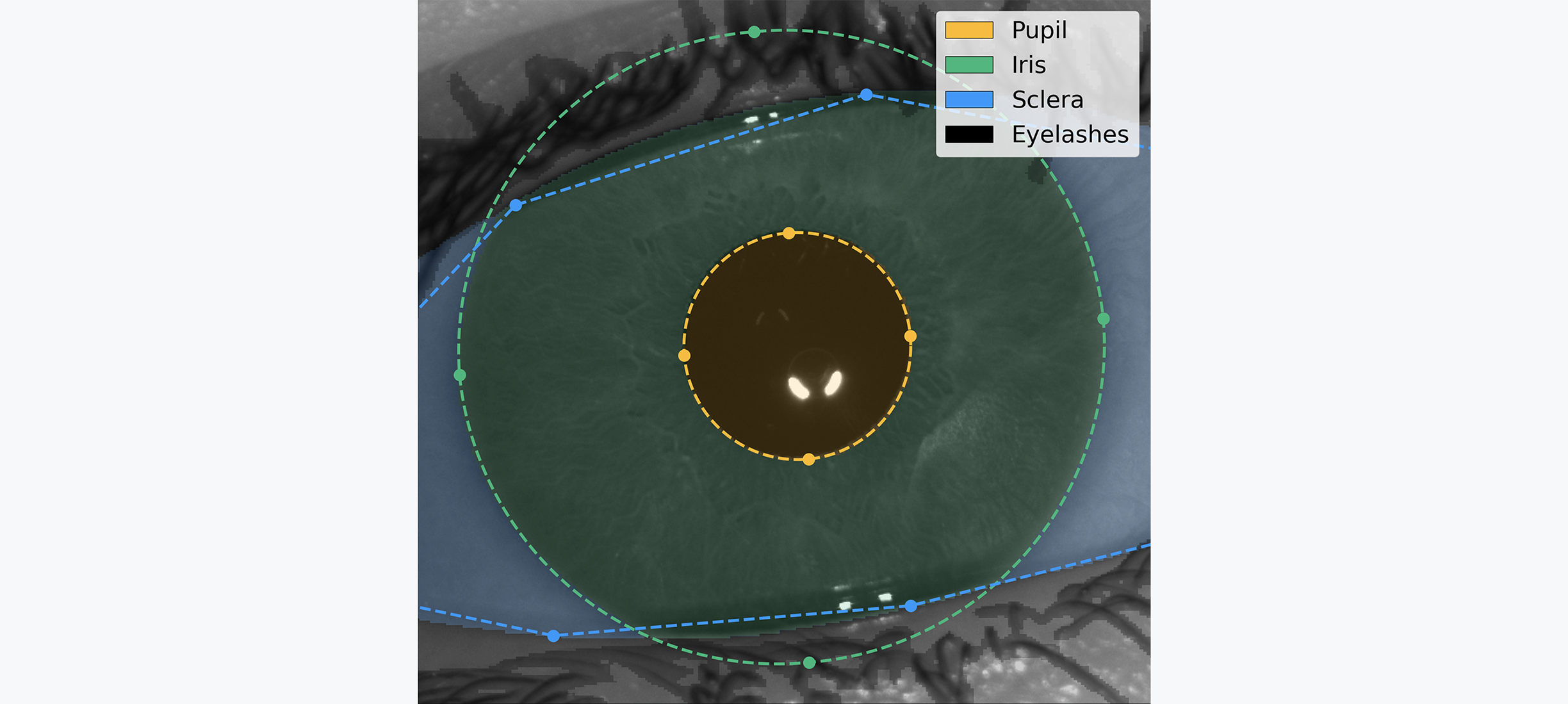

Conclusion